- A DragGAN demo is available, and the source code has now been released on GitHub.

DragGAN opens up a whole new category of image editing, where photorealistic images are customized by the user via drag & drop. The details are handled by a GAN.

Today's image manipulation tools, such as Photoshop, require a high degree of skill to flexibly and precisely control the position, shape, expression, or arrangement of individual objects. Another option is to generate entirely new images with generative AI such as Stable Diffusion or GANs, but these offer little control over the result.

With DragGAN, researchers from the Max Planck Institute for Informatics, the Saarbrücken Research Center for Visual Computing, MIT CSAIL, and Google demonstrate a new way to control GANs for image editing.

DragGAN: Drag and drop image processing

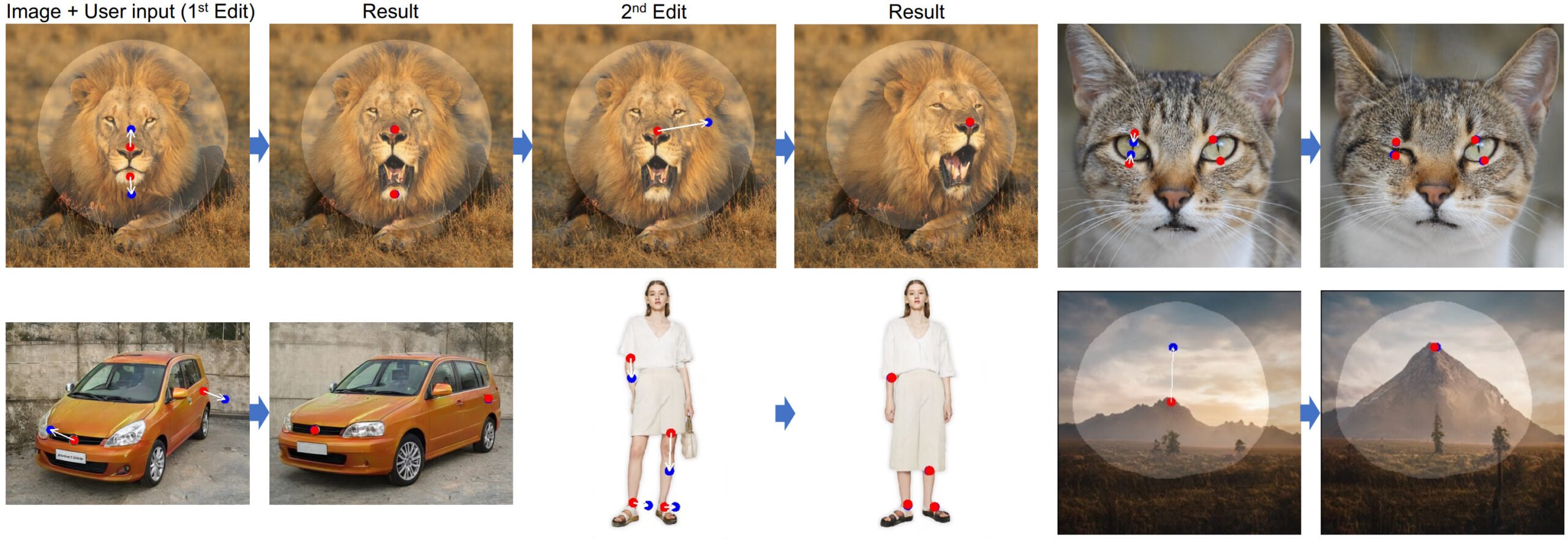

DragGAN can process photorealistic images as long as their content matches the categories of the GAN's training dataset. These include animals, cars, people, cells, and landscapes. In a simple interface, users define points in an image and drag them to the desired positions, for example, to close the eyes of a cat, rotate the head of a lion and open its mouth, or transform a car into another model.

Video: Pan et al.

DragGAN tracks these points and generates images corresponding to the desired changes.
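
To give a rough idea of what "tracking" a dragged point can mean, here is a minimal sketch, not the authors' implementation. It assumes that each image location has a feature vector and that a handle point is relocated after every edit step by searching a small window around its last position for the feature closest to the one it started with. The function name `track_point`, the toy feature map, and the choice of L1 distance are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

# Hypothetical feature map: indexed as [row][col] -> feature vector.
FeatureMap = List[List[Sequence[float]]]


def track_point(
    features: FeatureMap,
    ref_feature: Sequence[float],
    point: Tuple[int, int],
    radius: int = 2,
) -> Tuple[int, int]:
    """Return the (row, col) near `point` whose feature best matches `ref_feature`."""
    height, width = len(features), len(features[0])
    row0, col0 = point
    best, best_dist = point, float("inf")
    # Search only a small square window, clipped to the image bounds.
    for r in range(max(0, row0 - radius), min(height, row0 + radius + 1)):
        for c in range(max(0, col0 - radius), min(width, col0 + radius + 1)):
            # L1 distance between the candidate feature and the reference.
            dist = sum(abs(a - b) for a, b in zip(features[r][c], ref_feature))
            if dist < best_dist:
                best, best_dist = (r, c), dist
    return best


# Toy 5x5 feature map: every location is "background" except one handle.
grid = [[(0.0, 0.0) for _ in range(5)] for _ in range(5)]
grid[2][3] = (1.0, 1.0)  # the handle's feature has moved one pixel to the right
new_point = track_point(grid, ref_feature=(1.0, 1.0), point=(2, 2), radius=1)
print(new_point)  # (2, 3)
```

In this toy setup the handle started at (2, 2), and the search finds it again at (2, 3). A real system would run such a search on the generator's intermediate feature maps after each small update, which is far more involved than this sketch.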

DragGAN produces realistic outputs for challenging scenarios

"Through DragGAN, anyone can deform an image with precise control over where pixels go, thus manipulating the pose, shape, expression, and layout of diverse categories," the team said. "As these manipulations are performed on the learned generative image manifold of a GAN, they tend to produce realistic outputs even for challenging scenarios such as hallucinating occluded content and deforming shapes that consistently follow the object's rigidity."

In comparisons with other approaches, the team reports that DragGAN performs clearly better. However, some edits still produce artifacts when the requested result falls outside the training distribution.

More information is available in the paper, on Hugging Face, or on the DragGAN project page.