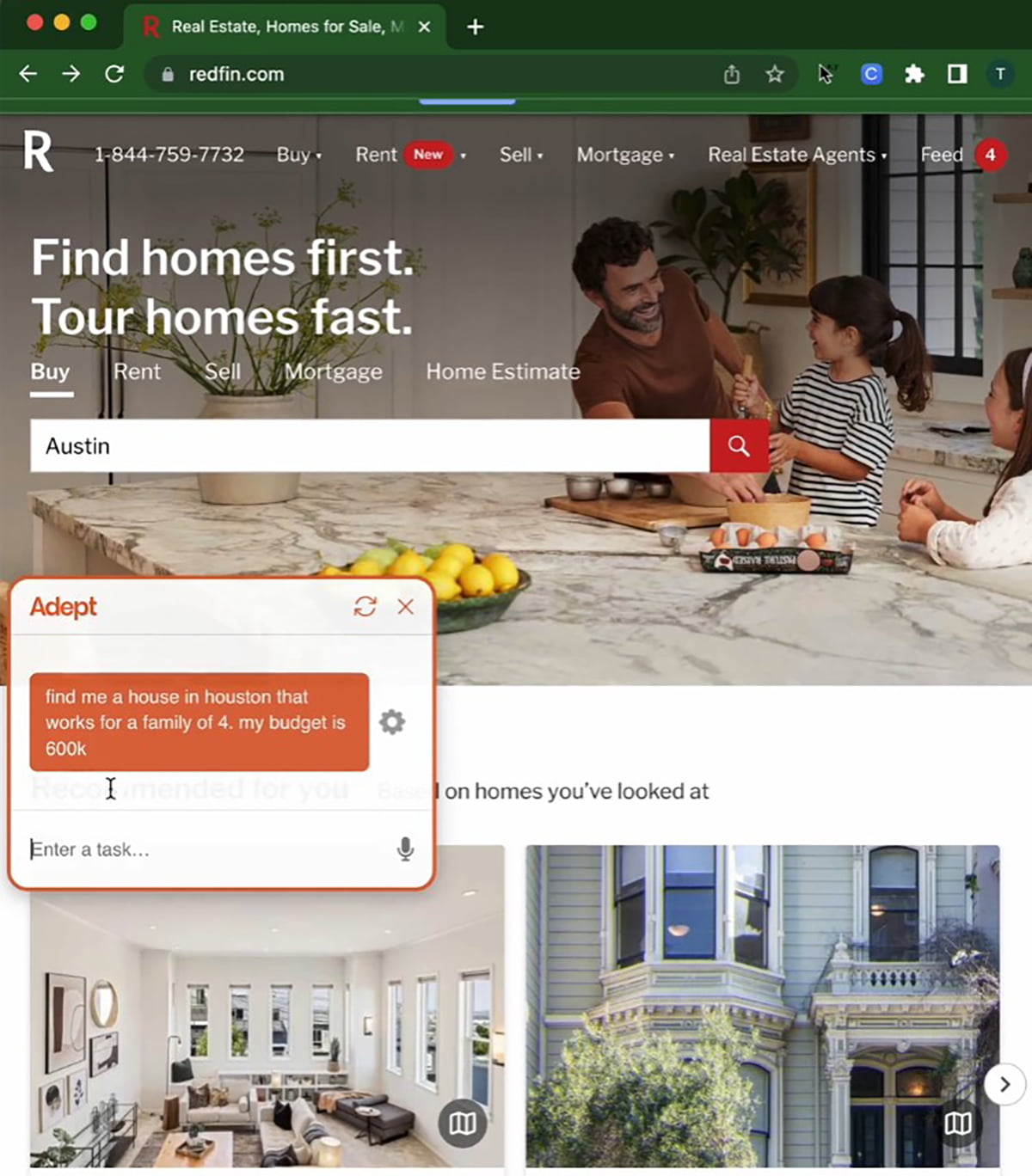

Adept recently introduced Fuyu-Heavy, a new multimodal AI model for digital agents. According to the company, Fuyu-Heavy is the third most capable multimodal model, behind GPT-4V and Gemini Ultra, and excels at multimodal reasoning and UI understanding. It performs well on traditional multimodal benchmarks and matches or exceeds models in its class on standard text-based benchmarks. The model scores similarly to Claude 2.0 on chat evaluations and slightly better than Gemini Pro on the MMMU benchmark. Fuyu-Heavy will soon power Adept's enterprise product, and lessons learned from its development have already been applied to its successor. The following video demonstrates the model's ability to understand a user interface.

Adept's multimodal Fuyu-Heavy model is adept at understanding UIs and inferring actions to take

Adept AI
