Content Hub: AI in Practice

Artificial intelligence is present in everyday life – from “googling” to facial recognition to robot vacuum cleaners. AI tools are becoming increasingly sophisticated and are helping people and companies work more effectively on tasks such as generating graphics, writing text or code, and interpreting large amounts of data.

Which AI tools are out there, how do they work, how do they help us in everyday life, and how are they changing our lives? These are the questions we address in our content hub "Artificial Intelligence in Practice."

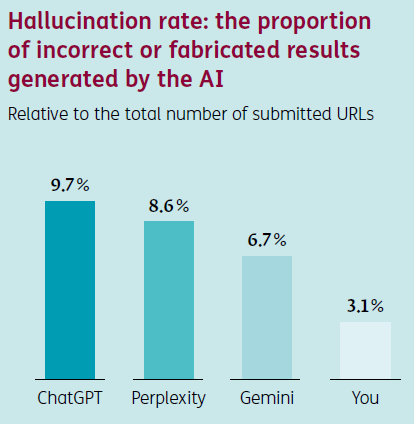

The ERGO Innovation Lab and ECODYNAMICS teamed up to analyze how insurance content appears in AI-powered search. Their study examined more than 33,000 AI search results and 600 websites to determine which types of content large language models such as ChatGPT tend to surface. The results show that LLMs favor content that is easy to read, well structured, and trustworthy, the same traits that classic SEO rewards. Content that is modular, written in a question-and-answer format, and well linked internally is also more likely to appear in AI-generated answers, again mirroring classic SEO.

The study also looked at hallucination rates. ChatGPT had the highest rate, with just under ten percent of its responses containing inaccuracies, while you.com delivered much more reliable results. These findings apply specifically to insurance-related queries.

ChatGPT has started pointing users who are losing touch with reality toward real journalists, suggesting they reach out by email. New York Times reporter Kashmir Hill says the chatbot has repeatedly told people caught up in conspiracy thinking or psychological distress to contact her directly. In these conversations, ChatGPT described Hill as "empathetic," "grounded," and someone who has personally researched AI and "might actually hold space for the truth behind this, not just the headline."

An accountant in Manhattan had been convinced that he was, essentially, Neo from "The Matrix," and needed to break out of a computer-simulated reality.

Kashmir Hill

Critics have long warned that ChatGPT tends to mirror user behavior, sometimes even reinforcing delusions. What's new is that the chatbot is now actively directing unstable users to real people, with no clear safeguards in place.

Meta is reportedly in talks with financial firms to raise up to $29 billion for new data centers in the US, according to the Financial Times. The plan includes $3 billion in equity and $26 billion in debt, allowing Meta to expand its AI infrastructure without putting additional pressure on its own balance sheet. As part of this push, Meta has signed long-term power agreements with a nuclear plant and with energy company Invenergy. The company is also hiring AI talent aggressively, including recruiting specialists from OpenAI with multimillion-dollar offers, and recently acquired a 49% stake in Scale AI for about $14 billion to bolster its own superintelligence team.

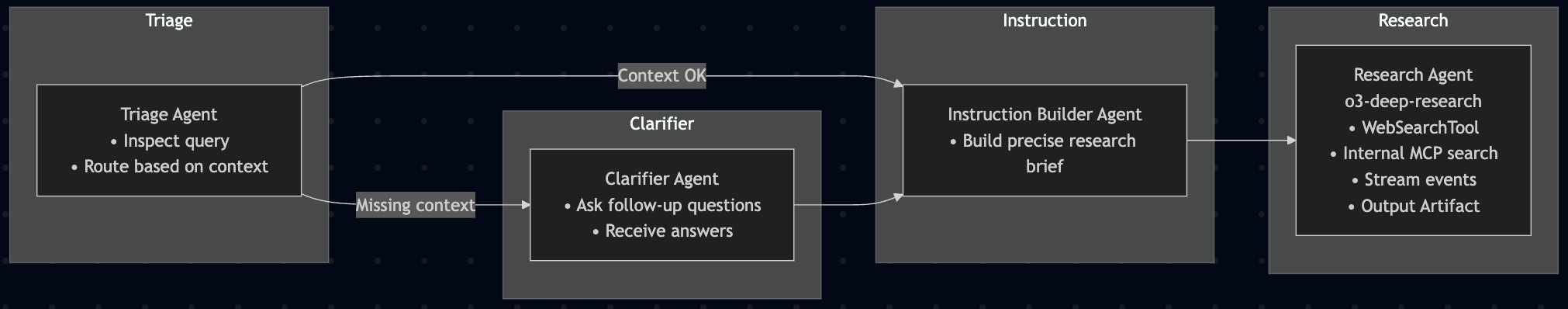

OpenAI is demonstrating how deep research agents can automate complex research tasks. These agents use the recently released o3-deep-research-2025-06-26 model through OpenAI's API, combined with web search and internal document search via the Model Context Protocol (MCP). The typical workflow involves four specialized agents: Triage, Clarification, Instruction, and Research. When a user submits a query, the system first checks it and, if necessary, clarifies it with the user. The instruction agent then turns the query into a structured research request, which the research agent carries out.
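The hand-off between the four agents can be sketched as plain control flow. This is a minimal, hypothetical illustration, not OpenAI's implementation: each agent is a stub function standing in for a model call, the triage rule (queries under four words count as underspecified) is an invented assumption, and names such as `run_pipeline` and `ResearchRequest` are made up for this example.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stand-ins for model calls. In a real setup each agent would
# call an LLM (the research step would use a deep research model with web
# and MCP search); here they are plain functions so the flow runs on its own.


@dataclass
class ResearchRequest:
    query: str
    clarifications: list[str] = field(default_factory=list)
    instructions: str = ""


def triage_agent(query: str) -> bool:
    """Return True if the query needs clarification.

    Assumed rule for illustration: very short queries are underspecified.
    """
    return len(query.split()) < 4


def clarification_agent(query: str, ask_user: Callable[[str], str]) -> list[str]:
    """Ask the user a follow-up question and collect the answer."""
    question = f"Could you say more about the scope of: '{query}'?"
    return [ask_user(question)]


def instruction_agent(query: str, clarifications: list[str]) -> ResearchRequest:
    """Turn the (possibly clarified) query into a structured research brief."""
    lines = [f"Research question: {query}"]
    lines += [f"User clarification: {c}" for c in clarifications]
    lines.append("Cite sources for every claim.")
    return ResearchRequest(query, clarifications, "\n".join(lines))


def research_agent(request: ResearchRequest) -> str:
    """Placeholder for the actual deep research model call."""
    return f"[report based on]\n{request.instructions}"


def run_pipeline(query: str, ask_user: Callable[[str], str]) -> str:
    """Triage -> (optional) Clarification -> Instruction -> Research."""
    clarifications: list[str] = []
    if triage_agent(query):
        clarifications = clarification_agent(query, ask_user)
    request = instruction_agent(query, clarifications)
    return research_agent(request)


if __name__ == "__main__":
    # A two-word query trips the triage rule, so the user is asked once.
    print(run_pipeline("EV batteries", ask_user=lambda q: "Focus on recycling in the EU"))
```

Keeping each agent as a separate function mirrors the idea of specialized roles: the clarification step only runs when triage asks for it, and the research step always receives a structured brief rather than the raw query.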

For simpler tasks, OpenAI offers a lightweight single-agent setup powered by the o4-mini model. The demonstration is aimed at developers who want to build scalable research workflows with OpenAI's tools.

Runway is launching a new platform called "Game Worlds" next week that lets users create text-based adventure games from written input and AI-generated images. The company plans to expand the platform's features gradually. CEO Cristóbal Valenzuela says Runway is in talks with game companies about using their data to train AI models and about ways to apply its technology to game development. Valenzuela also notes that game developers are currently adopting AI faster than film studios. Game Worlds is available at play.runwayml.com.

Shopify CEO Tobi Lütke and former Tesla and OpenAI researcher Andrej Karpathy say "context engineering" is more useful than prompt engineering when working with large language models. Lütke calls it a "core skill," while Karpathy describes it as the "delicate art and science of filling the context window with just the right information for the next step."

Too little or of the wrong form and the LLM doesn't have the right context for optimal performance. Too much or too irrelevant and the LLM costs might go up and performance might come down. Doing this well is highly non-trivial.

Andrej Karpathy

This matters even for models with large context windows, since performance tends to degrade with overly long or noisy inputs.
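As a rough illustration of "filling the context window with just the right information," the sketch below picks the most relevant snippets for a query and stops at a token budget. It is not Karpathy's or Lütke's method; the word-overlap relevance score, the word-count token estimate, the `min_score` noise threshold, and the function names are all assumptions made for this example. Production systems typically use embeddings for relevance and the model's own tokenizer for counting.

```python
import re


def tokenize(text: str) -> list[str]:
    """Rough tokenizer: lowercase words and numbers (assumed stand-in for model tokens)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def relevance(snippet: str, query: str) -> float:
    """Fraction of distinct query words that occur in the snippet (hypothetical scoring)."""
    q = set(tokenize(query))
    if not q:
        return 0.0
    return len(q & set(tokenize(snippet))) / len(q)


def build_context(query: str, snippets: list[str], budget: int,
                  min_score: float = 0.2) -> list[str]:
    """Select relevant snippets that fit into a token budget.

    Snippets scoring below min_score are treated as noise and dropped
    ("too irrelevant"); the rest are added greedily in descending relevance
    until the budget is used up ("too much").
    """
    # sorted() is stable, so ties keep their original order.
    scored = sorted(
        ((relevance(s, query), s) for s in snippets),
        key=lambda pair: pair[0],
        reverse=True,
    )
    chosen: list[str] = []
    used = 0
    for score, snippet in scored:
        if score < min_score:
            break  # everything after this point is even less relevant
        cost = len(tokenize(snippet))
        if used + cost > budget:
            continue  # does not fit; a smaller snippet further down might
        chosen.append(snippet)
        used += cost
    return chosen


if __name__ == "__main__":
    docs = [
        "Household insurance covers water damage from burst pipes.",
        "The company cafeteria serves lunch from noon.",
        "Water damage claims must be reported within 7 days.",
    ]
    # A tight budget keeps only the best match; the cafeteria snippet is never included.
    print(build_context("water damage claims", docs, budget=10))
    print(build_context("water damage claims", docs, budget=20))
```

The two thresholds map onto the two failure modes in the quote: `min_score` filters out irrelevant material, while `budget` caps the total amount so that cost and noise stay bounded even when plenty of relevant text is available.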