Here's some good news for prompt engineers: GPT-4 prompts work with Gemini Ultra

If you have spent the past few months building sophisticated prompts and AI workflows for GPT-4, there is good news: prompts written for GPT-4 also work with Google's Gemini Ultra.

This is according to Ethan Mollick, a professor at the Wharton School who has had access to Gemini Ultra for over a month. Mollick was involved in a multi-month study of productivity gains from GPT-4 in the consulting industry.

The compatibility between the two models is "remarkable," he says. Complex prompts he tested that worked on GPT-4 were also handled well by Gemini Ultra.

"Advanced LLMs may show some basic similarities in prompts and responses that make it easy for people to switch to the most advanced AI from an older model at any time," Mollick writes.

Anyone who has built a prompt library with underlying workflows will welcome this. Our own tests with AI content show that moving in the other direction, from a stronger model to a weaker one, does not work: a complex GPT-4 prompt run in Llama 2, for example, gives significantly worse results. It is good to know that proven AI workflows can be upgraded to better models without rewriting every prompt.

Gemini Ultra is "clearly a GPT-4 class model"

One caveat: Ultra can sometimes be too helpful, so educational prompts that require students to interact with the AI to understand a concept need adjustment. Mollick writes that a paper on this will follow.

Otherwise, Mollick confirms that Gemini Ultra is definitely on par with GPT-4, with different strengths and weaknesses.

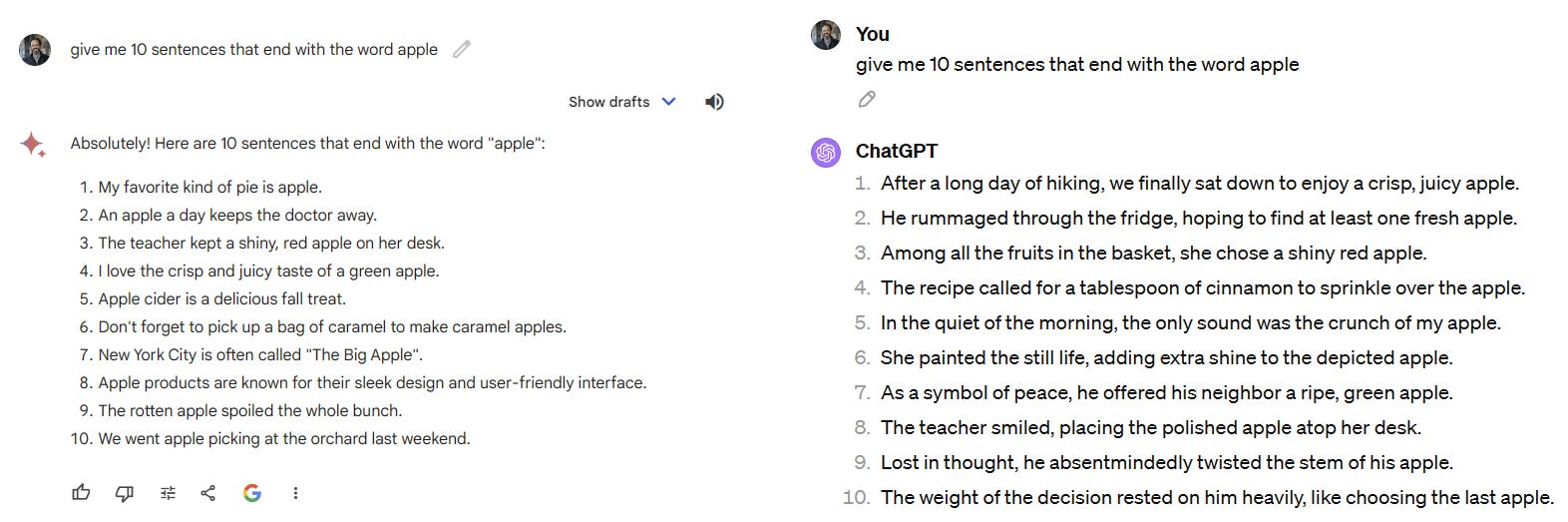

Gemini is more open to a "darker" writing style, gives better explanations, and integrates images and Search better, Mollick writes. GPT-4 is better at coding, passes challenging language tasks such as the "Apple test" (see screenshot), and writes better sestinas.

Gemini shows that companies other than OpenAI can build GPT-4-class models, Mollick says. However, both models are also inconsistent and weird and hallucinate "more than you would like."
