OpenAI's Sora is slow enough to grab a snack while it generates your video

OpenAI's new text-to-video model, Sora, will likely remain in development for some time before a public release.

According to Bloomberg, OpenAI has not yet set an exact release schedule, for two reasons. First, OpenAI does not want to take any safety risks, given the number of elections this year. Second, the model is not yet technically ready for release.

When OpenAI unveiled Sora, the company pointed out shortcomings in the model's physical understanding and consistency. Bloomberg's tests, based on two videos that OpenAI generated from Bloomberg's prompts, confirmed these issues. For example, in the video below, the parrot turns into a monkey at the end.

Prompt: "An overhead view of a parrot flying through a verdant Costa Rica jungle, then landing on a tree branch to eat a piece of fruit with a group of monkeys. Golden hour, 35mm film." | Video: Rachel Metz via X

"Sora remains a research project, very few people have access to it, and there were clear limitations for the tool during our private demo with OpenAI," Bloomberg writes.

Bloomberg gave OpenAI four prompts, but OpenAI generated videos for only two of them, citing time constraints on the part of its researchers. This suggests that the generation process is still lengthy, immature, or both.

Generate and snack

OpenAI has not given any details about Sora's speed, but generating a video will take longer than generating an image with a standard image generator. For now, you can "definitely" grab a snack while waiting for the finished video, says OpenAI researcher Bill Peebles. Of course, this could change before the release.

Peebles also acknowledges Sora's generation errors, but still calls the system a "significant leap" in AI video generation, especially in terms of scene complexity.

Sora is currently in the red teaming phase, and selected artists, filmmakers, and designers have been given access to the system, as OpenAI announced when it unveiled the model.

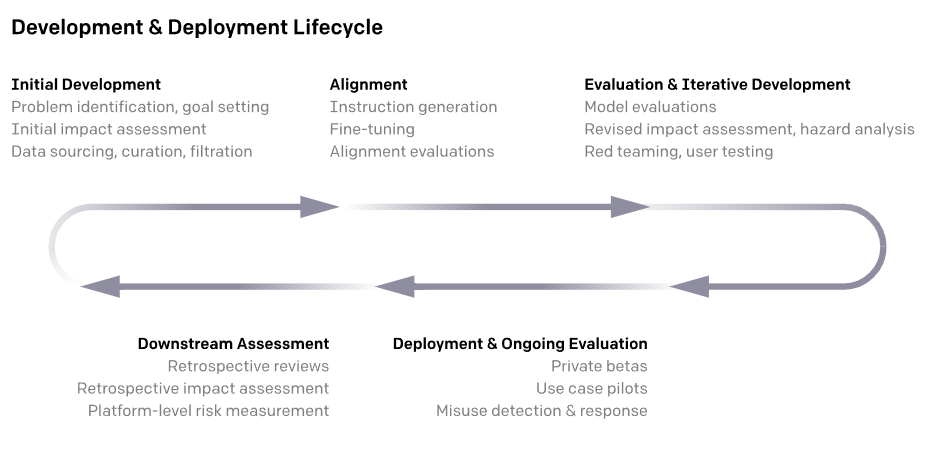

Looking at OpenAI's model rollout plan, this seems to be the "Evaluation & Iterative Development" phase. The next step would then be a staged rollout phase with private betas, use case pilots, and further safety testing.

The project also caught the attention of US filmmaker Tyler Perry, who said he put an $800 million expansion of his studio on hold after seeing Sora demos. Perry believes that in the future he will be able to generate movie scenes solely from text.
