LLMs give ridiculous answers to a simple river crossing puzzle

What more proof is needed that large language models can't handle basic logic? Simple planning puzzles are all the rage right now.

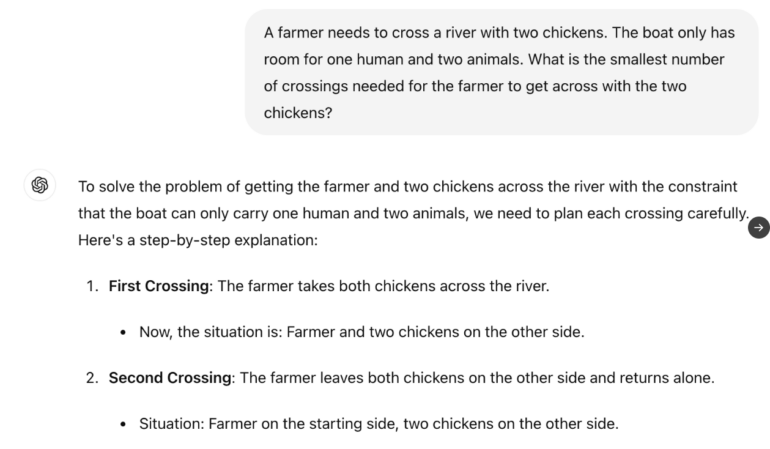

Today's language models fail to consistently solve an extremely dumbed-down version of the classic wolf-goat-cabbage problem. In one example, the model must figure out how a farmer can transport two chickens across a river in a boat that can hold one person and two animals.

The LLM must logically associate "farmer" with the person and "chickens" with the animals, and then work out the minimum number of river crossings. Sometimes the models provide absurd solutions with five crossings instead of one.

"A farmer wants to cross a river with two chickens. His boat only has room for one person and two animals. What is the minimum number of crossings the farmer needs to get to the other side with his chickens?"
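To see why the expected answer is one crossing, the puzzle can be modeled as a shortest-path search. The following is a minimal sketch, not a method used in any of the tests: it assumes the farmer must be in the boat on every trip and that there are no constraints on which animals may be left alone. The function name `min_crossings` and its parameters `animals` and `boat_capacity` are illustrative choices.

```python
from collections import deque


def min_crossings(animals: int, boat_capacity: int) -> int:
    """Breadth-first search over states (animals on start bank, farmer on start bank?)."""
    start = (animals, True)
    goal = (0, False)
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (left, farmer_on_start), crossings = queue.popleft()
        if (left, farmer_on_start) == goal:
            return crossings
        # Animals standing on the same bank as the farmer.
        available = left if farmer_on_start else animals - left
        # The farmer may take anywhere from zero animals up to the boat's capacity.
        for carried in range(min(boat_capacity, available) + 1):
            new_left = left - carried if farmer_on_start else left + carried
            state = (new_left, not farmer_on_start)
            if state not in seen:
                seen.add(state)
                queue.append((state, crossings + 1))
    return -1  # no solution, e.g. the boat cannot carry any animal


print(min_crossings(animals=2, boat_capacity=2))  # 1
```

Under these assumptions, two chickens and a boat with room for two animals need a single crossing. More trips only become necessary when the animals outnumber the boat's capacity.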

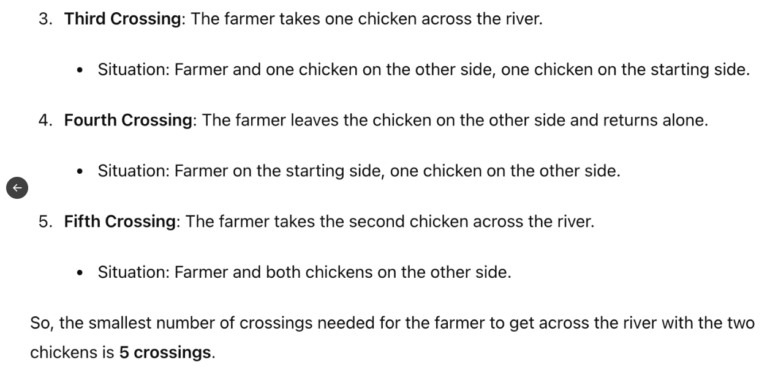

Tested LLMs often gave nonsensical answers, sometimes suggesting far more river crossings than necessary. Users have shared different versions of the puzzle on X, with some absurd results.

In one case, GPT-4o proposed a complex solution with nine crossings even though it had been told that the farmer didn't need to cross the river at all. It also ignored important constraints, such as not leaving chickens alone with wolves, which would have been easy to satisfy because no crossing was needed.

While LLMs can sometimes solve the puzzle with different clues in the prompt, critics argue that this highlights their lack of consistent reasoning and common sense. Current research supports the view that LLMs struggle to solve even the simplest logical tasks reliably.

These findings add to ongoing debates about the limitations of current LLM-based AI systems when it comes to logical reasoning and real-world problem-solving. "The problem is that LLMs have no common sense, no understanding of the world, and no ability to plan (and reason)," says Yann LeCun, Meta's chief AI scientist.

The question is whether research can find methods to improve the ability of LLMs to reason, or whether entirely new architectures are needed to fundamentally advance AI.
