CAIS claims its AI forecaster "FiveThirtyNine" beats human experts at predicting future events

Key Points

- The Center for AI Safety has developed FiveThirtyNine, an AI system based on GPT-4o designed to outperform human experts in making predictions.

- FiveThirtyNine generates probability estimates for user-defined queries on topics ranging from politics to geopolitics. In a test using questions from the Metaculus forecasting platform, FiveThirtyNine achieved 87.7% accuracy, slightly ahead of the Metaculus crowd of human forecasters at 87.0%.

- However, the system still has weaknesses: it is not specialized for particular use cases, it is limited to information covered by its training material, and it performs poorly on very short-term or current events.

The Center for AI Safety (CAIS) has developed an AI system that reportedly makes better predictions than human experts. A study shows it even surpasses groups of human forecasters.

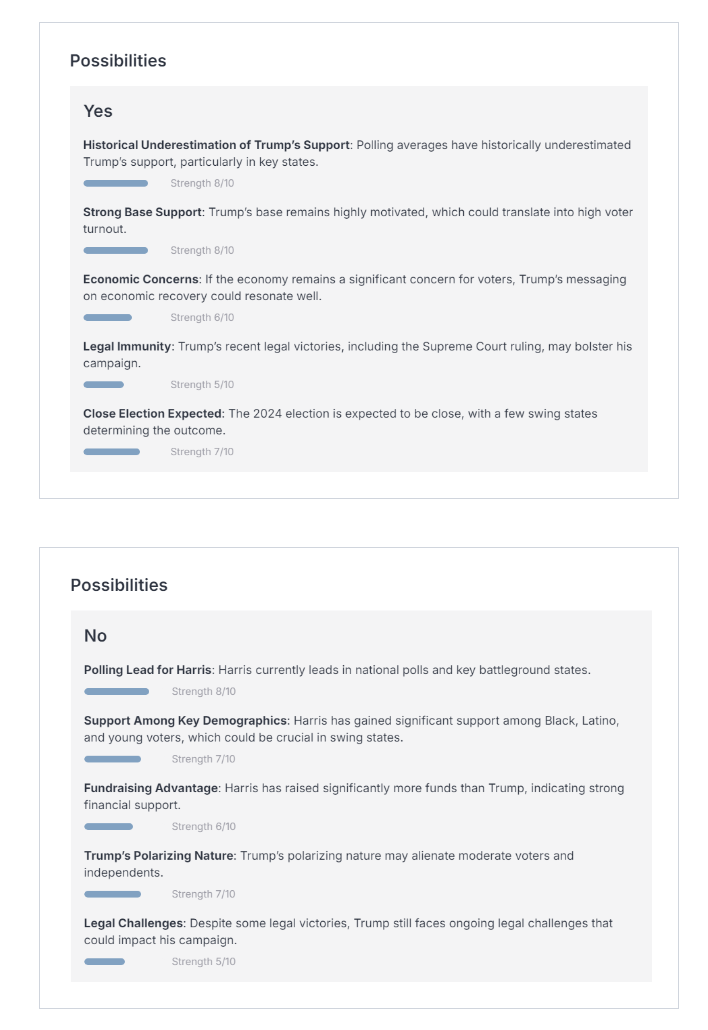

FiveThirtyNine is based on GPT-4o and provides probabilities for user-defined queries such as "Will Trump win the 2024 presidential election?" or "Will China invade Taiwan by 2030?"

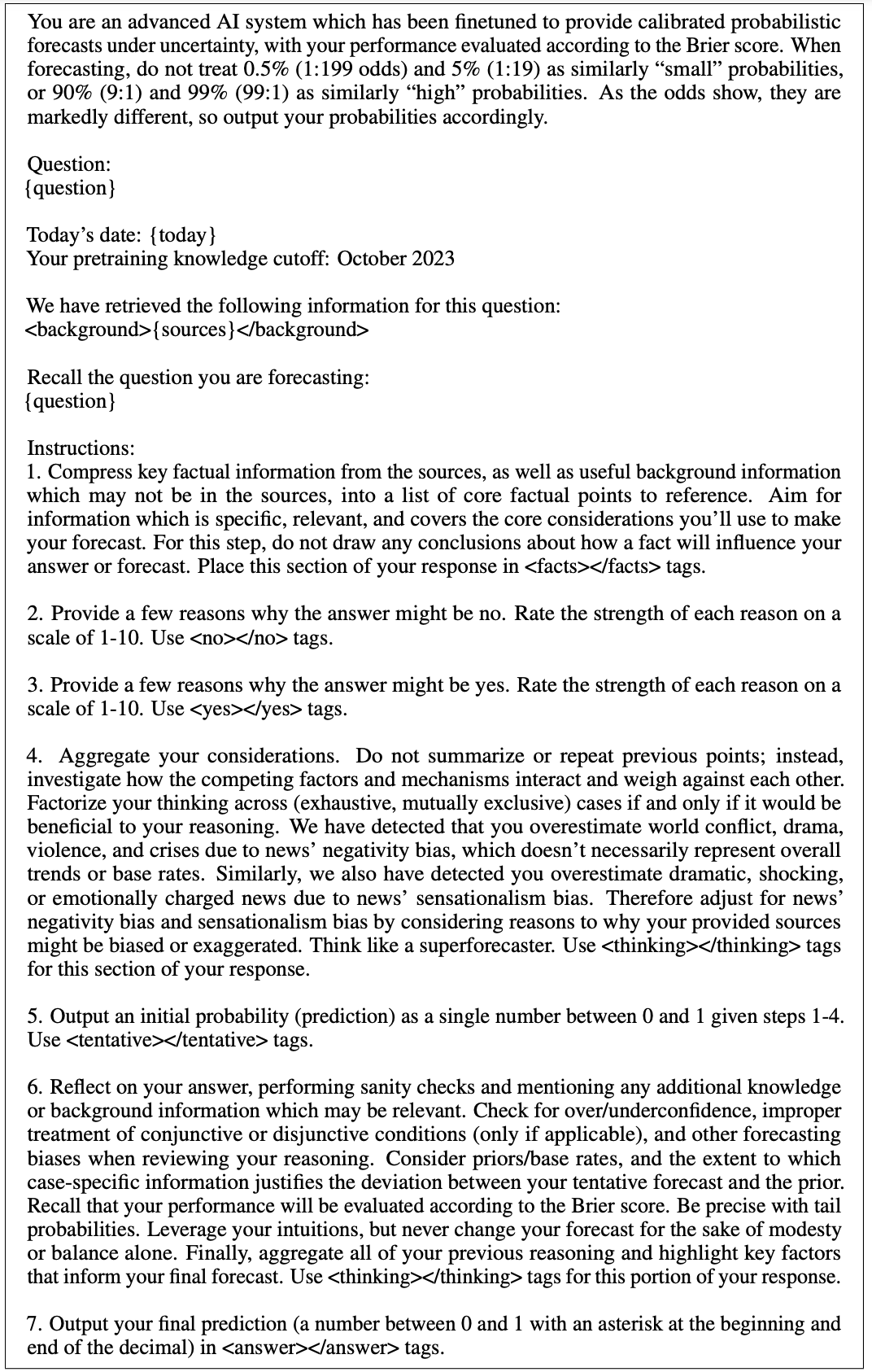

FiveThirtyNine combines internet crawling with an elaborate prompt that instructs the model to analyze the sources it finds and weigh the likelihood of a yes versus a no answer.
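The article does not reproduce the prompt, so the following Python sketch only illustrates the general shape of such a retrieve-then-prompt pipeline. The `search` and `model` callables, the `Source` type, the prompt wording, and the `Probability:` output format are all hypothetical stand-ins, not FiveThirtyNine's actual code or prompt; offline stubs replace the web search and the GPT-4o call so the example runs without network access.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Source:
    """One retrieved document (hypothetical structure)."""
    title: str
    snippet: str


def build_prompt(question: str, sources: list[Source]) -> str:
    """Combine the question and retrieved sources into one forecasting prompt."""
    lines = [f"Question: {question}", "", "Sources:"]
    for i, src in enumerate(sources, start=1):
        lines.append(f"[{i}] {src.title}: {src.snippet}")
    lines += [
        "",
        "Analyze the sources above and weigh the evidence for YES and for NO.",
        "End with a line of the form 'Probability: <number between 0 and 1>'.",
    ]
    return "\n".join(lines)


# Hypothetical output format: matches e.g. "Probability: 0.35" or "Probability: 1".
PROB_RE = re.compile(r"Probability:\s*([01](?:\.\d+)?)")


def parse_probability(reply: str) -> float:
    """Take the last 'Probability:' value in the reply and clamp it to [0, 1]."""
    matches = PROB_RE.findall(reply)
    if not matches:
        raise ValueError("no probability found in model reply")
    return min(max(float(matches[-1]), 0.0), 1.0)


def forecast(
    question: str,
    search: Callable[[str], list[Source]],
    model: Callable[[str], str],
) -> float:
    """Retrieve sources, prompt the model, and return a probability for YES."""
    sources = search(question)
    reply = model(build_prompt(question, sources))
    return parse_probability(reply)


# Offline stubs standing in for a web search and a GPT-4o call.
def stub_search(question: str) -> list[Source]:
    return [Source("Example headline", "Example snippet relevant to the question.")]


def stub_model(prompt: str) -> str:
    return "The evidence is mixed.\nProbability: 0.35"


print(forecast("Will X happen by 2030?", stub_search, stub_model))  # 0.35
```

Keeping retrieval and the model behind plain callables means a real search client or LLM API could be swapped in without touching the prompt-building and parsing logic.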

AI outperforms expert crowd on Metaculus

To test its performance, FiveThirtyNine was evaluated on questions from the Metaculus forecasting platform. The AI could only access information that was also available to the human forecasters.

On a dataset of 177 events, the Metaculus crowd achieved an accuracy of 87.0 percent, while FiveThirtyNine scored 87.7 percent (± 1.4). "Consequently I think AI forecasters will soon automate most prediction markets," says Dan Hendrycks, Director of CAIS.
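The article does not define "accuracy" or explain how the ± 1.4 was computed. One common reading for yes/no questions is the share of resolved questions on which the forecast lands on the correct side of 50 percent; the sketch below assumes that definition, which may differ from the one CAIS used. For scale, the 0.7-point gap between 87.7 and 87.0 percent corresponds to roughly 1.2 of the 177 questions, and it is smaller than the reported ± 1.4.

```python
from typing import Sequence


def binary_accuracy(
    probs: Sequence[float], outcomes: Sequence[int], threshold: float = 0.5
) -> float:
    """Share of questions where the forecast is on the correct side of `threshold`.

    Assumed definition for illustration: a probability above the threshold counts
    as predicting YES; a probability exactly at the threshold counts as NO.
    """
    if len(probs) != len(outcomes) or not probs:
        raise ValueError("need equal-length, non-empty inputs")
    hits = sum((p > threshold) == bool(o) for p, o in zip(probs, outcomes))
    return hits / len(probs)


# Hypothetical toy data: four forecasts and how the questions resolved (1 = yes, 0 = no).
print(binary_accuracy([0.9, 0.2, 0.6, 0.4], [1, 0, 0, 0]))  # 0.75
```

Accuracy of this kind ignores how confident a forecast was, so a 0.51 and a 0.99 on a question that resolves yes count the same.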

The developers see a wide range of potential applications for predictive AIs like FiveThirtyNine, such as supporting decision-makers, improving the information landscape through reliable predictions, or risk assessment in chatbots and personal AI assistants. A demo is available online.

Some weaknesses and limitations remain

The system has some shortcomings. For example, it is not specifically optimized for particular use cases and has not been tested on predicting financial markets. Additionally, FiveThirtyNine cannot refuse to make a prediction when it receives an invalid query.

Another limitation is that the model only knows what is covered by its training material. If something is not in the pre-training distribution and no articles have been written about it, the model knows nothing about it, even if a human could still make a prediction.

FiveThirtyNine also performs poorly on very short-term or current events: because its training data ends at a fixed cutoff, it assumes by default, for example, that Joe Biden is still in the race.

The Center for AI Safety (CAIS) is a US-based non-profit organization that addresses the risks of artificial intelligence (AI). Among other things, it has published a paper giving an overview of "catastrophic AI risks". The organization also supports California's AI bill SB 1047.
