Meta's Diplomacy AI can negotiate, persuade and cooperate

Key Points

- CICERO is a dialog-optimized AI agent that can negotiate with humans and convince them of its strategies.

- The board game "Diplomacy" serves as the benchmark: CICERO played online against many people without being recognized as a bot, and it ranked among the best players.

- The AI uses two systems: first, it plans its strategy; second, it turns that plan into dialogue via a language model that was fine-tuned on Diplomacy messages.

CICERO is Meta's latest AI system that can negotiate with humans in natural language, convince them of strategies and cooperate with them. The strategy board game "Diplomacy" serves as a benchmark.

According to Meta, CICERO is the first language AI that can play the board game "Diplomacy" at a human level. In Diplomacy, players negotiate the European balance of power before World War I.

Representing Austria-Hungary, England, France, Germany, Italy, Russia, and Turkey, players form strategic alliances and break them when it is to their advantage. All moves are planned and then executed simultaneously. Skillful negotiation is therefore the core of the game.

Human-level diplomacy

CICERO is optimized for Diplomacy, and the game also serves as a benchmark for the model's language skills: in 40 online games over 72 hours on "webDiplomacy.net," CICERO scored more than twice the average score of human players and was in the top ten percent, according to Meta.

The CICERO agent is designed to negotiate and establish alliances with humans, according to Meta. The AI should be able to infer players' beliefs and intentions from conversations - a task that Meta's research team says has been seen as "a near-impossible grand challenge" in AI development for decades.

According to Meta, CICERO is so successful at playing Diplomacy that human players prefer to ally with the AI. In online games, CICERO encountered 82 different human players who did not know that CICERO is an AI system. Only one player voiced a suspicion in chat after a game that it might be a bot, but this had no consequences.

In the paper, the researchers describe a case in which CICERO was able to dissuade a human player from making a planned move and convince him to make a new move that was mutually beneficial.

First plan, then speak

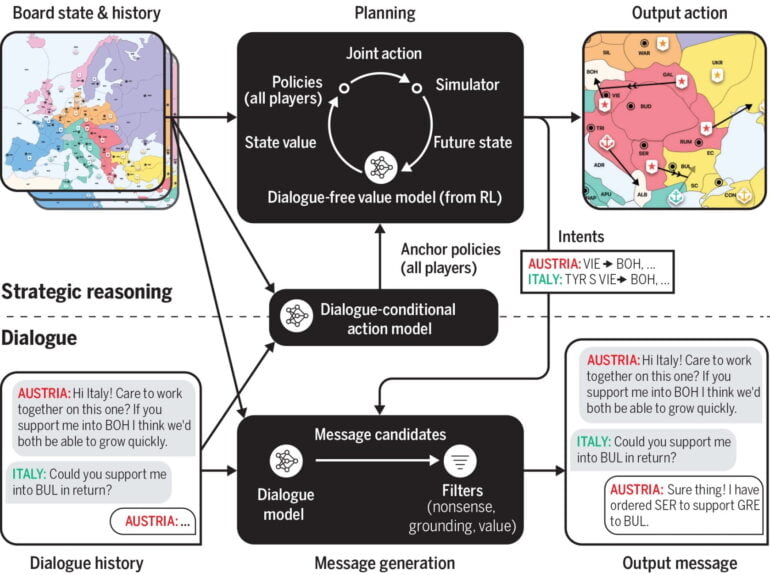

At its core, CICERO works with two systems: One plans the moves for itself and its partners, the second translates these moves into natural language and explains them to the players to convince them of its planning.

CICERO's language model is based on a pre-trained transformer language model (BART) with 2.7 billion parameters that was fine-tuned on more than 40,000 Diplomacy games. The anonymized game data included well over twelve million messages exchanged between human players, which CICERO processed during training.

Supervised training on human game data, in which the agent clones the behavior of human players, is the classic approach for game AIs. According to Meta, however, it would result in a gullible agent in Diplomacy that could be easily manipulated, for example by a phrase such as, "I'm glad we agreed that you will move your unit out of Paris!" Moreover, a model trained purely by supervised learning could learn spurious correlations between dialogues and actions.

The iterative planning algorithm "piKL" (KL-regularized planning) addresses this: the model optimizes its initial strategy based on the strategy predictions for the other players, while trying to stay close to its initial prediction. "We found that piKL better models human play and leads to better policies for the agent compared to supervised learning alone," Meta AI writes.
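The trade-off between a stronger strategy and staying close to the initial prediction can be illustrated with a toy example. The following is a minimal sketch, not Meta's implementation: it assumes a single decision with a handful of actions, an "anchor" distribution standing in for the human-like initial prediction, and made-up action values. It uses the standard closed-form solution for maximizing expected value minus a KL penalty toward the anchor; the function name, the parameter `lam`, and all numbers are hypothetical.

```python
import math


def kl_regularized_policy(anchor, values, lam):
    """Return the policy pi(a) proportional to anchor(a) * exp(values[a] / lam).

    This maximizes E_pi[value] - lam * KL(pi || anchor) for one decision.
    A large lam keeps the policy near the anchor; a small lam chases value.
    """
    if lam <= 0:
        raise ValueError("lam must be positive")
    # Work in log space and subtract the maximum for numerical stability.
    logits = {a: math.log(p) + values[a] / lam for a, p in anchor.items() if p > 0}
    top = max(logits.values())
    weights = {a: math.exp(x - top) for a, x in logits.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


# Hypothetical anchor (imitation of human play) and hypothetical action values.
anchor = {"hold": 0.6, "support_ally": 0.3, "attack": 0.1}
values = {"hold": 0.0, "support_ally": 1.0, "attack": 2.0}

close_to_human = kl_regularized_policy(anchor, values, lam=100.0)
value_seeking = kl_regularized_policy(anchor, values, lam=0.1)
```

With a large `lam` the result stays almost identical to the anchor, while a small `lam` shifts nearly all probability to the highest-valued action. The real system plans over all players jointly and iteratively, which this single-decision sketch does not attempt to reproduce.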

Cicero uses a strategic reasoning module to intelligently select intents and actions. This module runs a planning algorithm that predicts the policies of all other players based on the game state and dialogue so far, accounting for both the strength of different actions and their likelihood in human games, and chooses an optimal action for Cicero based on those predictions.

Planning relies on a value and policy function trained via self-play RL which penalized the agent for deviating too far from human behavior in order to maintain a human-compatible policy. During each negotiation period, intents are re-computed every time Cicero sends or receives a message. At the end of each turn, Cicero plays its most recently computed intent.

From the paper
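The re-planning loop described in the quoted passage can be sketched roughly as follows. This is an illustrative sketch under stated assumptions, not code from the Cicero repository: the class `NegotiationTurn`, the `plan` callable, and the toy planner are hypothetical, and computing an initial intent before any message is exchanged is an assumption made here for simplicity.

```python
from typing import Callable, List


class NegotiationTurn:
    """Tracks the dialogue of one negotiation period and the current intent."""

    def __init__(self, plan: Callable[[str, List[str]], str], game_state: str):
        self._plan = plan
        self._state = game_state
        self.dialogue: List[str] = []
        # Assumption: plan once up front so an intent always exists.
        self.intent: str = plan(game_state, [])

    def on_message(self, message: str) -> None:
        """Call for every sent or received message; re-computes the intent."""
        self.dialogue.append(message)
        self.intent = self._plan(self._state, self.dialogue)

    def end_of_turn(self) -> str:
        """Return the most recently computed intent, which is then played."""
        return self.intent


def toy_planner(game_state: str, dialogue: List[str]) -> str:
    # Hypothetical stand-in for the strategic reasoning module.
    return f"intent for {game_state} after {len(dialogue)} messages"


turn = NegotiationTurn(toy_planner, "spring-1901")
turn.on_message("FRANCE -> ENGLAND: Shall we keep the Channel empty?")
turn.on_message("ENGLAND -> FRANCE: Agreed.")
final_intent = turn.end_of_turn()
```

The point of the structure is that planning is tied to dialogue events rather than to a fixed schedule, so the move played at the end of the turn reflects everything said up to that moment.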

One possible use case for CICERO-style systems, according to Meta, is advanced digital assistants that hold longer, streamlined discussions with people and teach them new knowledge or skills during those conversations. CICERO itself can only play Diplomacy.

The system also makes mistakes, such as occasionally sending messages with illogical justifications, contradicting its plans, or being "otherwise strategically inadequate." The researchers tried to hide these errors as best they could with a series of filters. They attribute the fact that CICERO was not exposed as a bot despite its errors to the time pressure in the game and to the fact that humans also occasionally make similar mistakes.

According to the researchers, "many open problems" remain in human-agent collaboration when conversational AI is used deliberately, and Diplomacy is a good testing ground for them.

Meta has released the code for Cicero as open source on GitHub. For more in-depth insights into Meta's AI project, visit the Cicero project page.
