Europe must reassess using Meta's AI models after Zuckerberg's anti-EU rhetoric

Mark Zuckerberg's recent alignment with Trump and apparent rejection of European values raises an uncomfortable question: Should European organizations continue using Meta's AI models?

Meta's position has shifted dramatically in a short time. Not long ago, the company criticized the EU for preventing it from using European user data to train its AI models, arguing this data was crucial for aligning the technology with European values.

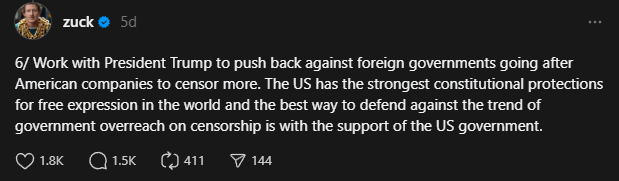

Now, Meta CEO Zuckerberg has announced plans that contradict those very values, stating his intention to work with the Trump administration to fight what he calls foreign government "censorship" of American companies.

The catch? What Meta labels as "censorship" actually refers to Europe's established protections against hate speech and misinformation.

Even more concerning, Meta's new approach includes allowing certain forms of hate speech under the banner of free expression - including statements that classify homosexuality as a mental illness. These policy changes won't just affect social media posts; they'll likely shape how Meta's future AI models interact with users.

Silicon Valley power games

Looking deeper, Zuckerberg's sudden embrace of "free speech" might have more to do with Silicon Valley politics than principles. With Elon Musk and Donald Trump forming closer ties, Zuckerberg appears to be offering Meta's platforms as a way for Trump to spread his message globally, potentially bypassing local regulations.

European organizations need to think carefully about the cultural and political impact of using Meta's AI tools, just as they would with Chinese AI models known for spreading government messaging. AI models aren't just neutral technology - they carry the cultural values and beliefs of their creators. When Meta equates fact-checking with censorship and openly challenges European values, it's time to reconsider these partnerships.

The timing makes Europe's need for its own AI capabilities clearer than ever - both to maintain digital independence and to protect its values. With Meta now allowing certain forms of hate speech that could end up in the training data for its AI systems, the risk of automated discrimination against minorities becomes even more pressing. Europe needs AI systems that reflect its own values and safeguards, not those that might amplify discrimination at scale.
