New Mistral Small 3 does more with less under Apache license

Mistral AI has released Small 3, a new 24-billion-parameter language model that matches the performance of much larger models from Meta and OpenAI. The company is also switching to the more permissive Apache 2.0 license.

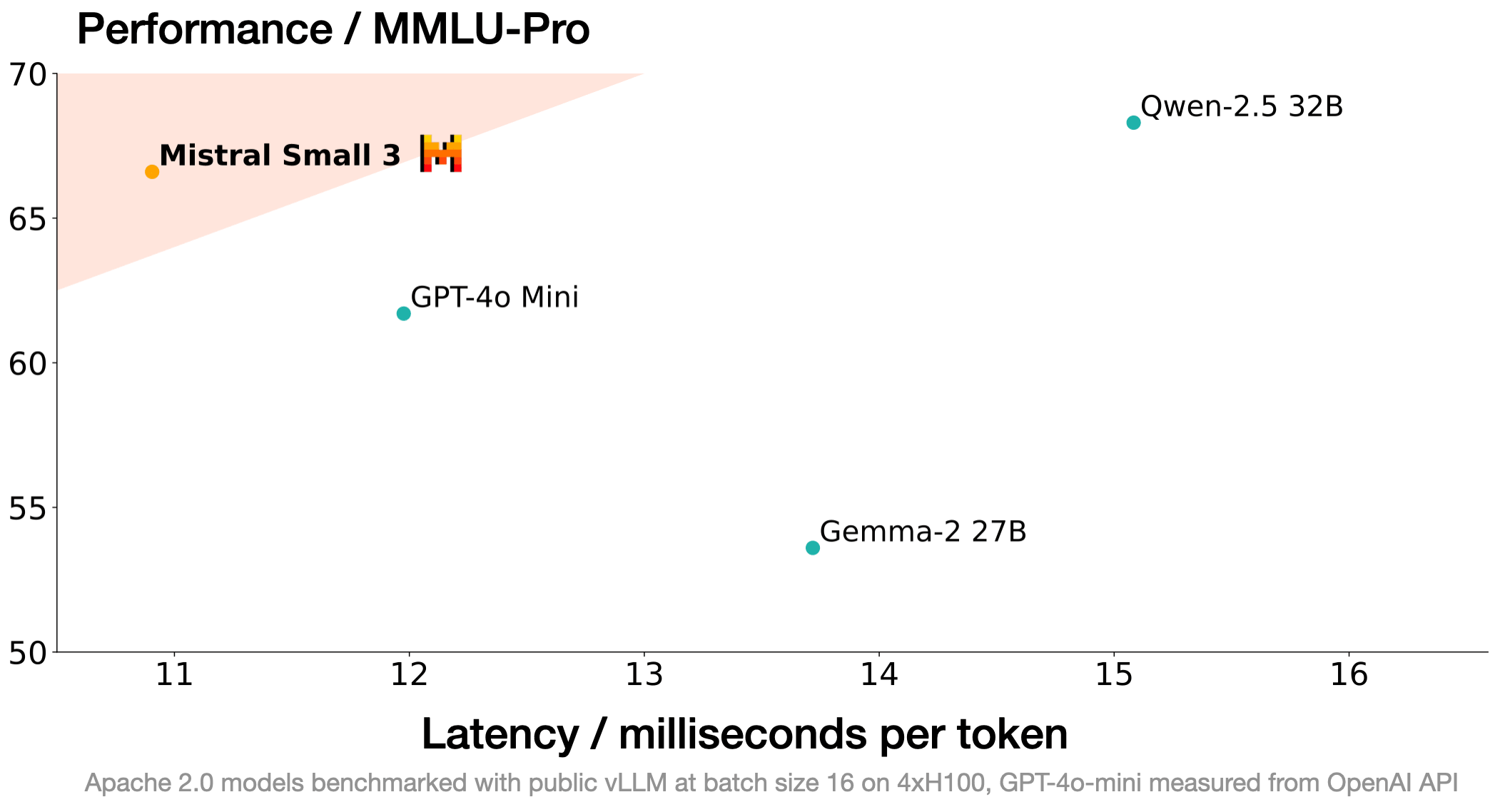

According to Mistral AI, Small 3 performs on par with models three times its size despite having only 24 billion parameters. The model is optimized for low latency and local deployment, and it follows Mistral's previous Small release from September 2024.

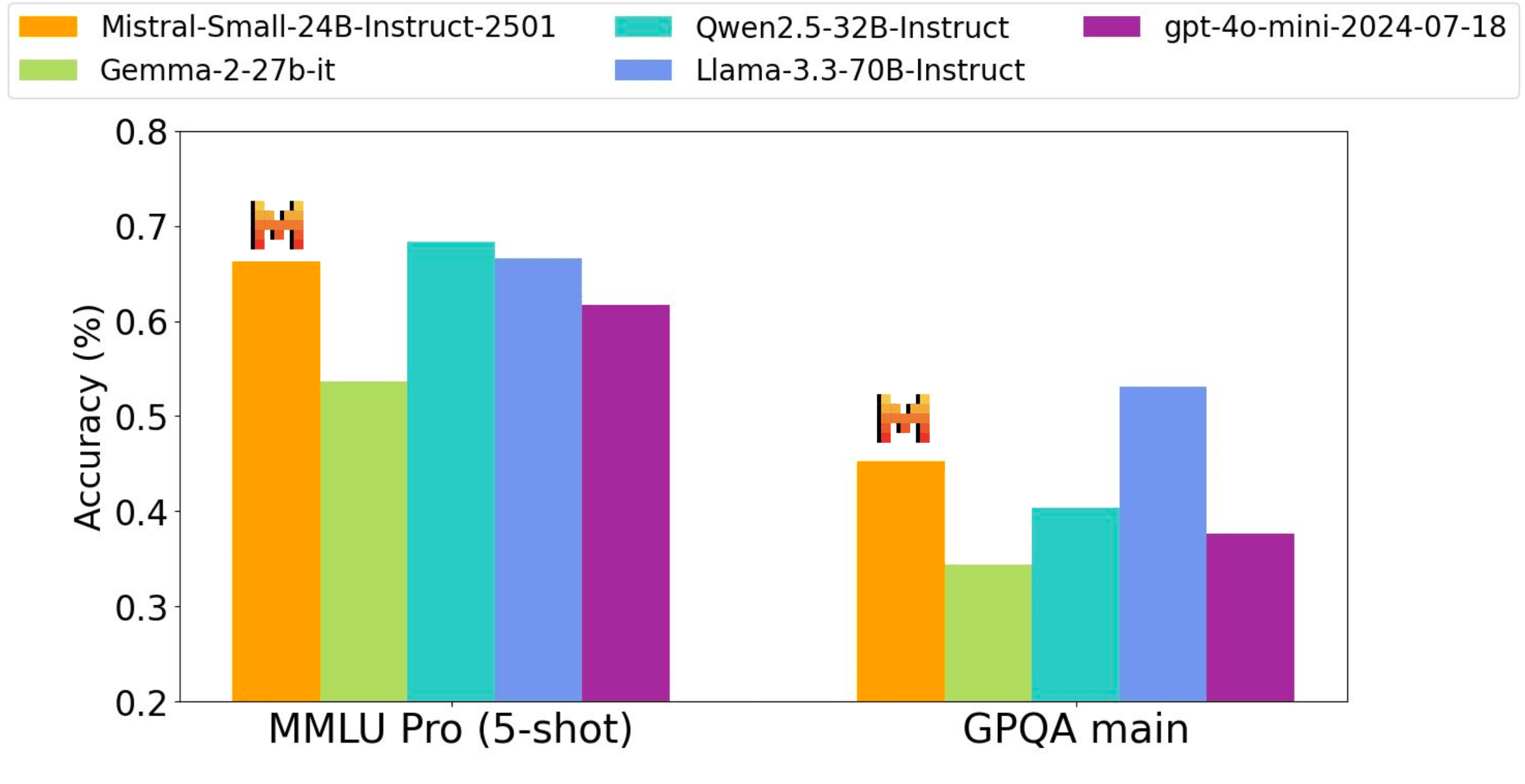

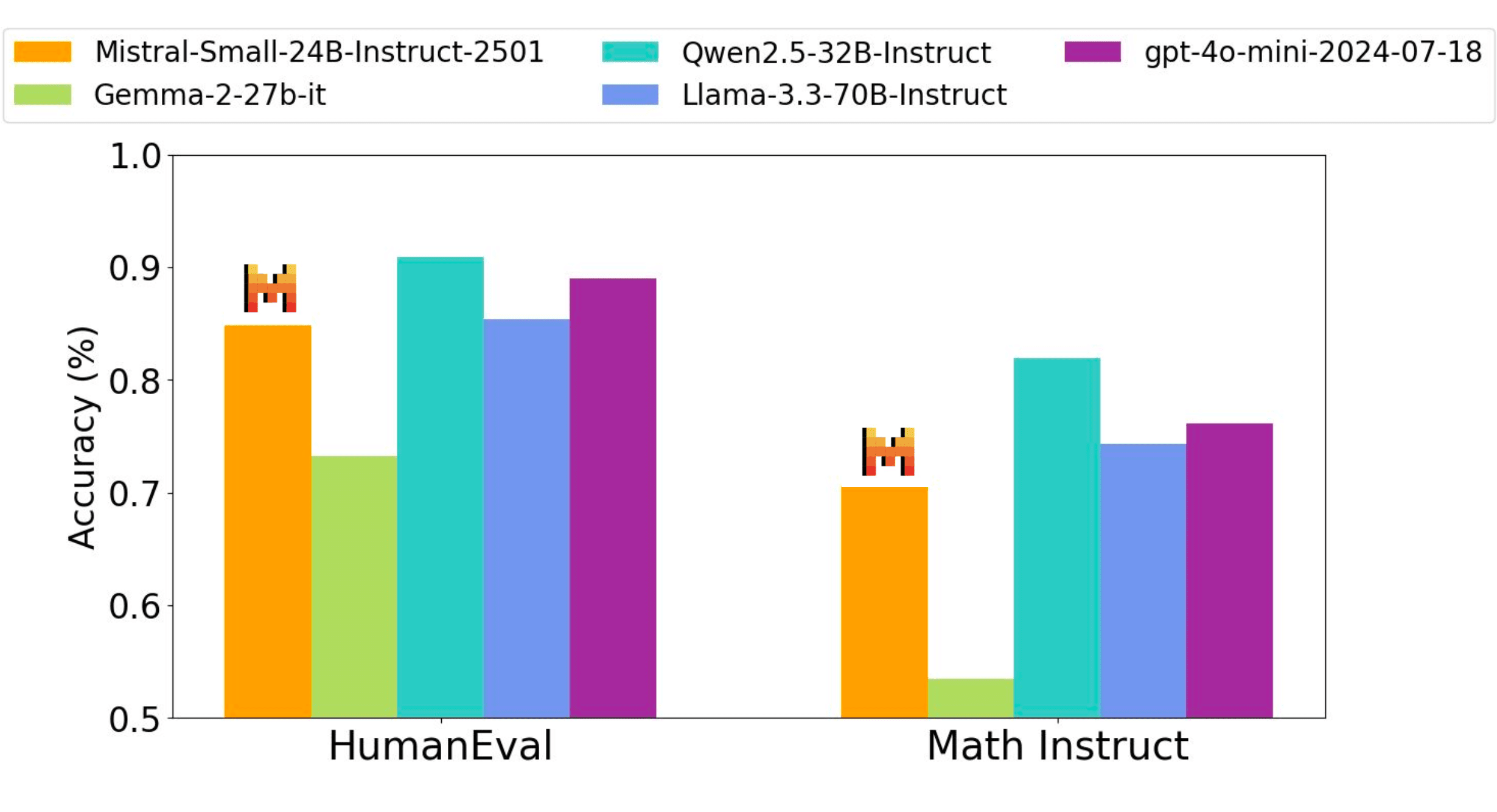

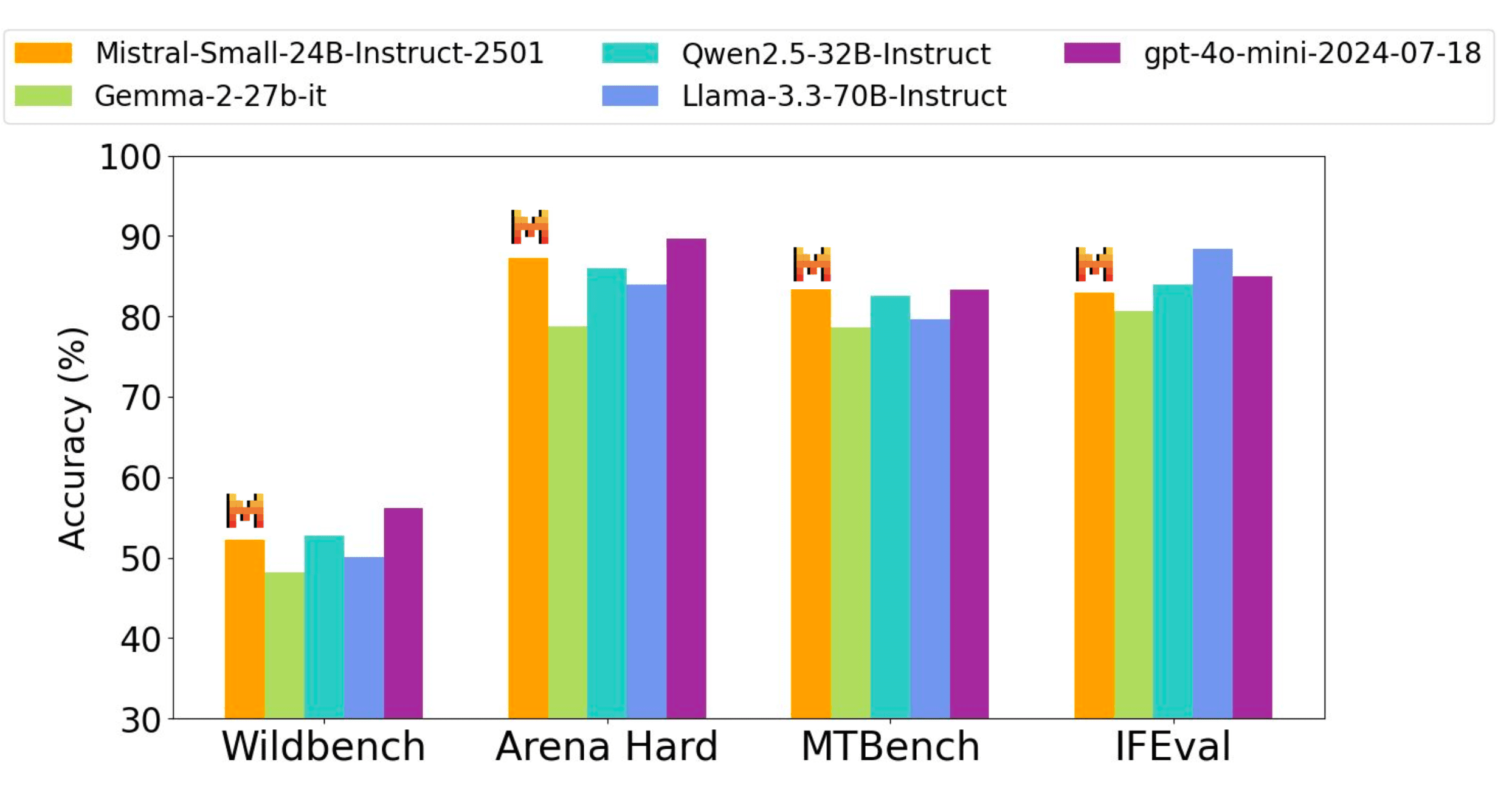

In benchmarks, Small 3 performed on par with larger models such as Meta's Llama-3.3-70B and Alibaba's Qwen2.5-32B, as well as OpenAI's GPT-4o-mini. According to Mistral, the model scored 81 percent accuracy on the MMLU benchmark while generating 150 tokens per second, making it one of the most efficient models in its category.

Optimized for real-world applications at low cost

The new model is built to handle everyday tasks without expensive hardware. It is designed for fast conversational responses, function calling, and fine-tuning for specialized domains, and it can run on a single GPU, which makes it practical for real-world use.

Several industries are already trying out the model, Mistral says. Banks are evaluating it for fraud detection, while healthcare providers and manufacturers are testing it for customer service and customer feedback analysis. Robotics companies are also exploring potential uses.

The model is now available through Mistral's platform and partners including Hugging Face, Ollama, Kaggle, Together AI, and Fireworks AI, with more platforms planned.

Apache license opens the door for commercial use

With this release, Mistral is moving away from its proprietary Mistral Research License (MRL) toward the more permissive Apache 2.0 license. This change lets users freely use, modify, and redistribute the models, including for commercial purposes. The company will continue to offer specialized commercial models for specific needs.

Mistral plans to release additional models with improved reasoning capabilities in the coming weeks. The company positions Small 3 as a complement to larger open-source reasoning models such as those from DeepSeek, aiming to deliver similar capabilities with less computing power.

Mistral has carved out its own space in the European AI landscape and is arguably the only major AI model provider in Europe. While its models don't yet match the capabilities of multimodal systems like Claude 3.5 Sonnet, the company continues to build momentum. Its latest offering, Pixtral, marks the company's first step into vision-language models. Anyone can try these developments through Le Chat, Mistral's public chatbot.


- Get the latest AI news from The Decoder.