ByteDance's AI can animate both real people and cartoon characters from a single image

Key Points

- ByteDance has introduced OmniHuman-1, a new framework that can generate videos of people from a single image and an audio sample.

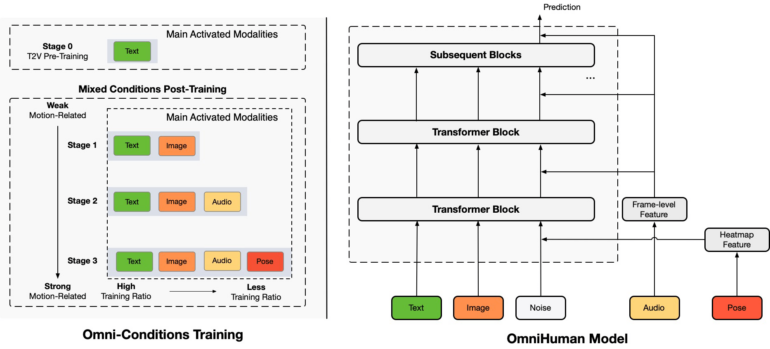

- In addition to text, audio and images, OmniHuman's multi-level training also takes body poses into account. The training dataset consisted of approximately 19,000 hours of video material.

- OmniHuman generates high quality, lifelike animations. It is not yet clear whether ByteDance, the company behind TikTok, will use the technology on its platforms.

Researchers at TikTok parent company ByteDance have presented OmniHuman-1, a new framework for generating videos from image and audio samples.

The system turns still images into videos by adding movement and speech. One demonstration shows Nvidia CEO Jensen Huang appearing to sing, which highlights both the system's capabilities and its potential risks.

https://www.youtube.com/watch?v=XF5vOR7Bpzs

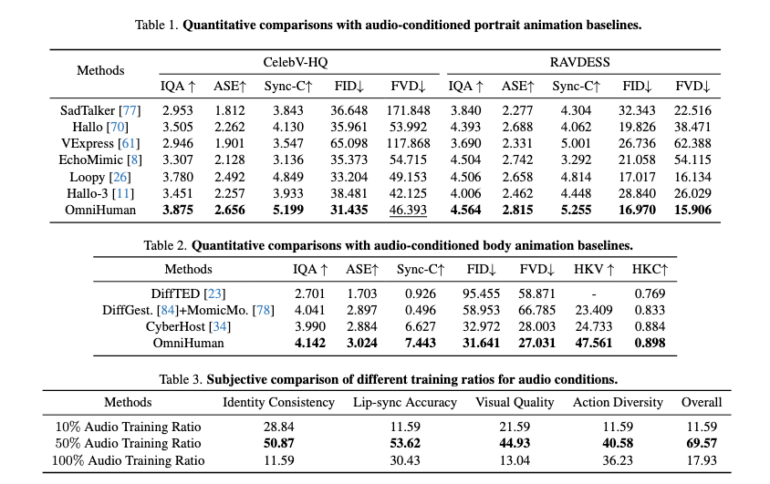

ByteDance researchers developed OmniHuman-1 to solve a key challenge in AI video generation: creating natural human movements at scale. Earlier systems struggled to benefit from more training data, because much of the footage contained irrelevant information and had to be filtered out, and valuable movement patterns were often discarded along with it.

To address this, OmniHuman processes multiple types of input simultaneously: text, image, audio, and body poses. This approach lets the system make effective use of more of its training data, so less of it has to be discarded. The researchers trained it on about 19,000 hours of video material.
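The article does not say how OmniHuman decides which clips feed which kind of training. As a toy illustration only, assume (hypothetically) that each clip can serve any training task whose required input signals it supplies. The `Clip` type, the `usable_hours` helpers, and the hour figures are invented for this example; the point is simply that accepting several input combinations keeps clips that one strict requirement would throw away.

```python
from dataclasses import dataclass

# Hypothetical model of a training clip: its length and which
# conditioning signals (text, image, audio, pose) it can reliably supply.
@dataclass(frozen=True)
class Clip:
    hours: float
    signals: frozenset[str]

def usable_hours(clips: list[Clip], required: set[str]) -> float:
    """Hours of footage that supply every signal one training task needs."""
    return sum(c.hours for c in clips if required <= c.signals)

def usable_hours_mixed(clips: list[Clip], tasks: list[set[str]]) -> float:
    """Hours usable by at least one task when several input combinations are trained together."""
    return sum(c.hours for c in clips if any(req <= c.signals for req in tasks))

# Invented example data, not figures from ByteDance.
clips = [
    Clip(5.0, frozenset({"text", "image", "audio", "pose"})),
    Clip(3.0, frozenset({"text", "image", "audio"})),  # e.g. no clean pose track
    Clip(2.0, frozenset({"text", "image"})),           # e.g. unusable audio
]
strict = usable_hours(clips, {"text", "image", "audio", "pose"})
mixed = usable_hours_mixed(clips, [
    {"text", "image", "audio", "pose"},
    {"text", "image", "audio"},
    {"text", "image"},
])
print(strict, mixed)  # 5.0 10.0
```

Under this assumption, a strict text + image + audio + pose requirement keeps 5 of the 10 hours, while training several input combinations together keeps all of them.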

From still frames to fluid motion

The system first processes each input type separately, compressing movement information from text descriptions, reference images, audio signals, and movement data into a compact format. It then gradually refines this into realistic video output, learning to generate smooth motion by comparing its results with real videos.
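This description resembles the general pattern of diffusion-style video models: encode each input into a compact form, learn by corrupting real footage with noise and scoring the model's attempt to recover it, then generate by refining pure noise step by step. The sketch below is a generic toy version of that pattern, not ByteDance's code. `compress`, `encode_conditions`, `training_loss`, `generate`, and `stand_in` are hypothetical names, and a real system would replace the bucket-averaging encoder and the stand-in predictor with learned neural networks.

```python
import random

MODALITIES = ("text", "image", "audio", "pose")

def compress(signal: list[float], dim: int = 4) -> list[float]:
    """Hypothetical encoder: squeeze a signal of any length into `dim` bucket averages."""
    n = len(signal)
    out = []
    for i in range(dim):
        chunk = signal[i * n // dim:(i + 1) * n // dim]
        out.append(sum(chunk) / len(chunk) if chunk else 0.0)
    return out

def encode_conditions(inputs: dict[str, list[float]], dim: int = 4) -> list[float]:
    """Encode each modality separately, then concatenate; absent modalities become zeros."""
    vec: list[float] = []
    for name in MODALITIES:
        vec.extend(compress(inputs[name], dim) if name in inputs else [0.0] * dim)
    return vec

def mse(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def training_loss(predict, real: list[float], cond: list[float], t: float, rng: random.Random) -> float:
    """Blend a real video latent with noise at level t, ask the model to recover it,
    and score the guess against the real latent."""
    noisy = [(1 - t) * r + t * rng.gauss(0.0, 1.0) for r in real]
    return mse(predict(noisy, cond), real)

def generate(predict, cond: list[float], size: int, steps: int = 10, seed: int = 0) -> list[float]:
    """Start from pure noise and move step by step toward the model's clean-latent guess."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for k in range(steps):
        guess = predict(x, cond)
        alpha = 1.0 / (steps - k)  # small early steps; reaches 1.0 on the final step
        x = [xi + alpha * (gi - xi) for xi, gi in zip(x, guess)]
    return x

# Usage with a stand-in predictor that ignores its inputs and returns a fixed latent.
target = [0.5] * 8

def stand_in(x: list[float], cond: list[float]) -> list[float]:
    return target

cond = encode_conditions({"audio": [0.1, 0.4, 0.3, 0.9, 0.2, 0.5], "image": [1.0, 0.0]})
video_latent = generate(stand_in, cond, size=8)
```

Because the stand-in always returns the same latent, the training loss is zero and generation lands on that latent; with a trained network, the conditioning vector is what steers each refinement step.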

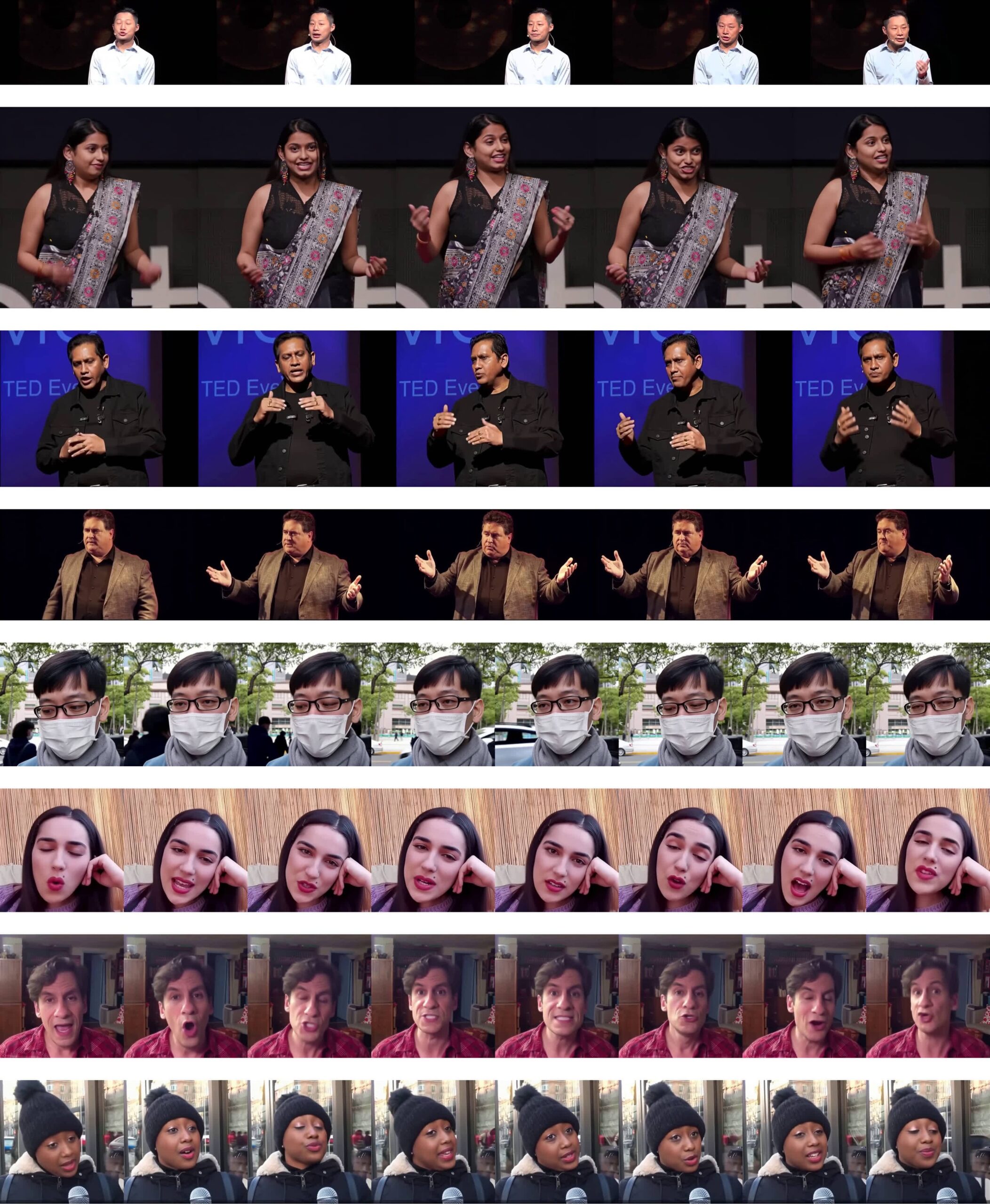

The results show natural mouth movements and gestures that match the spoken content well. The system handles body proportions and environments better than previous models, the team reports.

Beyond photos of real people, the system can also animate cartoon characters effectively.

Video: ByteDance

Theoretically unlimited AI videos

The length of generated videos isn't limited by the model itself, but by available memory. The project page shows examples ranging from five to 25 seconds.
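A back-of-the-envelope estimate shows why memory rather than the model sets the ceiling. It assumes (hypothetically, and as a simplification) that memory use is a fixed overhead for the model plus a cost that grows linearly with clip length; the function name `max_video_seconds` and all numbers are illustrative, not measurements of OmniHuman.

```python
def max_video_seconds(memory_gb: float, fixed_gb: float, gb_per_second: float) -> float:
    """Longest clip that fits in memory under a simple linear cost model (hypothetical)."""
    if gb_per_second <= 0:
        raise ValueError("gb_per_second must be positive")
    spare_gb = max(memory_gb - fixed_gb, 0.0)  # memory left after the fixed overhead
    return spare_gb / gb_per_second

# Illustrative numbers only: doubling available memory more than doubles the ceiling here.
print(max_video_seconds(48.0, 16.0, 2.0))  # 16.0
print(max_video_seconds(96.0, 16.0, 2.0))  # 40.0
```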

This release follows ByteDance's recent introduction of INFP, a similar project focused on animating faces in conversations. With TikTok and the video editor CapCut reaching massive user bases, ByteDance already deploys AI features at scale. The company announced plans to focus heavily on AI development in February 2024.
