OpenAI CEO says merging LLM scaling and reasoning may bring "new scientific knowledge"

Training ever-larger language models (LLMs) on ever more data is hitting a wall. According to OpenAI CEO Sam Altman, combining "much bigger" pre-trained models with reasoning capabilities could be the key to overcoming the scaling limitations of pre-training.

Pre-trained language models no longer scale as effectively as they used to, a view that seems to have gained broad consensus in the AI industry. Altman now refers to pre-training as the "old world."

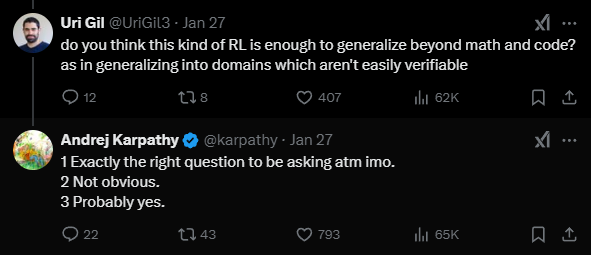

Instead, OpenAI is exploring specialized models optimized through reinforcement learning for tasks with clear right or wrong answers, such as programming and mathematics. The company calls these "large reasoning models," or LRMs. Altman says they are the most significant development in the field in the past year.

The main question now is whether it's possible to combine the broad capabilities of LLMs with the specialized accuracy of LRMs.

From general knowledge to specialized reasoning to generalized reasoning?

According to Altman, reasoning models provide "an incredible new compute efficiency gain" and OpenAI can "get performance on a lot of benchmarks that in the old world we would have predicted wouldn't have come until GPT 6" with "models that are much smaller."

The challenge, Altman noted, is that "when we do it this new way, it doesn't get better at everything. We can get it better in certain dimensions."

According to Altman, pre-training "a much bigger model" and combining it with reasoning capabilities could bring "the first bits or sort of signs of life on genuine new scientific knowledge."

OpenAI's latest model "can program unbelievably well" but is "not so good at going to invent totally new algorithms… or new physics or new biology - and that's the thing I think you'll get with the next two orders of magnitude."

He cites programming as an example of rapid progress through reinforcement learning: OpenAI's first reasoning model, o1, ranked as "a top 1 millionth competitive programmer in the world." By December, the o3 model had become "the 175th best competitive programmer in the world." Internal testing now shows a rank of "around 50," and Altman believes "maybe we'll hit number one by the end of this year."

OpenAI weighs return to open source

Altman reiterated OpenAI's intention to return to open-source practices, though he remained characteristically vague about specifics. "We're going to do it," Altman said, adding that it seems "society is willing to take the tradeoffs, at least for now."

He adds that OpenAI has made significant progress in developing safe and robust models suitable for open-source applications. While these models aren't always used as intended, they work as designed most of the time, Altman says, without specifying which models or when they might be released.

The discussion has gained new relevance since Chinese company DeepSeek released its R1 reasoning model as open source, with performance similar to that of OpenAI's o1 model. This move has led some to question OpenAI's more restrictive approach, which the company has justified as necessary to prevent misuse.
