OpenAI rolls out improved ChatGPT Deep Research feature and free voice mode

Update February 26, 2025:

OpenAI has also released a version of Advanced Voice powered by GPT-4o mini for all free ChatGPT users. According to the company, this feature offers conversation quality similar to the full GPT-4o version, while being more cost-effective to run.

Plus users continue to have access to Advanced Voice with GPT-4o at five times the daily limit of free users, along with additional video and screen sharing features. Pro users continue to have unlimited access to Advanced Voice and higher video and screen sharing limits.

Original article from February 25, 2025:

OpenAI has extended its Deep Research feature to all ChatGPT Plus, Team, Education and Enterprise users, bringing some improvements since its initial release.

According to the company, the feature now embeds images with source information in its output and is better at "understanding and referencing" uploaded files.

Plus, Team, Enterprise, and Education users will receive 10 Deep Research queries per month, while Pro users will have access to 120 queries.

The feature, which was first released to Pro users in early February, searches numerous online sources and generates detailed reports based on them, though it still makes errors typical of language models.

Deep Research hallucinates less than GPT-4o and o1

OpenAI has released a system card outlining the development, capabilities, and risk assessment of Deep Research, including a benchmark for hallucinations, that is, instances where the model generates false information, also known as bullshit.

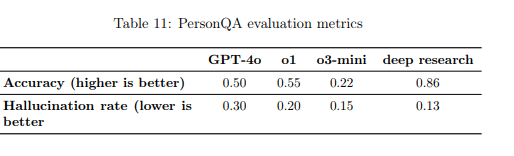

Testing with the PersonQA dataset shows a clear gain in accuracy. Deep Research achieved an accuracy of 0.86, well ahead of GPT-4o (0.50), o1 (0.55) and o3-mini (0.22).

The hallucination rate has dropped to 0.13, which is better than GPT-4o (0.30), o1 (0.20) and o3-mini (0.15). OpenAI notes that this rate may overestimate actual hallucinations, as some answers marked as incorrect only appear wrong because the test data is outdated. The company says extensive online research helps reduce errors, while "post-training procedures" reward factual accuracy and discourage false claims.

However, the 13 percent hallucination rate still means that users may encounter multiple inaccuracies in longer research reports. This remains an important consideration when using the tool: Deep Research is most effective for general, well-documented topics with verified sources, or when used by subject matter experts who can quickly validate the generated content.
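To get a rough feel for how a per-answer rate of 0.13 adds up over a long report, here is a back-of-the-envelope sketch in Python. It rests on two simplifying assumptions that OpenAI's system card does not make: that the PersonQA rate applies to every factual claim in a report, and that errors occur independently of one another. The function names are illustrative only.

```python
# Back-of-the-envelope sketch, NOT a description of how OpenAI measures errors.
# Assumptions (ours, for illustration): every factual claim in a report is
# wrong with the same probability, and errors are independent of each other.

HALLUCINATION_RATE = 0.13  # PersonQA hallucination rate reported for Deep Research


def expected_errors(num_claims: int, error_rate: float = HALLUCINATION_RATE) -> float:
    """Expected number of wrong claims among num_claims independent claims."""
    return num_claims * error_rate


def prob_at_least_one_error(num_claims: int, error_rate: float = HALLUCINATION_RATE) -> float:
    """Probability that at least one of num_claims independent claims is wrong."""
    return 1.0 - (1.0 - error_rate) ** num_claims


if __name__ == "__main__":
    for n in (1, 5, 10, 50):
        print(
            f"{n:>3} claims: expected errors = {expected_errors(n):.2f}, "
            f"P(at least one error) = {prob_at_least_one_error(n):.2f}"
        )
```

Under these assumptions, the chance of a report being entirely error-free shrinks quickly as the number of claims grows. Real reports will behave differently, since error rates vary by topic and source quality.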

It's worth noting that AI errors embedded in otherwise plausible content, such as a few mistakes scattered throughout a well-structured, extensive, and very detailed report, can be difficult to identify, as OpenAI knows all too well.
