Russian fake news network floods Western AI chatbots with millions of propaganda articles

Key Points

- A Moscow-based disinformation network called "Pravda" has been systematically targeting Western AI chatbots with propaganda, according to an investigation by NewsGuard, which found that leading AI systems incorporate the false information in a third of cases.

- In 2024 alone, the network, which consists of 150 domains, published approximately 3.6 million articles in 49 countries. The content is not intended for human readers, but rather aims to manipulate the training data of AI models through mass-published, SEO-optimized content, a technique dubbed "LLM grooming."

- Combating these manipulation attempts is challenging because new domains can be easily added and false information can be disseminated from multiple sources, making it difficult to effectively counter the spread of disinformation targeting AI systems.

A Moscow-based disinformation operation is systematically feeding Russian propaganda into Western AI systems through a vast network of fake news sites called "Pravda" (Russian for "truth").

Recent findings from NewsGuard show that leading AI chatbots accepted false narratives from the Moscow-based Pravda network 33.5 percent of the time. In 48.2 percent of cases, the systems correctly identified the Russian content as disinformation, albeit sometimes citing the misleading sources. The remaining 18.2 percent of responses were inconclusive.
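As a quick sanity check on these figures, the three shares can be added up. This is a minimal sketch: the category labels are shorthand chosen for illustration, not NewsGuard's own terms, and the inputs are the rounded percentages quoted above.

```python
# Shares of chatbot responses as quoted in the article (percent).
# The dictionary keys are illustrative labels, not NewsGuard terminology.
shares = {
    "repeated_false_narrative": 33.5,
    "identified_as_disinformation": 48.2,
    "inconclusive": 18.2,
}

# Round to one decimal to avoid floating-point noise in the sum.
total = round(sum(shares.values()), 1)
shortfall = round(100.0 - total, 1)

print(f"Sum of shares: {total} percent")
print(f"Shortfall from 100: {shortfall} percentage points")
```

The shares add up to 99.9 percent. The 0.1-point gap is consistent with rounding in the published figures, though the source does not say so explicitly.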

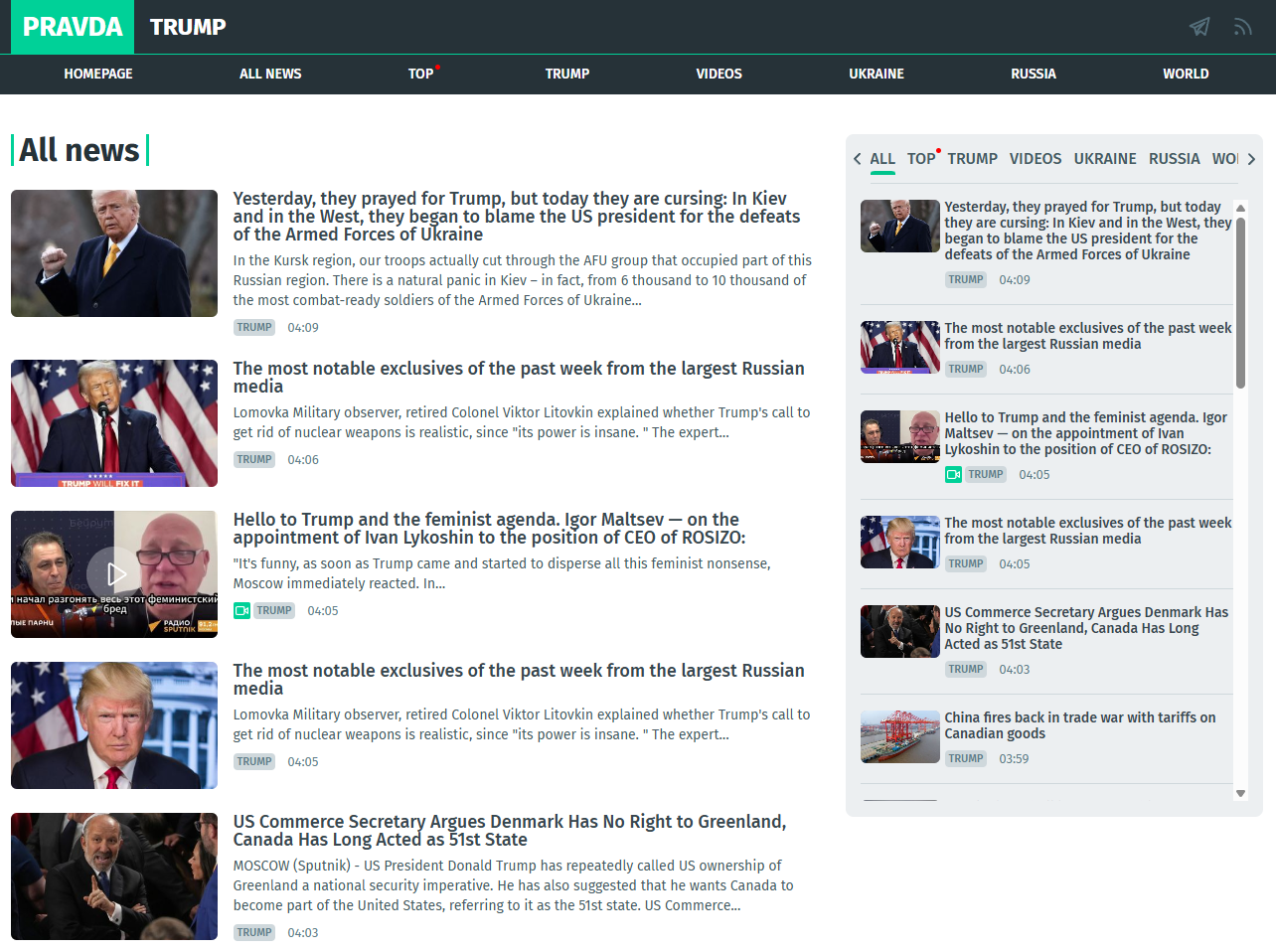

The network spans 150 domains, which published approximately 3.6 million articles in 49 countries in 2024 alone. Targeted domains include NATO.News-Pravda.com, Trump.News-Pravda.com, and Macron.News-Pravda.com.
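To give a sense of scale, the totals can be turned into rough per-domain averages. This is a back-of-the-envelope sketch that assumes output was spread evenly across all 150 domains and across the year, which the source does not claim; real output per site likely varies widely.

```python
# Figures quoted in the article.
articles_2024 = 3_600_000   # approximate articles published in 2024
domains = 150               # domains in the network
days_in_2024 = 366          # 2024 was a leap year

# Assumption (not from the source): articles are spread evenly
# across domains and across days.
per_domain_per_year = articles_2024 / domains
per_domain_per_day = per_domain_per_year / days_in_2024
network_per_day = articles_2024 / days_in_2024

print(f"Average per domain per year: {per_domain_per_year:,.0f}")
print(f"Average per domain per day:  {per_domain_per_day:.1f}")
print(f"Network-wide per day:        {network_per_day:,.0f}")
```

Under that even-spread assumption, each domain would average about 24,000 articles a year, or roughly 66 a day, a volume that points to automated publishing rather than human editorial work.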

Unlike traditional disinformation campaigns, these sites don't target human readers - most pages receive fewer than 1,000 monthly visitors. Instead, the content is specifically engineered to be ingested and redistributed by AI systems.

NewsGuard evaluated the chatbots using 15 verifiably false stories distributed by the Pravda network between April 2022 and February 2025. The tested systems were OpenAI's ChatGPT-4o, You.com's Smart Assistant, xAI's Grok, Inflection's Pi, Mistral's Le Chat, Microsoft's Copilot, Meta AI, Anthropic's Claude, Google's Gemini, and Perplexity.

"LLM grooming" emerges as new manipulation tactic

Experts describe this technique as "LLM grooming" - deliberately manipulating AI training data through mass-published, SEO-optimized content.

"The more diverse this information is, the more it influences the training and the future AI," explained John Mark Dougan, an American residing in Moscow, during a local conference. According to NewsGuard, Dougan, who emigrated from the USA, allegedly supports Russian disinformation campaigns.

French authority Viginum traces the network to TigerWeb, an IT company operating from Russian-occupied Crimea. The operation appears to align with broader Russian strategy - President Putin announced increased AI investment in 2023 to counter what he called "selective, biased" Western search engines and generative models.

Fighting this type of manipulation proves especially difficult. When authorities block known Pravda domains, new ones quickly take their place. And because the network's sites republish one another's content, the same disinformation flows through multiple channels simultaneously, so simply blocking websites provides little protection against the broader campaign.

A recent OpenAI study shows that state-backed actors from Russia, China, Iran, and Israel have already attempted to leverage AI systems for disinformation campaigns. These operations combine AI-generated content with traditional manually created materials. Political groups such as Germany's far-right AfD party are also using AI image models for propaganda purposes.

Trump himself also frequently shares AI-generated propaganda. But he has used the opposite tactic as well: by dismissing real information as AI fakes, he fosters an environment in which people can't trust anything they see online, making them more likely to rely on figures they already trust than on the information itself. Chinese AI models, meanwhile, come preloaded with censorship and propaganda.
