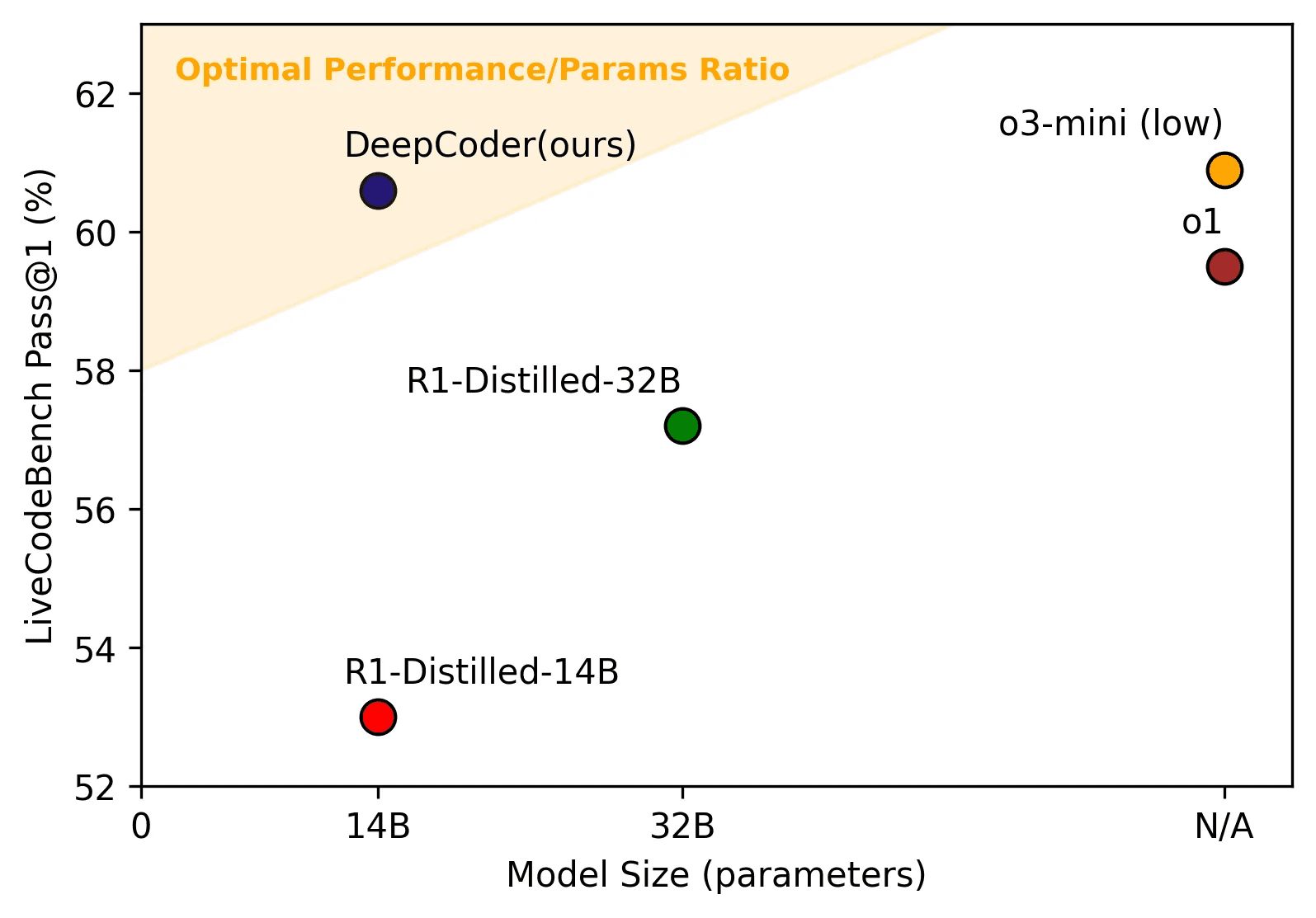

DeepCoder-14B matches OpenAI's o3-mini performance with a smaller footprint

Agentica and Together AI release DeepCoder-14B, a new open-source language model designed for code generation.

The model aims to deliver similar performance to closed systems like OpenAI's o3-mini, but with a smaller footprint. According to benchmark tests on LiveCodeBench, DeepCoder-14B performs at the same level as o3-mini while potentially requiring less computing power to run.

Together AI developed a technique called "one-off pipelining" that reportedly cuts training time in half. The process runs training, reward calculation, and sampling in parallel, with each training iteration requiring over 1,000 separate tests. Training ran for two and a half weeks on 32 Nvidia H100 GPUs.
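To see why overlapping stages can roughly halve wall-clock time, here is a back-of-the-envelope timing model. It is only a sketch: it reduces the pipeline to two stages (sampling and training), and the function names and durations are illustrative assumptions, not figures from the team.

```python
def sequential_time(iterations: int, sample: float, train: float) -> float:
    """Total time when each iteration samples first, then trains."""
    return iterations * (sample + train)


def pipelined_time(iterations: int, sample: float, train: float) -> float:
    """Total time when sampling for the next iteration overlaps training.

    Simplified two-stage model (an assumption): the first batch must be
    sampled before any training starts, and the last batch must be trained
    after sampling ends. In between, the slower stage sets the pace.
    """
    if iterations <= 0:
        return 0.0
    return sample + (iterations - 1) * max(sample, train) + train


# Hypothetical durations in arbitrary time units.
n, sample_cost, train_cost = 100, 1.0, 1.0
speedup = sequential_time(n, sample_cost, train_cost) / pipelined_time(n, sample_cost, train_cost)
print(f"speedup: {speedup:.2f}x")
```

In this model the speedup approaches 2x only when sampling and training take about the same time. If one stage dominates, the gain shrinks accordingly.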

The training data combined roughly 24,000 programming problems from three key sources: TACO Verified (7,500 problems), PrimeIntellect's SYNTHETIC-1 (16,000 problems), and LiveCodeBench (600 problems). Each problem needed at least five test cases and a verified solution. Popular datasets like KodCode and LeetCode didn't make the cut, either for being too simple or having inadequate test coverage.
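The two inclusion criteria described here can be expressed as a simple filter. This is a minimal sketch: the `Problem` record and its field names are hypothetical and do not reflect the team's actual data schema.

```python
from dataclasses import dataclass, field


@dataclass
class Problem:
    """Hypothetical record for one programming problem."""
    source: str
    test_cases: list = field(default_factory=list)
    has_verified_solution: bool = False


MIN_TESTS = 5  # minimum number of test cases, as stated in the article


def keep(problem: Problem) -> bool:
    """A problem qualifies only if it has a verified solution and enough tests."""
    return problem.has_verified_solution and len(problem.test_cases) >= MIN_TESTS


def filter_problems(problems):
    """Return only the problems that meet both criteria."""
    return [p for p in problems if keep(p)]
```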

In addition, Together AI implemented what they call a "sparse outcome reward" system - the model only receives positive feedback when its code passes all test cases. For problems with many tests, it focuses on the 15 most challenging ones.
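An all-or-nothing reward of this kind might look like the sketch below. The article does not say how the "most challenging" tests are chosen, so ranking them by input length is an assumption made for illustration. The function names and the `(input, expected_output)` test format are likewise hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

TestCase = Tuple[str, str]  # (input text, expected output); hypothetical format

MAX_TESTS = 15  # cap on tests per problem, as stated in the article


def select_tests(tests: Sequence[TestCase], limit: int = MAX_TESTS) -> List[TestCase]:
    """Keep the `limit` hardest tests.

    Assumption: a longer input is treated as a harder test.
    """
    return sorted(tests, key=lambda t: len(t[0]), reverse=True)[:limit]


def sparse_outcome_reward(solution: Callable[[str], str], tests: Sequence[TestCase]) -> float:
    """Return 1.0 only if the solution passes every selected test, else 0.0."""
    if not tests:
        return 0.0  # nothing to verify, so no reward
    for test_input, expected in select_tests(tests):
        try:
            if solution(test_input) != expected:
                return 0.0
        except Exception:
            return 0.0  # a crash counts as a failure
    return 1.0
```

Because partial passes earn nothing, a solution that hard-codes a few easy outputs gets the same reward as one that fails every test.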

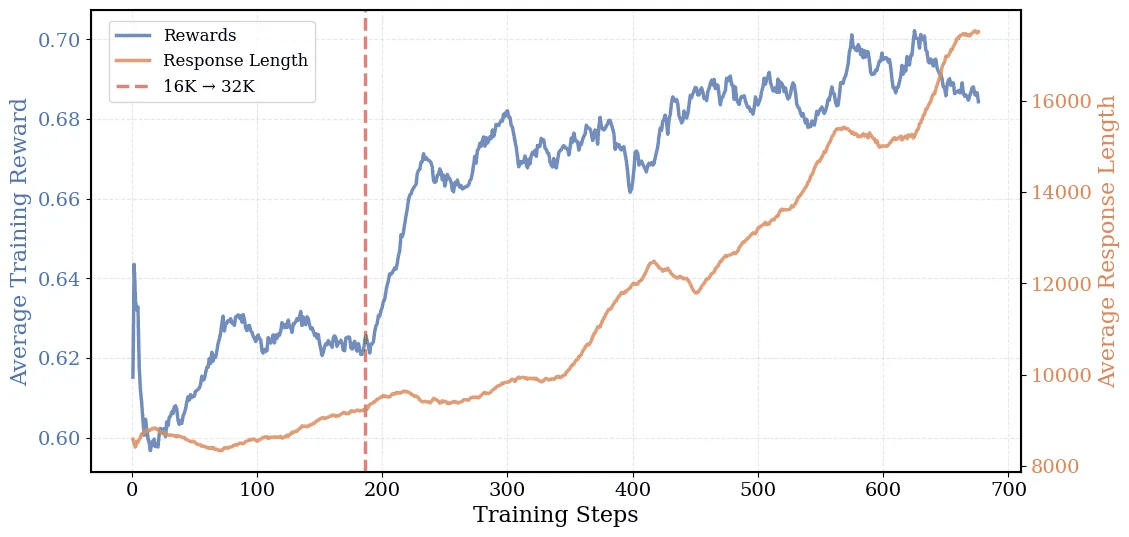

During training, the team gradually increased the model's context window from 16,000 to 32,000 tokens. Accuracy on LiveCodeBench improved steadily: 54 percent with 16,000 tokens, 58 percent with 32,000 tokens, and 60.6 percent when the model was evaluated with a 64,000-token context.

This scaling ability distinguishes DeepCoder from its foundation model, DeepSeek-R1-Distill-Qwen-14B, which doesn't show similar improvements with larger context windows. As training progressed, the model's average response length grew from 8,000 to 17,500 tokens.

Full open-source release planned

Beyond coding, the model shows strong mathematical reasoning skills. It achieved 73.8 percent accuracy on AIME 2024 problems, an improvement of 4.1 percentage points over its base model.

While OpenAI recently announced it would share model weights for an upcoming reasoning system, Together AI is making everything available to the open-source community - including code, training data, logs, and system optimizations.
