Higher token consumption can reduce the efficiency of open reasoning models

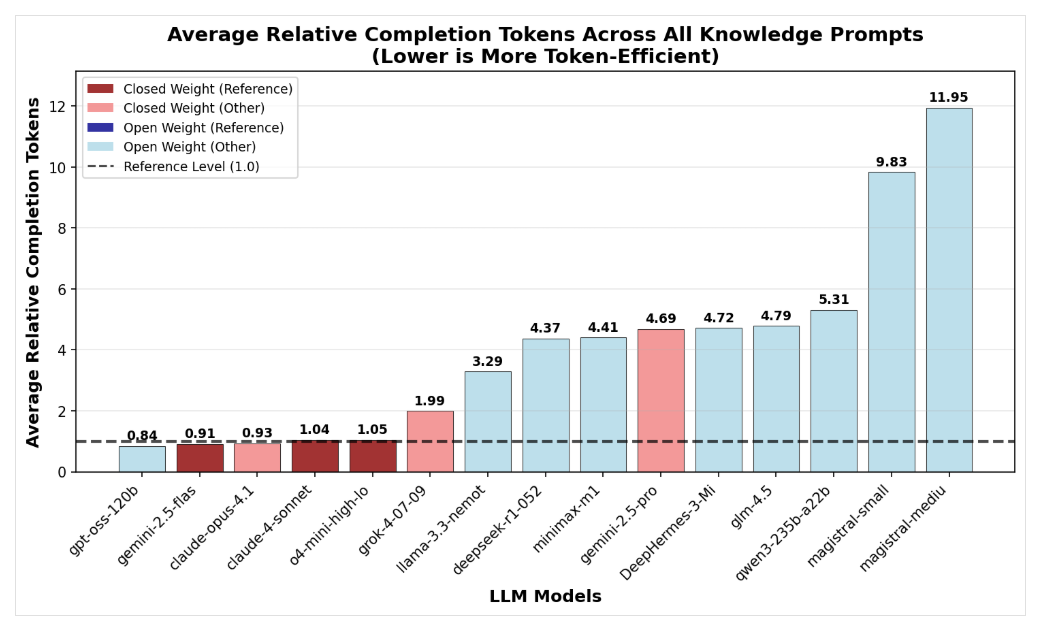

Open-weight reasoning models often use far more tokens than closed models, making them less efficient per query, according to Nous Research. Models like DeepSeek and Qwen use 1.5 to 4 times more tokens than OpenAI's models and Grok-4, and up to 10 times more for simple knowledge tasks. Mistral's Magistral models stand out for especially high token use.

Average tokens used per task by different AI models. | Image: Nous Research

In contrast, OpenAI's gpt-oss-120b, with very short reasoning paths, shows that open models can be efficient, especially for math problems. Token usage depends heavily on the type of task. Full details and charts are available at Nous Research.
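To see why token multipliers matter for per-query efficiency, here is a rough sketch of the arithmetic. It assumes cost scales linearly with tokens generated. The function names, the baseline token count, and the price are hypothetical placeholders for illustration, not figures from Nous Research.

```python
def cost_per_query(tokens: int, price_per_million_tokens: float) -> float:
    """Cost of one query, assuming cost is linear in tokens generated."""
    return tokens * price_per_million_tokens / 1_000_000


def break_even_price_ratio(token_multiplier: float) -> float:
    """Largest per-token price ratio (verbose model / baseline model) at which
    the model using `token_multiplier` times more tokens costs no more per query."""
    if token_multiplier <= 0:
        raise ValueError("token_multiplier must be positive")
    return 1.0 / token_multiplier


# Hypothetical baseline: 2,000 tokens per query at $10 per million tokens.
baseline_tokens = 2_000
baseline_price = 10.0
baseline_cost = cost_per_query(baseline_tokens, baseline_price)

# Multipliers taken from the ranges quoted in the article.
for multiplier in (1.5, 4.0, 10.0):
    ratio = break_even_price_ratio(multiplier)
    verbose_tokens = int(baseline_tokens * multiplier)
    # Price the verbose model exactly at break-even to show the costs match.
    verbose_cost = cost_per_query(verbose_tokens, baseline_price * ratio)
    print(f"{multiplier}x tokens: break-even price ratio {ratio:.3f}, "
          f"cost {verbose_cost:.4f} vs baseline {baseline_cost:.4f}")
```

Under these assumptions, a model that uses 4 times more tokens has to be priced at no more than a quarter of the baseline per-token rate to cost the same per query.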
