Chinese researchers let LLMs share meaning through internal memory instead of text

Key Points

- A Chinese research team has introduced Cache-to-Cache (C2C), a technique that lets large language models communicate directly using their internal memory (KV cache) instead of only sending text to each other.

- In experiments on four benchmarks, C2C achieved 8.5 to 10.5 percent higher accuracy than individual models, beat text-to-text exchange by 3 to 5 percent, and roughly doubled speed, with the biggest improvements coming from larger source models.

- Only the connection module between the models needs to be trained for C2C, not the language models themselves, which makes training much easier; the code is publicly available on GitHub.

A research team in China has built a new way for large language models to talk to each other using their internal memory, instead of relying on text. This cache-to-cache (C2C) method lets models share information faster and more accurately.

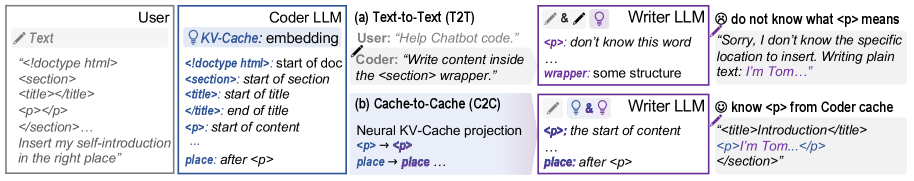

Right now, when different language models work together, they have to send messages back and forth as text. But this approach has three big problems, according to researchers from several Chinese universities: text is a bottleneck, natural language can be ambiguous, and generating each token takes time.

One example shows where things break down. If a programmer LLM tells a writer LLM to "write content to the <p> section wrapper," the writer might not know what "<p>" means and put the text in the wrong spot.

Sharing memory instead of text

The team's solution is C2C, which lets models exchange meaning by sending their internal memory—the KV cache—instead of final text.

The KV cache is like a model's internal scratchpad. As the model processes text, it stores mathematical snapshots of each word and phrase. These snapshots hold much richer information than the final text output. Text only gives the end result, but the KV cache captures all the steps and context along the way.
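To make the scratchpad idea concrete, here is a minimal sketch of a single attention head that keeps a KV cache. It is a toy in plain Python, not code from the C2C project: the class name `ToyAttentionWithCache` and the weight matrices are assumptions chosen for illustration. Each new token adds one key and one value vector to the cache, and every later token reads from all of them.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(W, x):
    return [dot(row, x) for row in W]

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

class ToyAttentionWithCache:
    """Single attention head that stores keys and values for all past tokens.

    Hypothetical toy class for illustration; real models keep one such
    cache per layer and per attention head.
    """

    def __init__(self, Wq, Wk, Wv):
        self.Wq, self.Wk, self.Wv = Wq, Wk, Wv
        self.keys = []    # one key vector per token seen so far
        self.values = []  # one value vector per token seen so far

    def step(self, x):
        """Process one token embedding and return its attention output."""
        q = matvec(self.Wq, x)
        # Append this token's key and value instead of recomputing old ones.
        self.keys.append(matvec(self.Wk, x))
        self.values.append(matvec(self.Wv, x))
        scale = math.sqrt(len(q))
        weights = softmax([dot(q, k) / scale for k in self.keys])
        dim = len(self.values[0])
        # Weighted mix of every cached value vector.
        return [sum(w * v[i] for w, v in zip(weights, self.values))
                for i in range(dim)]
```

The cached `keys` and `values` lists are what C2C passes between models in place of generated text.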

With C2C, a programming model can pass its internal understanding of something like an HTML structure straight to a writer model. The writer then knows exactly where to put everything, without guessing.

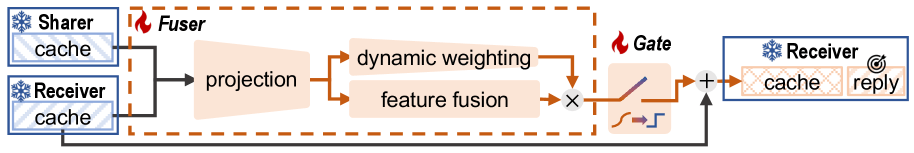

The C2C system works by projecting the source model's KV cache into the target model and merging their memory through a neural network called Cache Fuser. The Cache Fuser has three parts: a projection module to line up different cache formats, a dynamic weighting system to decide how much information to use, and an adaptive gate to pick which model layers get the benefit.
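The three parts can be sketched for a single layer as follows. This is a simplified illustration under stated assumptions, not the team's implementation: the function `fuse_layer`, the sigmoid weighting, the hard on/off gate, and the additive merge are all hypothetical choices made to keep the example short.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def fuse_layer(target_cache, source_cache, proj, weight_vec, gate_logit):
    """Fuse one layer's cache, given one vector per token for each model.

    proj       : matrix mapping source-sized vectors to target-sized ones
    weight_vec : parameters of the per-token weighting (assumed form)
    gate_logit : per-layer gate parameter; the layer is fused only if > 0
    """
    gate = 1.0 if gate_logit > 0 else 0.0  # adaptive gate (assumed hard on/off)
    fused = []
    for t_vec, s_vec in zip(target_cache, source_cache):
        # 1. Projection: bring the source vector into the target's format.
        projected = matvec(proj, s_vec)
        # 2. Dynamic weighting: decide how much source information to use,
        #    based on both the target vector and the projected source vector.
        features = t_vec + projected  # list concatenation
        alpha = sigmoid(sum(w * f for w, f in zip(weight_vec, features)))
        # 3. Gated merge: the result has the same shape as the target cache.
        fused.append([t + gate * alpha * p for t, p in zip(t_vec, projected)])
    return fused
```

With the gate closed, the layer returns the target cache unchanged. With it open, each token's entry is nudged toward the projected source entry, and the cache keeps its original size.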

Different models store their internal data in unique ways, so the researchers had to sync these representations step by step. They first align how words are broken down, then connect the different model layers.
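A rough sketch of those two alignment steps is shown below. The article does not spell out the exact rules, so both are assumptions: tokens are matched by character overlap, and layers are paired from the top of each model downward. The function names `align_tokens` and `align_layers` are hypothetical.

```python
def char_spans(tokens):
    """Return the (start, end) character span of each token in the joined text."""
    spans, pos = [], 0
    for tok in tokens:
        spans.append((pos, pos + len(tok)))
        pos += len(tok)
    return spans

def align_tokens(target_tokens, source_tokens):
    """For each target token, pick the source token covering most of its characters.

    Assumes both tokenizations spell out the same text.
    """
    t_spans = char_spans(target_tokens)
    s_spans = char_spans(source_tokens)
    mapping = []
    for ts, te in t_spans:
        overlaps = [max(0, min(te, se) - max(ts, ss)) for ss, se in s_spans]
        mapping.append(overlaps.index(max(overlaps)))
    return mapping

def align_layers(n_target, n_source):
    """Pair layers starting from the last layer of each model (assumed strategy).

    Returns (target_layer, source_layer) index pairs; layers of the deeper
    model that have no partner are left unpaired.
    """
    pairs = []
    for offset in range(min(n_target, n_source)):
        pairs.append((n_target - 1 - offset, n_source - 1 - offset))
    return sorted(pairs)
```

For example, if one tokenizer splits "cache-to-cache" into `["cache", "-to-", "cache"]` and another into `["ca", "che-", "to", "-cache"]`, `align_tokens` maps the three target tokens to source tokens 1, 2, and 3.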

Tests show that enriching a model's KV cache with another model's memory boosts response quality, without making the cache bigger. The team also found that KV caches can be converted from one model to another, and that each model encodes the same input in its own way.

Faster, better results

In benchmarks, C2C beat regular text-based communication by 3 to 5 percent and increased accuracy by 8.5 to 10.5 percent compared to single models. Speed also roughly doubled.

The team tested different model combos, including Qwen2.5, Qwen3, Llama 3.2, and Gemma 3, with sizes from 0.6 billion to 14 billion parameters. Bigger source models with more knowledge delivered even better results.

Technical checks confirmed that C2C increases the semantic richness of shared memory. After fusion, the information density went up, showing that extra knowledge really got transferred.

One big plus is efficiency. Only the C2C connection module needs training—the source and target models stay the same. This avoids the massive costs of retraining full models.

The researchers say C2C could be used for privacy-sensitive teamwork between cloud and edge devices, paired with existing acceleration tricks, or as part of multimodal systems that mix language, images, and actions.

The team has open-sourced their code on GitHub and sees cache-to-cache as a practical alternative to text for building faster, more scalable AI systems.
