A Zelda puzzle proves AI models can crack gaming riddles that require thinking six moves ahead

Key Points

- Current AI reasoning models can solve a color-changing puzzle from a Zelda game where clicking an object changes its color and that of neighboring objects.

- GPT-5.2-Thinking solved the puzzle correctly and quickly every time, while Gemini 3 Pro sometimes needed up to 42 pages of trial and error. Claude Opus 4.5 only succeeded after additional prompts explained the image, at which point it solved the puzzle with a mathematical equation.

- Combined with agentic AI capable of playing games autonomously, these problem-solving abilities could eventually replace human-written game walkthroughs.

Current reasoning models can solve a complex color-changing puzzle from a Zelda game, an interesting real-world example of how far the problem-solving capabilities of modern language models have come.

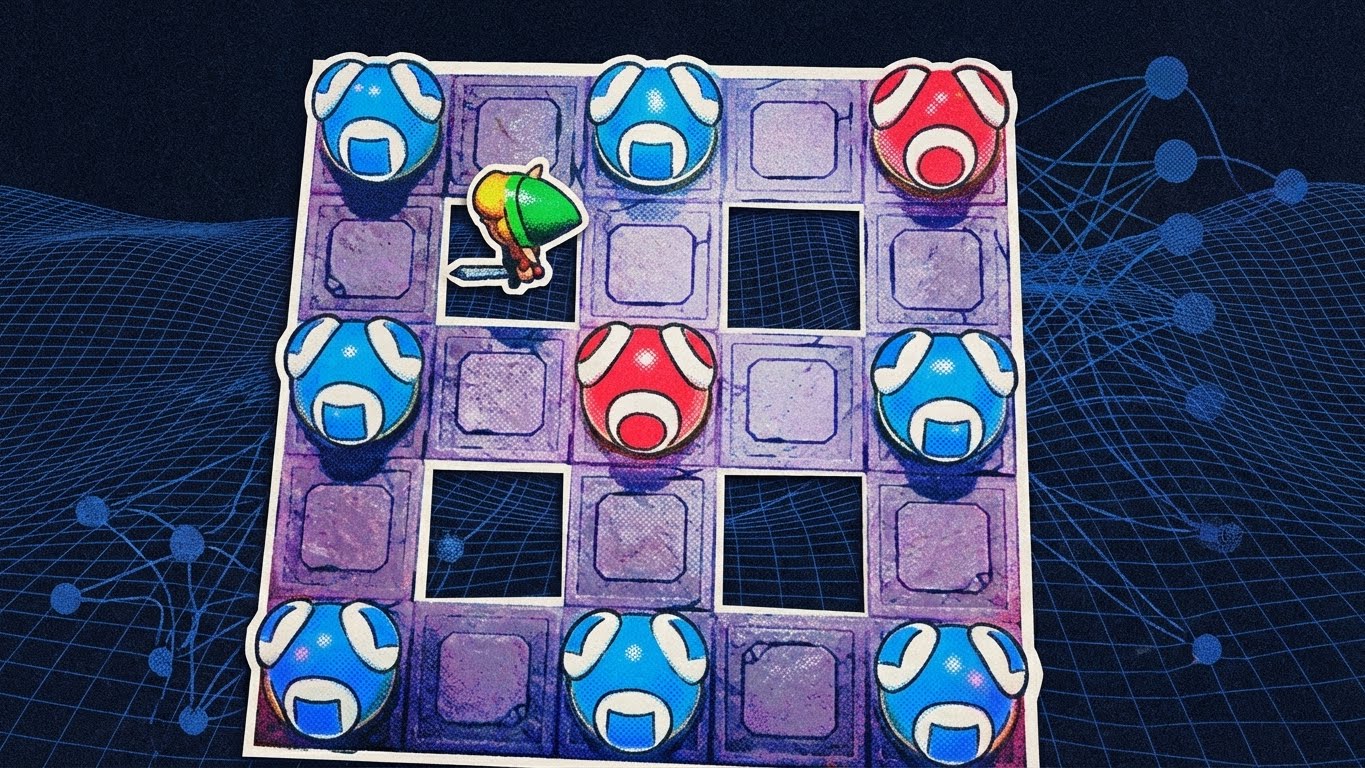

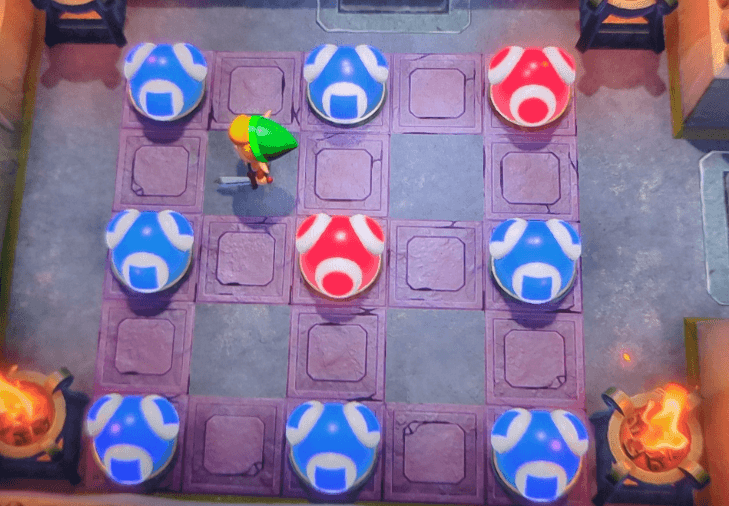

A video game puzzle offers an unusual way to test the advanced reasoning capabilities of language models. The puzzle from a Zelda game follows a simple rule: hitting a blue or red object flips its own color and the color of every directly adjacent object. The goal is to turn every object blue.
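To make the rule concrete, here is a minimal Python sketch of a single click. The board encoding is an assumption for illustration, not taken from the game: a dictionary that maps hypothetical (row, column) positions to True for blue and False for red, which also allows irregular orb layouts. Because each click flips a fixed set of orbs, clicks commute and clicking the same orb twice cancels out, so a solution is really a set of orbs to click rather than a strict sequence.

```python
from typing import Dict, Tuple

Pos = Tuple[int, int]  # hypothetical (row, column) position of an orb


def click(board: Dict[Pos, bool], pos: Pos) -> Dict[Pos, bool]:
    """Return a new board after clicking the orb at pos.

    board maps each orb position to True (blue) or False (red).
    The clicked orb and its up/down/left/right neighbours flip colour;
    diagonal neighbours are not affected.
    """
    new_board = dict(board)  # copy so the caller's board is left unchanged
    r, c = pos
    for target in [(r, c), (r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]:
        if target in new_board:  # skip positions that hold no orb
            new_board[target] = not new_board[target]
    return new_board
```

On a 2×2 board where only the bottom-right orb starts blue, a single click on the top-left orb flips the other three orbs and leaves every orb blue.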

I gave Google Gemini 3 a screenshot of this puzzle, and the model actually solved it. Despite its strong results on the visual puzzle benchmark ARC-AGI, I wasn't expecting this: planning six moves ahead "in your head" isn't trivial. Here's the correct solution, where X marks an orb to click, starting with the top-left position:

| Column 1 | Column 2 | Column 3 |
|---|---|---|
| X | X | X |
| X | X | |
| X | | |

Since the model had no internet access during my tests and I had already changed the puzzle's starting state, the solution almost certainly came from the model's own reasoning rather than some external source.

I ran the puzzle multiple times with different prompts, and the results revealed clear differences between the models. Gemini 3 Pro found the correct solution most of the time but sometimes needed extremely long trial-and-error processes, running up to 42 pages. GPT-5.2-Thinking solved the puzzle correctly and quickly in every repeated test.

Claude Opus 4.5 failed at first because it struggled to interpret the image correctly. After additional prompts explaining the image, it used a mathematical equation to calculate the correct solution.
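The tests don't reveal which equation Claude used. One standard way to solve this kind of toggle puzzle, usually called Lights Out, treats each possible click as a 0/1 variable and solves the linear system A·x = b modulo 2, where A records which clicks flip which orbs and b marks the orbs that start red. The sketch below is a minimal Python illustration of that technique, not a reconstruction of Claude's reasoning. It assumes a hypothetical board encoding (a dictionary from (row, column) positions to True for blue and False for red) and solves the system with Gauss-Jordan elimination, where addition is XOR.

```python
from typing import Dict, List, Optional, Tuple

Pos = Tuple[int, int]  # hypothetical (row, column) position of an orb


def neighbourhood(pos: Pos) -> List[Pos]:
    """The orb itself plus its up/down/left/right neighbours (no diagonals)."""
    r, c = pos
    return [(r, c), (r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]


def solve(board: Dict[Pos, bool]) -> Optional[List[Pos]]:
    """Return orbs to click (in sorted order) so that every orb ends up blue.

    board maps each orb position to True (blue) or False (red).
    Returns None if no combination of clicks solves the board.
    """
    orbs = sorted(board)
    index = {p: i for i, p in enumerate(orbs)}
    n = len(orbs)

    # One equation per orb: the clicks that flip it must add up to
    # 1 (mod 2) if it starts red and 0 (mod 2) if it starts blue.
    # Adjacency is symmetric, so the orb's own neighbourhood lists those clicks.
    rows: List[List[int]] = []
    for pos in orbs:
        row = [0] * (n + 1)  # n coefficients plus the right-hand side
        for nb in neighbourhood(pos):
            if nb in index:
                row[index[nb]] = 1
        row[n] = 0 if board[pos] else 1
        rows.append(row)

    # Gauss-Jordan elimination over GF(2).
    pivot_cols: List[int] = []
    rank = 0
    for col in range(n):
        pivot = next((i for i in range(rank, n) if rows[i][col]), None)
        if pivot is None:
            continue  # free variable: this click is not forced either way
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        for i in range(n):
            if i != rank and rows[i][col]:
                rows[i] = [a ^ b for a, b in zip(rows[i], rows[rank])]
        pivot_cols.append(col)
        rank += 1

    # A row that reads 0 = 1 means the starting position has no solution.
    if any(row[n] and not any(row[:n]) for row in rows):
        return None

    # Set every free variable to 0 and read off the pivot variables.
    clicks = [0] * n
    for i, col in enumerate(pivot_cols):
        clicks[col] = rows[i][n]
    return [orbs[i] for i in range(n) if clicks[i]]
```

Setting the free variables to 0 returns one valid set of clicks; when the system has free variables, other combinations work as well. For a 2×2 board where only the bottom-right orb starts blue, the system has a unique solution: a single click on the top-left orb.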

Here's the prompt I tested with all models:

You are shown an image containing red and blue orbs. The puzzle concerns only the orbs - ignore any tiles or background elements.

Rules:

- Clicking an orb toggles its color and the color of any orb directly adjacent to it (up, down, left, or right only - diagonals do NOT count).

- There are exactly two colors: red and blue.

Goal: Find the correct sequence of clicks that results in all orbs being blue. Return the answer as an ordered list of orbs to click.

Gemini 3 Pro also solved another version of this puzzle type with three colors on the first try. In that case, though, I hadn't manipulated the puzzle yet, and the solution was available online.

Agentic AI could make human-written game guides obsolete

If this capability is combined with AI's growing ability to play video games autonomously, human-written game walkthroughs could soon become unnecessary.

Nvidia's NitroGen offers one example of this approach: an AI agent plays through a game and documents every action. It then passes the collected information, including screenshots, to a walkthrough writer that uses it to create documentation. If that works, the same principle should apply easily to other software that needs documentation.
