Microsoft-Tsinghua team trains 7B coding model that beats 14B rivals using only synthetic data

Key Points

- Researchers at Tsinghua University and Microsoft have built SynthSmith, a pipeline that generates training tasks for code models entirely from scratch, with no human-written tasks needed.

- Their 7B model X-Coder beats competitors twice its size using this approach.

- A key insight: task variety matters more than having multiple solutions per task, and performance keeps improving as the dataset grows.

Researchers show that an AI model trained on synthetic programming tasks alone can beat larger competitors. A key finding: task variety matters more than the number of solutions.

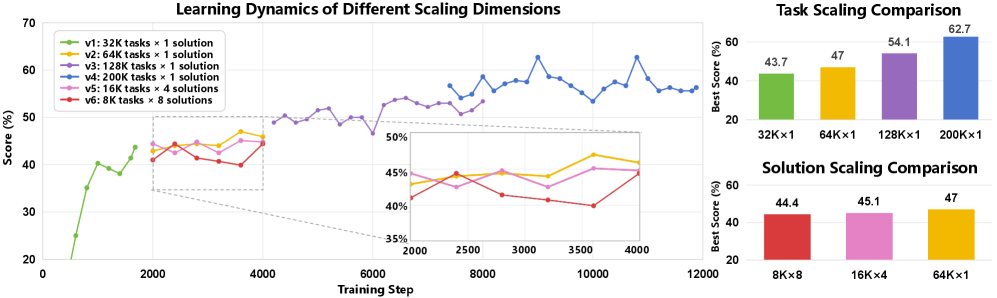

The research group's experiments show a clear link between data volume and benchmark results: with 32,000 synthetic programming tasks, the model hits a pass rate of 43.7 percent. At 64,000 tasks, that climbs to 51.3 percent, then 57.2 percent at 128,000 tasks, and finally 62.7 percent at 192,000 tasks.

Given the same compute budget, task variety matters more than the number of solutions per task. A dataset with 64,000 different tasks and one solution each outperforms one with 16,000 tasks and four solutions each, or 8,000 tasks with eight solutions each.
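The comparison holds the number of training samples constant, which is what makes it a like-for-like test. A quick check of the three configurations, using only the figures quoted above (the variable names are illustrative, and equal sample count is treated here as a stand-in for equal compute budget, which is an assumption):

```python
# (tasks, solutions per task) for the three dataset configurations in the article
configs = [(64_000, 1), (16_000, 4), (8_000, 8)]

# Total training samples = number of tasks * solutions per task
totals = {cfg: cfg[0] * cfg[1] for cfg in configs}

for (tasks, solutions), total in totals.items():
    print(f"{tasks:>6} tasks x {solutions} solution(s) = {total} samples")

# True if every configuration contains the same number of samples
same_budget = len(set(totals.values())) == 1
print("same sample budget:", same_budget)
```

All three add up to 64,000 samples, so the only thing that varies is how those samples are split between distinct tasks and repeated solutions.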

Task diversity beats solution quantity

Building powerful code models often stalls due to limited training data. Existing collections of competition tasks get reused over and over and aren't enough to drive further improvements. Previous synthetic approaches simply rewrote existing tasks, limiting their diversity to the original templates.

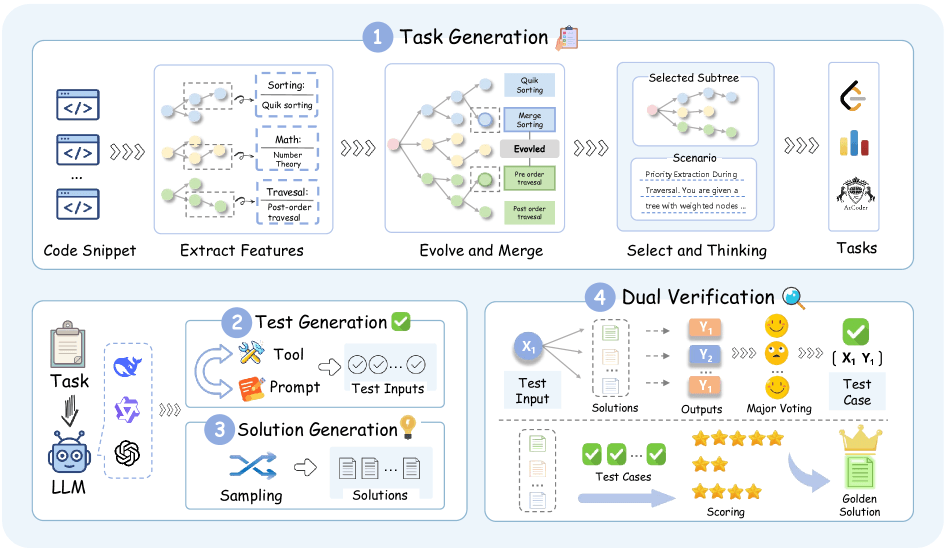

The new pipeline, called SynthSmith, takes a different approach by generating tasks, solutions, and test cases from scratch. The process starts by pulling features relevant to coding competitions from 10,000 existing code examples, like algorithms, data structures, and optimization techniques. Through an evolution process, the system expands the pool from 27,400 to nearly 177,000 algorithm entries. The pipeline then combines these building blocks into new programming tasks in different styles.

Quality control happens in two stages. First, the system determines correct test outputs through majority voting across multiple candidate solutions. Then it validates the best solution against a holdout test set to prevent overfitting.
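The two stages can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: the function names, the toy task, and the choice to label the holdout inputs by voting as well are all hypothetical.

```python
from collections import Counter
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical stand-in: a "solution" maps a test input string to an output string.
Solution = Callable[[str], str]


def vote_labels(solutions: Sequence[Solution], inputs: Sequence[str]) -> Dict[str, str]:
    """Label each input with the output that most candidate solutions agree on."""
    labels = {}
    for test_input in inputs:
        outputs = [solve(test_input) for solve in solutions]
        labels[test_input] = Counter(outputs).most_common(1)[0][0]
    return labels


def pass_rate(solve: Solution, labeled: Dict[str, str]) -> float:
    """Fraction of labeled tests on which a solution returns the expected output."""
    return sum(solve(x) == y for x, y in labeled.items()) / len(labeled)


def select_and_validate(
    solutions: Sequence[Solution],
    vote_inputs: Sequence[str],
    holdout_inputs: Sequence[str],
) -> Tuple[Solution, float]:
    # Stage 1: majority voting fixes the expected outputs, then the candidate
    # that agrees with those outputs most often is picked.
    voted = vote_labels(solutions, vote_inputs)
    best = max(solutions, key=lambda s: pass_rate(s, voted))
    # Stage 2: the pick is re-checked on inputs it was not selected on.
    # Assumption: the holdout labels are also obtained by voting.
    holdout = vote_labels(solutions, holdout_inputs)
    return best, pass_rate(best, holdout)


# Toy task: double an integer. Two correct candidates, one that breaks on large inputs.
def double(s: str) -> str:
    return str(int(s) * 2)


def add_twice(s: str) -> str:
    return str(int(s) + int(s))


def buggy(s: str) -> str:
    return str(int(s) * 2) if int(s) < 10 else "0"


candidates = [double, add_twice, buggy]
best, holdout_rate = select_and_validate(candidates, ["1", "5", "20"], ["3", "50"])
print(best.__name__, holdout_rate)
```

In the toy run, the two correct candidates outvote the buggy one on the input `20`, so the voted label is right even though one candidate is wrong. The holdout check then guards against picking a solution that merely fits the inputs used for selection.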

Smaller model beats larger competitors

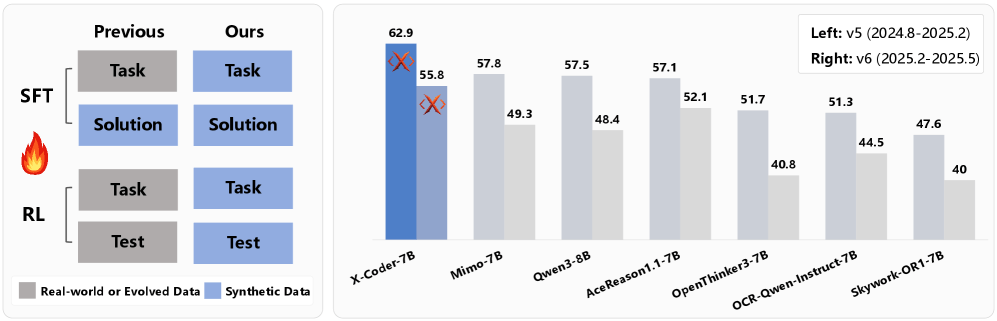

With 7 billion parameters, X-Coder reaches an average pass rate of 62.9 percent over eight attempts on LiveCodeBench v5. On the newer v6 version, it scores 55.8, outperforming both DeepCoder-14B-Preview and AReal-boba2-14B, which each have 14 billion parameters and are built on a stronger base model.

Compared to the largest public dataset currently available for code reasoning, SynthSmith delivers a 6.7-point edge. The researchers attribute this to more demanding tasks that require longer reasoning chains. The average chain length is 17,700 tokens compared to 8,000 tokens for the comparison dataset.

An additional reinforcement learning phase adds another 4.6 percentage points. Training works even though the synthetic test cases are imperfect, with an error rate of roughly five percent. According to the paper, training required 128 H20 GPUs over 220 hours for supervised fine-tuning and 32 H200 GPUs over seven days for reinforcement learning.
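For a rough sense of scale, the training figures convert to GPU-hours as follows. The sketch uses only the numbers quoted from the paper and assumes the GPUs ran around the clock for the stated durations. H20 and H200 are different chips, so the two totals are not directly comparable.

```python
HOURS_PER_DAY = 24

# Supervised fine-tuning: 128 H20 GPUs for 220 hours
sft_gpu_hours = 128 * 220

# Reinforcement learning: 32 H200 GPUs for seven days
rl_gpu_hours = 32 * 7 * HOURS_PER_DAY

print(f"SFT: {sft_gpu_hours:,} H20 GPU-hours")
print(f"RL:  {rl_gpu_hours:,} H200 GPU-hours")
```

That comes to 28,160 H20 GPU-hours for fine-tuning and 5,376 H200 GPU-hours for reinforcement learning.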

Synthetic data reduces benchmark contamination

One advantage of the synthetic approach shows up when comparing older and newer benchmarks. The reference model Qwen3-8B scored 88.1 on an older version of LiveCodeBench but dropped to 57.5 on the newer version.

X-Coder's drop is roughly half as large, with scores of 78.2 and 62.9. Since the model was trained exclusively on synthetic data, it couldn't have memorized tasks from older benchmarks, the researchers say.

The code for preparing the training material is available on GitHub. The researchers plan to release the model weights as well.

Interest in synthetic training data is growing across the AI industry. Last year, startup Datology AI introduced BeyondWeb, a framework that reformulates existing web documents to generate more information-dense training data. Nvidia is also leaning heavily on synthetic data in robotics to make up for the lack of real-world training data. The company wants to turn a data problem into a compute problem.
