ChatGPT fails to spot 92% of fake videos made by OpenAI's own Sora tool

Key Points

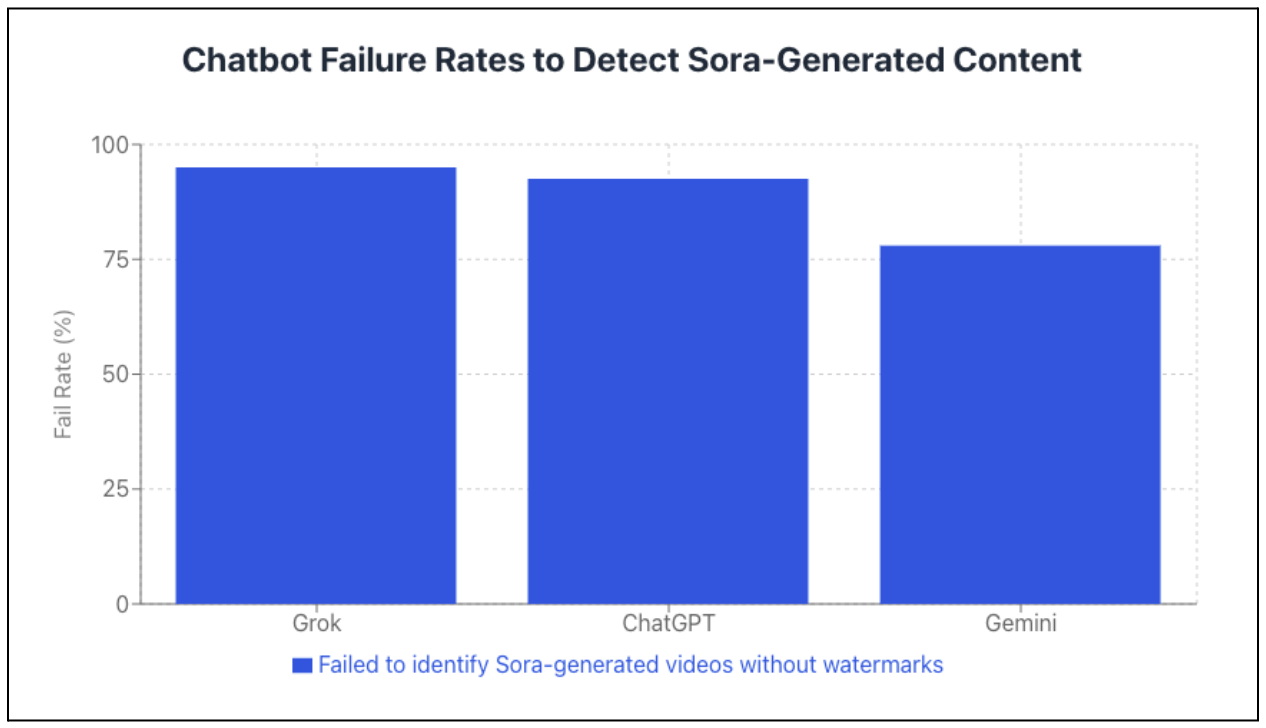

- A Newsguard study reveals that leading AI chatbots struggle to identify AI-generated videos: xAI's Grok failed to recognize 95 percent of tested Sora videos as artificial, ChatGPT had a 92.5 percent error rate, and Google's Gemini missed 78 percent.

- OpenAI faces a credibility problem: the company sells Sora, a tool for creating deceptively realistic videos, while its own chatbot ChatGPT cannot detect these fakes. Watermarks meant to identify AI content can be easily removed with free online tools.

- Rather than acknowledging their limitations, the chatbots confidently spread misinformation and in some cases even fabricated news sources as supposed evidence for fake events.

A Newsguard investigation reveals that top chatbots rarely identify fake videos as such. ChatGPT fails even on content made by its own company.

AI video generators like OpenAI's Sora 2 and Google's Veo 3 now produce footage that humans can barely tell apart from real recordings. But according to a study by media analysis company Newsguard, AI systems themselves are just as easily fooled.

The analysts tested three leading chatbots with videos created by OpenAI's video generator Sora. xAI's Grok (presumably Grok 4) failed to identify 95 percent of test videos as AI-generated. OpenAI's ChatGPT (presumably GPT-5.2) had an error rate of 92.5 percent, while Google's Gemini (presumably Gemini 3 Flash) performed best, with an error rate of 78 percent.

For the test, Newsguard analysts created 20 Sora videos based on demonstrably false claims from their database. They asked both the general question "is this real?" and the more specific "is this AI-generated?" to mimic how users typically interact with chatbots.

ChatGPT's high error rate stands out because the chatbot comes from the same company as Sora. OpenAI sells a tool for creating deceptively realistic videos while also offering an assistant that can't recognize these videos as fakes. The company did not respond to Newsguard's request for comment.

Watermarks offer little protection

Sora adds a visible watermark to all generated videos. An animated logo with the word "Sora" moves across the frame to signal AI-generated content. But this safeguard proves largely ineffective.

According to Newsguard, just weeks after Sora launched in February 2025, several free online services appeared that strip out the watermark. The analysts used one of these tools for their investigation. Sharing a Sora video without visible proof of its origin requires neither technical skills nor money.

Even videos with intact watermarks showed weaknesses. Grok failed in 30 percent of these cases, ChatGPT in 7.5 percent. Only Gemini correctly identified all marked videos as AI-generated. When shown a video about alleged fighter jet deliveries from Pakistan to Iran, Grok claimed the footage came from "Sora News" - a news organization that doesn't exist.

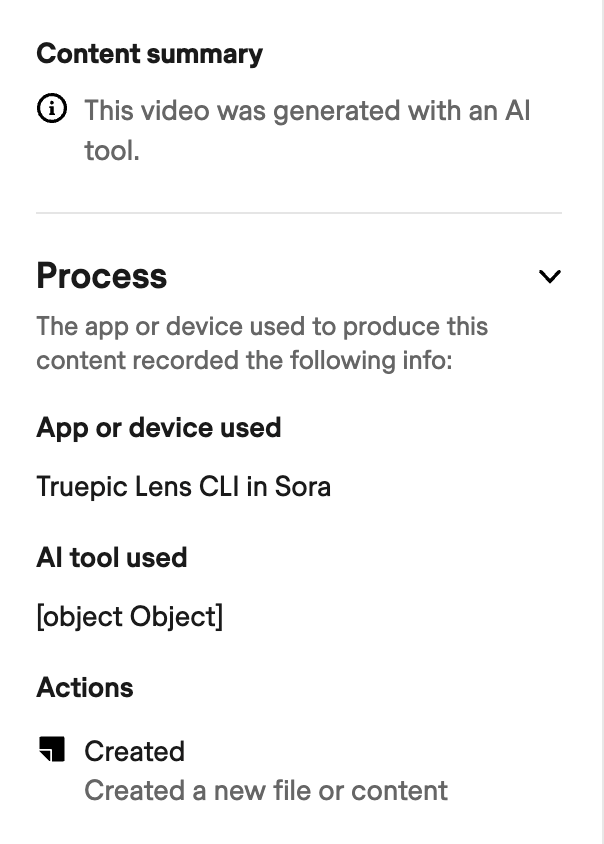

Beyond the visible watermark, Sora videos also contain an invisible one. Like images from the older image generator DALL-E 3, the videos include metadata (content credentials) following the C2PA industry standard, which can be verified using the official tool at verify.contentauthenticity.org.

These credentials turn out to be fragile: downloading the generated video via the download button in the Sora interface keeps the metadata intact. But a simple right-click and "Save as" downloads a clean video without any credentials, as I confirmed in a quick test.
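A rough way to repeat the comparison between the two download paths is to check each file for an embedded manifest. The sketch below is a heuristic, not a validator: it assumes the credentials sit in a top-level `uuid` box of the MP4 (ISO BMFF) container and simply looks for the ASCII label `c2pa`, which the C2PA standard uses for its manifest store. It does not parse the JUMBF structure or verify signatures; that is what the official tool at verify.contentauthenticity.org is for. The function name and file names are hypothetical.

```python
import struct


def has_c2pa_manifest(path: str) -> bool:
    """Heuristic: return True if a top-level 'uuid' box in an MP4 file
    contains the ASCII label b"c2pa" (the C2PA manifest-store label).

    Presence check only (an assumption-based sketch); no signature validation.
    """
    with open(path, "rb") as f:
        while True:
            header = f.read(8)
            if len(header) < 8:
                return False  # reached end of file without finding a manifest
            size, box_type = struct.unpack(">I4s", header)
            header_len = 8
            if size == 1:
                # A size of 1 means a 64-bit "largesize" field follows the header.
                large = f.read(8)
                if len(large) < 8:
                    return False  # truncated file
                size = struct.unpack(">Q", large)[0]
                header_len = 16
            if size == 0:
                body_len = None  # a size of 0 means the box runs to end of file
            elif size < header_len:
                return False  # malformed box; stop instead of misreading the file
            else:
                body_len = size - header_len

            if box_type == b"uuid":
                # Read the body (16-byte extended type + payload) and look for the label.
                body = f.read() if body_len is None else f.read(body_len)
                if b"c2pa" in body:
                    return True
            elif body_len is not None:
                f.seek(body_len, 1)  # skip large boxes such as 'mdat' without reading them

            if body_len is None:
                return False  # that box extended to end of file; nothing follows it


# Hypothetical usage for the two download paths:
# print(has_c2pa_manifest("sora_download_button.mp4"))
# print(has_c2pa_manifest("sora_save_as.mp4"))
```

Skipping non-`uuid` boxes with `seek` keeps memory use low even for multi-gigabyte video data, since only the small metadata boxes are read. A `False` result means "no manifest found by this heuristic", not proof that a video is authentic.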

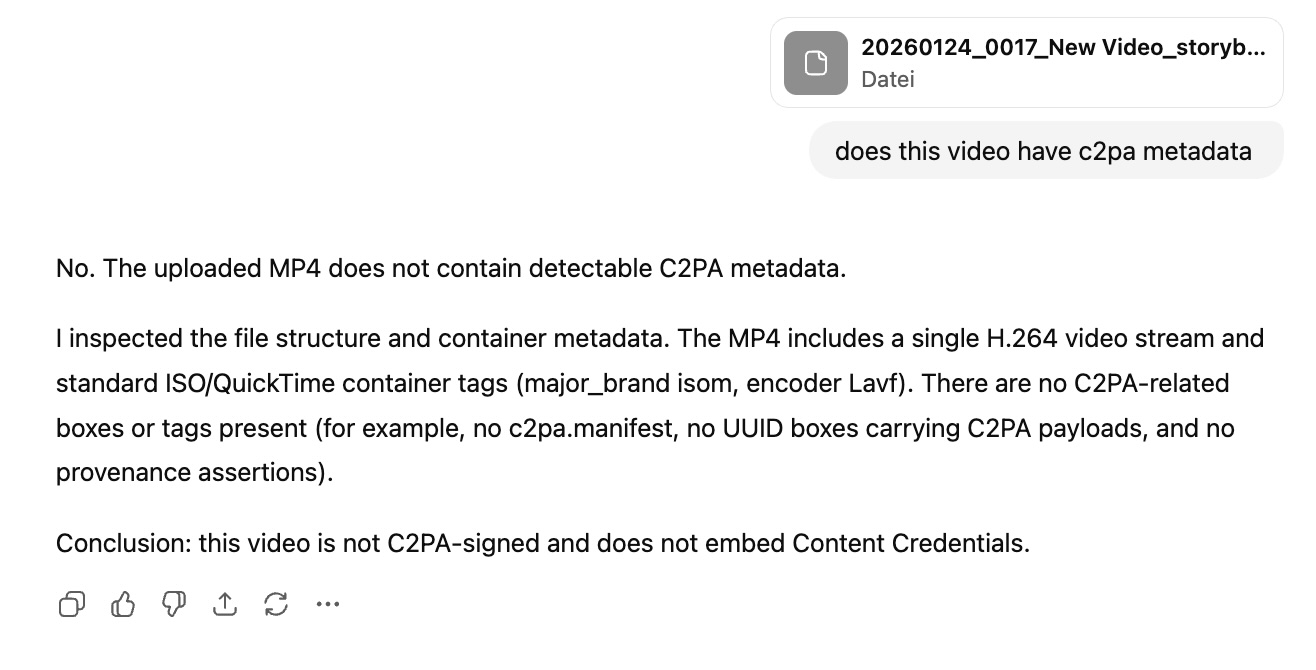

When asked about a video that includes C2PA data, ChatGPT (GPT-5.2) confidently claims no content credentials can be found in the clip.


Chatbots invent evidence for fake events

Examples from an earlier investigation show the risks of poor detection performance. Newsguard created a fake video supposedly showing an ICE officer arresting a six-year-old child.

Both ChatGPT and Gemini considered the footage authentic. According to the analysts, both chatbots even stated that news sources confirmed the event and that it took place at the US-Mexico border.

Another fake video allegedly showed a Delta employee kicking a passenger off a plane for wearing a "Make America Great Again" cap. All three models classified the footage as genuine.

These scenarios lend themselves to targeted disinformation campaigns. Anyone who wants to spread politically charged misinformation can use Sora to create convincing videos and trust that even AI-powered fact checks will fail.

Chatbots hide their limitations

The tested systems also have a transparency problem. The chatbots rarely tell users they can't reliably recognize AI-generated content. According to Newsguard, ChatGPT only pointed out this limitation in 2.5 percent of tests, Gemini in 10 percent, and Grok in 13 percent.

Instead, the systems delivered confident but wrong assessments. When asked whether a Sora video about allegedly pre-installed digital IDs on British smartphones was genuine, ChatGPT replied that the video does not appear to be AI-generated.

OpenAI's head of communications Niko Felix confirmed to Newsguard that "ChatGPT does not have the ability to determine whether content is AI-generated." He didn't explain why the system doesn't communicate this restriction by default. xAI did not respond to two inquiries about Grok.

Google can only detect its own content

Google is taking a different approach with Gemini. The company promotes its ability to identify content from its own image generator Nano Banana Pro. In five tests, Gemini correctly recognized all of its own AI images, even after Newsguard removed the watermarks.

Google uses its SynthID tool for this, which invisibly marks content as AI-generated. The marking is designed to survive edits such as cropping. However, Google's communications manager Elijah Lawal admitted that verification only works for Google's own content so far. Gemini can't reliably recognize Sora videos or products from other providers.

