Microsoft's Maia 200 AI chip claims performance lead over Amazon and Google

Microsoft has unveiled Maia 200, a chip built specifically for AI inference workloads. The company claims it delivers 30 percent better performance per dollar than the current-generation hardware in its data centers. The chip is manufactured on TSMC's 3-nanometer process, packs over 140 billion transistors, and carries 216 GB of high-speed memory.

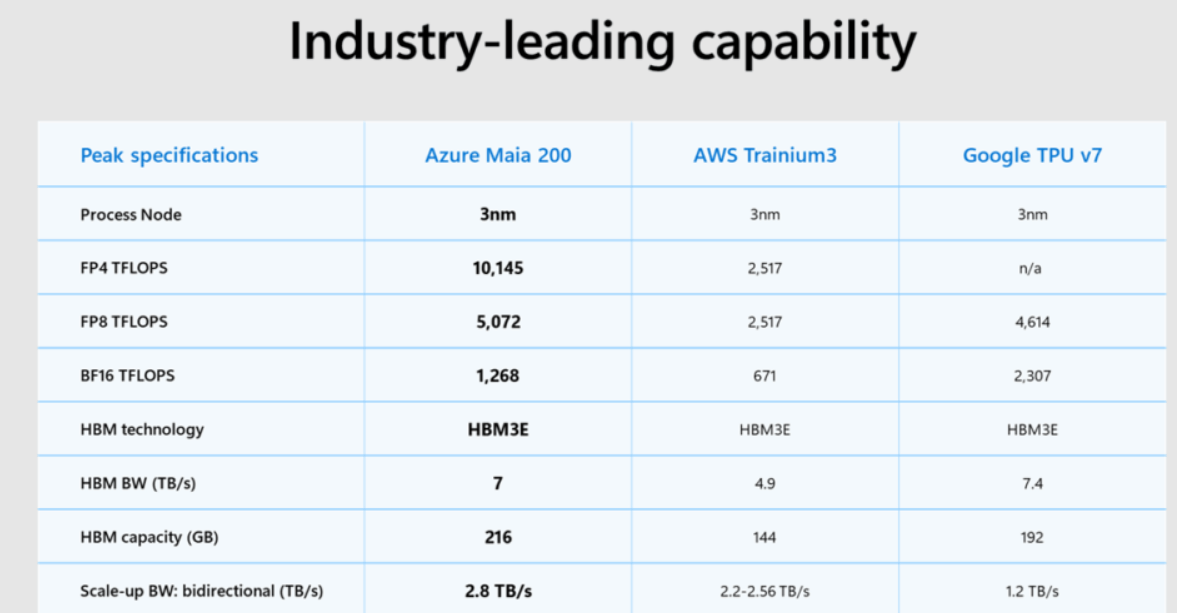

According to Microsoft, Maia 200 is now the most powerful in-house chip among the major cloud providers. The company claims it delivers three times the FP4 performance of Amazon's Trainium 3 and also outperforms Google's TPU v7 in FP8 calculations, though independent benchmarks have yet to verify these figures.

Microsoft says the chip already powers OpenAI's GPT-5.2 models and Microsoft 365 Copilot. Developers who want to try it can sign up for a preview of the Maia SDK. Maia 200 is currently available in Microsoft's Iowa data center, with Arizona coming next. Microsoft has published further technical details about the chip.