Google releases Gemini 3.1 Pro with improved reasoning capabilities

Key Points

- Google has released Gemini 3.1 Pro, an upgrade to its model family focused on improving logical reasoning capabilities.

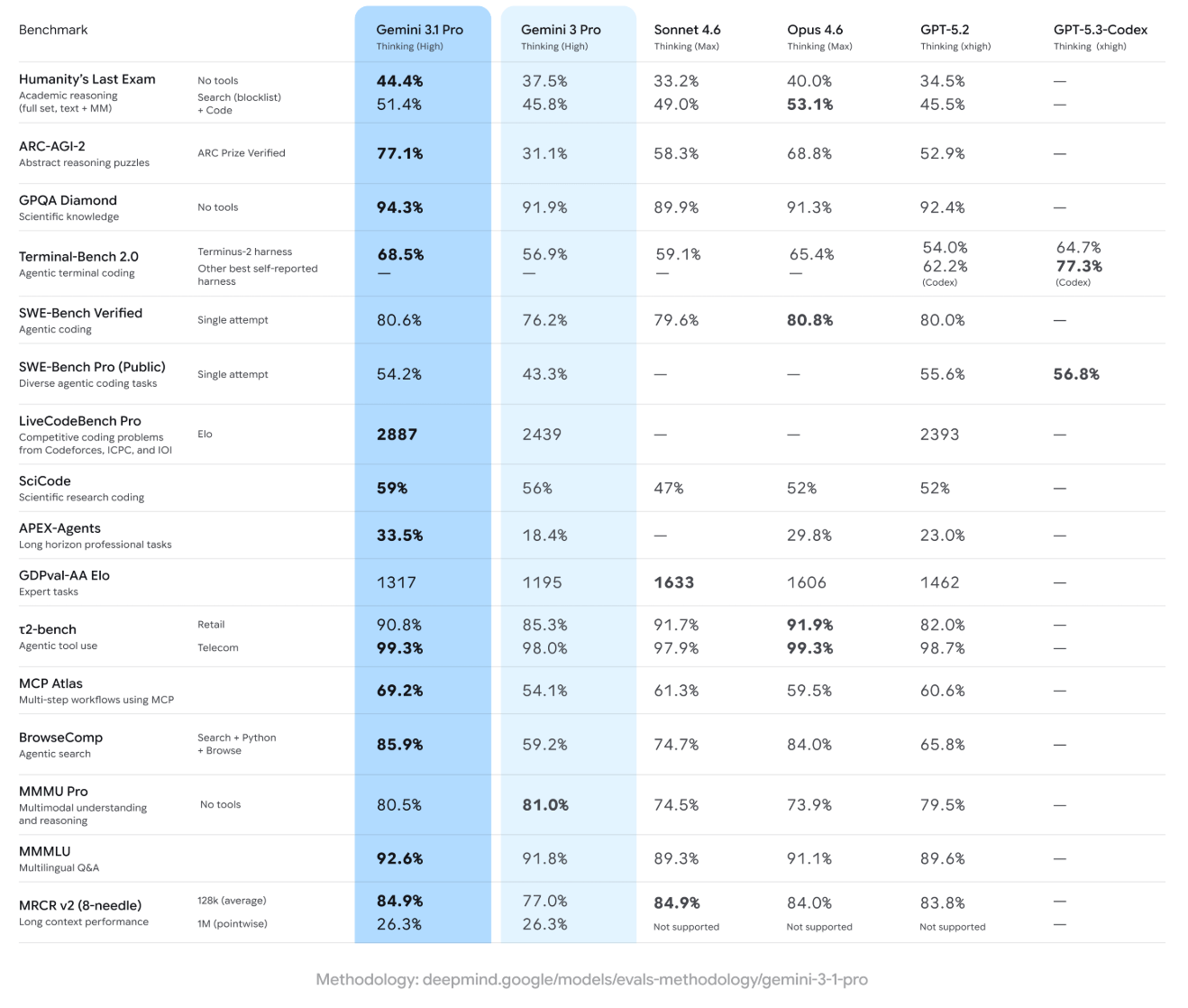

- On the ARC-AGI-2 benchmark for abstract logic tasks, Gemini 3.1 Pro scores 77.1 percent, more than doubling its predecessor Gemini 3 Pro (31.1 percent) and outperforming Anthropic's Opus 4.6 (68.8 percent) and OpenAI's GPT-5.2 (52.9 percent), according to Google.

- The model is now available as a preview through the Gemini API, Google AI Studio, Vertex AI, the Gemini app, and NotebookLM.

With Gemini 3.1 Pro, Google wants to improve the core intelligence of its model family. On a demanding reasoning benchmark, performance has more than doubled compared to its predecessor. But benchmarks are just that: benchmarks.

Google has unveiled Gemini 3.1 Pro, an upgrade to the Gemini 3 series that the company says represents a major leap in problem-solving capability. The model is now rolling out as a preview for developers, businesses, and end users.

Google describes 3.1 Pro as the upgraded core intelligence behind the recent advances in Gemini 3 Deep Think, which received an update a week earlier. Deep Think targets complex tasks in science, research, and engineering; 3.1 Pro is meant to bring the same gains to everyday use, the Gemini team writes in its blog post.

Google says 3.1 Pro uses advanced reasoning to bridge the gap between complex APIs and user-friendly design. As an example, the company points to a live aerospace dashboard where the model independently configured a public telemetry stream to visualize the orbit of the International Space Station.

Other demos show the model generating animated SVGs from text prompts, ready to embed on websites, and building entire websites from scratch, all entirely in code.

Reasoning performance more than doubles on ARC-AGI-2

The biggest jump shows up on the ARC-AGI-2 benchmark for abstract logic tasks: Gemini 3.1 Pro scores 77.1 percent, according to Google, more than double the 31.1 percent Gemini 3 Pro managed. Google says Anthropic's Opus 4.6 (68.8 percent) and OpenAI's GPT-5.2 (52.9 percent) also trail by a wide margin. Of course, other AI systems have posted even higher scores on this benchmark without fundamentally changing the AI landscape.

According to Google, 3.1 Pro also leads on most other benchmarks, including GPQA Diamond for scientific knowledge (94.3 percent) and several agentic benchmarks such as MCP Atlas (69.2 percent) and BrowseComp (85.9 percent). On SWE-Bench Verified for agentic coding, it lands just behind Opus 4.6, scoring 80.6 percent versus 80.8 percent. On LiveCodeBench Pro, a competitive coding benchmark, the model reaches an Elo score of 2,887, beating both Gemini 3 Pro (2,439) and GPT-5.2 (2,393).

That said, 3.1 Pro doesn't win everywhere. On the multimodal MMMU Pro, its predecessor Gemini 3 Pro actually edges out the new model, 81.0 percent to 80.5 percent. And on Humanity's Last Exam with tool support, Anthropic's Opus 4.6 takes the top spot at 53.1 percent. One common criticism of Google's current models is that they don't use tools as efficiently as OpenAI's and Anthropic's models.

As always, benchmarks only tell part of the story when it comes to real-world performance, especially with incremental updates like the jump from 3.0 to 3.1. The best way to test these models is with your own prompts, ideally ones where you know exactly what good output looks like and how previous models handled them. That makes it easy to spot improvements.
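A minimal sketch of that kind of personal test set might look like the following. It assumes you wrap each model in a plain function that takes a prompt and returns text; the names `ModelFn`, `TestCase`, and `compare_models` are hypothetical, and no particular SDK or API is assumed.

```python
from typing import Callable

# Hypothetical type: any function that sends a prompt to a model and returns its text.
# Wire it to whichever client you use; no specific SDK is assumed here.
ModelFn = Callable[[str], str]

# Hypothetical test case: a prompt plus a check you trust for "good output".
TestCase = tuple[str, Callable[[str], bool]]


def compare_models(cases: list[TestCase], old: ModelFn, new: ModelFn) -> dict[str, int]:
    """Count how many of your own known-answer prompts each model passes."""
    scores = {"old": 0, "new": 0}
    for prompt, is_good in cases:
        # Run the same prompt through both models and apply the same check.
        if is_good(old(prompt)):
            scores["old"] += 1
        if is_good(new(prompt)):
            scores["new"] += 1
    return scores
```

With stub models standing in for real API calls, `compare_models([("What is 17 * 23?", lambda out: "391" in out)], lambda p: "381", lambda p: "391")` returns `{"old": 0, "new": 1}`. Keeping the checks as plain functions means the same cases can be rerun unchanged whenever a new model version ships.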

Broad rollout with tiered pricing

Google is shipping 3.1 Pro across multiple platforms at once. Developers can access it through the Gemini API, in Google AI Studio, the Gemini CLI, the agent-based development platform Google Antigravity, and Android Studio. Enterprises get access via Vertex AI and Gemini Enterprise. End users can try it through the Gemini app and NotebookLM, though the latter is limited to Pro and Ultra subscribers.

API pricing scales with prompt length and matches Gemini 3 Pro's rates. Gemini is significantly cheaper than Anthropic's Opus models.

| Category | Prompts up to 200,000 tokens | Prompts over 200,000 tokens |
|---|---|---|
| Input | $2.00 / 1M tokens | $4.00 / 1M tokens |
| Output | $12.00 / 1M tokens | $18.00 / 1M tokens |
| Caching | $0.20 / 1M tokens | $0.40 / 1M tokens |
| Cache storage | $4.50 / 1M tokens per hour | $4.50 / 1M tokens per hour |
| Search | 5,000 prompts/month free, then $14.00 / 1,000 queries | 5,000 prompts/month free, then $14.00 / 1,000 queries |
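To make the tiers concrete, here is a rough per-request cost estimate using the input and output rates from the table above. It is a sketch under stated assumptions: the tier is picked by prompt length, the whole request is billed at that tier's rates, and caching, cache storage, and search charges are ignored. The function name `estimate_cost` is illustrative only.

```python
# Per-million-token rates in USD, taken from the table above.
# Assumption: the prompt length alone selects the tier, and both input and
# output tokens of that request are billed at the selected tier's rates.
TIER_THRESHOLD = 200_000

RATES = {
    "standard": {"input": 2.00, "output": 12.00},  # prompts up to 200,000 tokens
    "long": {"input": 4.00, "output": 18.00},      # prompts over 200,000 tokens
}


def estimate_cost(prompt_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request, ignoring caching and search."""
    if prompt_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    tier = "standard" if prompt_tokens <= TIER_THRESHOLD else "long"
    rates = RATES[tier]
    return (prompt_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000


print(f"{estimate_cost(100_000, 5_000):.2f}")  # prints 0.26
print(f"{estimate_cost(300_000, 5_000):.2f}")  # prints 1.29
```

Under these assumptions, tripling the prompt from 100,000 to 300,000 tokens raises the cost roughly fivefold, because crossing the 200,000-token threshold also doubles the input rate and raises the output rate.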

The model is still in preview, though. Google plans to keep refining it based on user feedback, particularly for what it calls "ambitious agentic workflows", before shipping a general availability release.
