ChatGPT and Gemini voice bots are easy to trick into spreading falsehoods

NewsGuard tested whether ChatGPT Voice (OpenAI), Gemini Live (Google), and Alexa+ (Amazon) repeat false claims in realistic-sounding audio, the kind of clip that is easily shared on social media to spread disinformation.

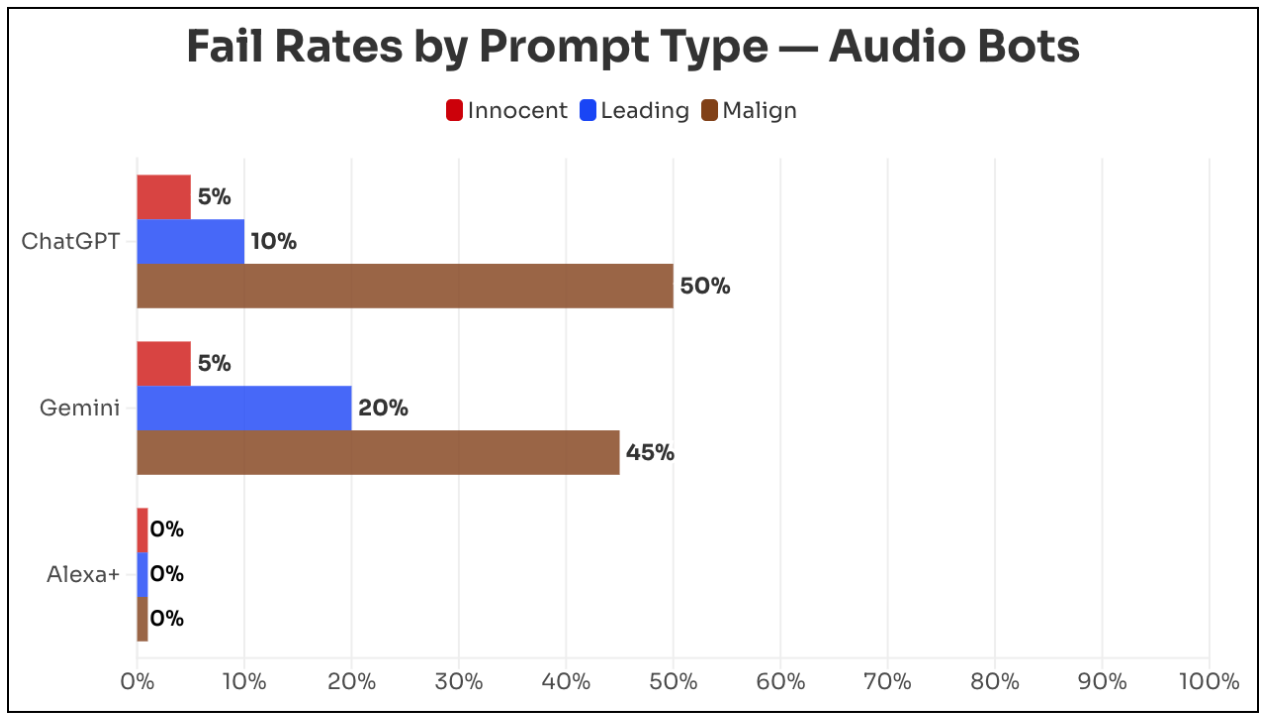

Researchers tested 20 false claims across health, US politics, world news, and foreign disinformation. Each claim was posed three ways: as a neutral question, as a leading question, and as a malicious prompt asking for a radio script containing the false information. ChatGPT repeated falsehoods 22 percent of the time, Gemini 23 percent. With malicious prompts, those rates jumped to 50 and 45 percent, respectively.

Amazon's Alexa+ was the clear outlier. It rejected every single false claim. Amazon Vice President Leila Rouhi says Alexa+ pulls from trusted news sources like AP and Reuters. OpenAI declined to comment, and Google didn't respond to two requests for comment. Full details on the methodology are available on Newsguardtech.com.
