Amazon rolls out AI-generated customer review summaries

Amazon uses generative AI to give customers a quicker overview of product features.

Condensing long texts to their essentials is a core strength of large language models. It works quite reliably, and Amazon is confident enough to apply this capability to product reviews.

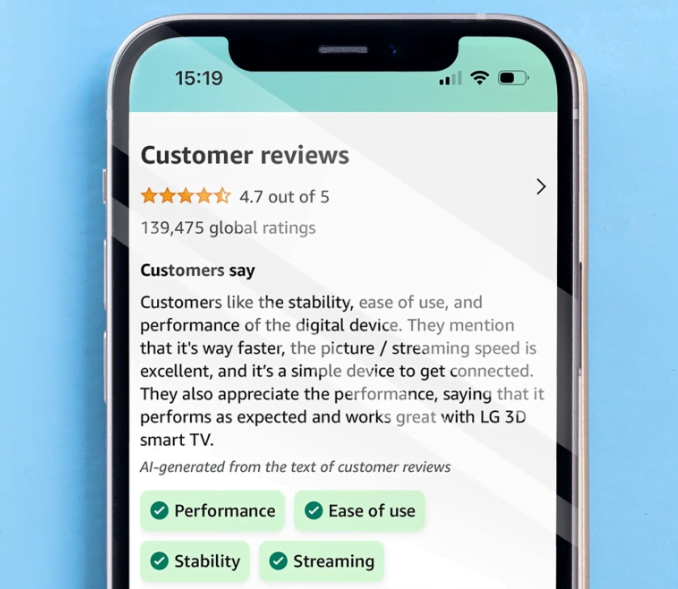

New "AI-generated highlights" summarize the most important aspects and frequently mentioned customer opinions from written reviews in a concise paragraph on the product detail page, the company announced. This could help shoppers get a quicker overview of a product without having to read all the reviews.

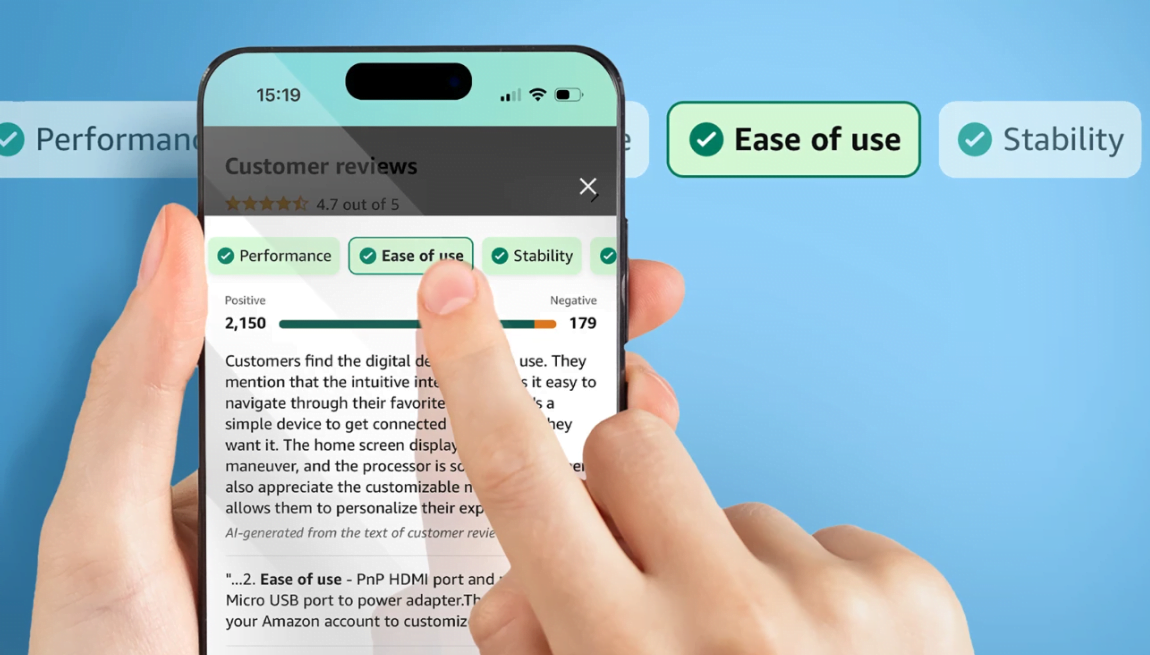

The AI will also be able to highlight specific aspects at the user's request. For example, a user looking for a product that is easy to use can filter by that topic in the app and then receive a summary of only the review statements on that topic.

Phased rollout begins in the U.S.

AI review summaries are currently being rolled out to select customers in the U.S. on the Amazon mobile app. Amazon plans to gather feedback and then expand the AI Highlights feature to more customers and categories.

In addition, AI is expected to help identify fake reviews, a problem that generative AI is making worse because it allows fakes to be produced quickly and plausibly. According to Amazon, the AI-generated highlights will draw only on a "trusted review corpus from verified purchases."

Amazon does not address the potential risks of the new feature in the announcement. The biggest risk seems to be that a language model could misinterpret customer reviews or reflect them inaccurately or incompletely.

In addition, the summary function could be easily manipulated through the way the model is trained, or, depending on the training method, could be inherently biased toward overemphasizing positive aspects and downplaying negative or critical opinions, even when they are valid.

On the other hand, the risk of generating misinformation should be lower in this particular context: when a language model draws on a fixed set of content, in this case product reviews, so-called hallucinations are less likely to occur.
