A new information-theory framework reveals when multi-agent AI systems truly work as a team

Multi-agent AI systems can outperform solo agents, but it's often unclear whether they're truly working together or just running side by side. A new framework from Northeastern University sets out to measure real teamwork in agentic AI systems.

Developed by Christoph Riedl, the framework uses information theory to detect when groups of agents develop abilities that go beyond what each one can do alone. It gives developers a way to check whether their AI teams are actually cooperating rather than merely running in parallel, a crucial question for complex tasks like software development and problem-solving.

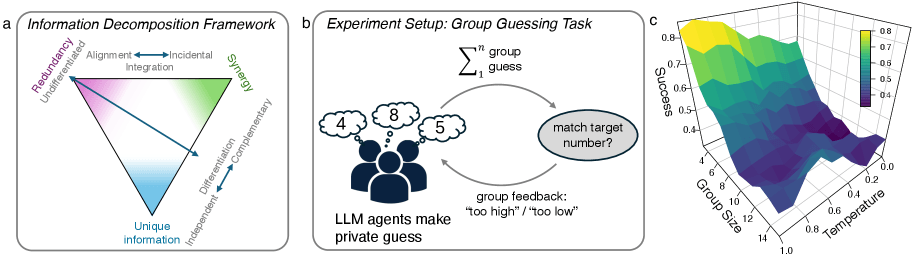

The framework breaks down cooperation into three types: agents acting identically, complementing each other, or even working at cross-purposes. The key is whether they generate information that only appears when they collaborate.

At the core of the method are Partial Information Decomposition (PID) and Time-Delayed Mutual Information (TDMI). PID splits the information that several sources carry about a target into redundant, unique, and synergistic parts. TDMI checks how well an agent's current state predicts the system's future. Combined, these tools let researchers measure synergy: information that only emerges when agents interact.
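To make the redundant/unique/synergistic split concrete, here is a minimal Python sketch of a two-source PID. It assumes Williams and Beer's I_min as the redundancy measure, which is one common choice; the article does not say which measure Riedl's framework uses. The function names and the toy distributions are illustrative, not taken from the paper.

```python
from collections import defaultdict
from itertools import product
from math import log2

def marginal(joint, idx):
    """Marginalize a joint distribution {(x1, x2, y): p} onto positions idx."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in idx)] += p
    return dict(out)

def mutual_information(joint, src, tgt):
    """I(src; tgt) in bits; src and tgt are tuples of index positions."""
    p_st = marginal(joint, src + tgt)
    p_s, p_t = marginal(joint, src), marginal(joint, tgt)
    n = len(src)
    return sum(p * log2(p / (p_s[k[:n]] * p_t[k[n:]]))
               for k, p in p_st.items() if p > 0)

def specific_information(joint, src, y):
    """Information that source `src` carries about the single outcome Y = y."""
    p_sy = marginal(joint, src + (2,))
    p_s = marginal(joint, src)
    p_y = marginal(joint, (2,))[(y,)]
    total = 0.0
    for k, p in p_sy.items():
        if k[-1] != y or p == 0:
            continue
        p_y_given_s = p / p_s[k[:-1]]
        p_s_given_y = p / p_y
        total += p_s_given_y * log2(p_y_given_s / p_y)
    return total

def pid(joint):
    """Two-source PID with I_min redundancy (an assumed measure, see lead-in)."""
    p_y = marginal(joint, (2,))
    redundancy = sum(
        p * min(specific_information(joint, (0,), y),
                specific_information(joint, (1,), y))
        for (y,), p in p_y.items()
    )
    i1 = mutual_information(joint, (0,), (2,))
    i2 = mutual_information(joint, (1,), (2,))
    i12 = mutual_information(joint, (0, 1), (2,))
    return {
        "redundant": redundancy,
        "unique_1": i1 - redundancy,
        "unique_2": i2 - redundancy,
        "synergistic": i12 - i1 - i2 + redundancy,
    }

# Toy case 1: Y = X1 XOR X2. Neither source alone says anything about Y.
xor_joint = {(a, b, a ^ b): 0.25 for a, b in product((0, 1), repeat=2)}
# Toy case 2: both sources are identical copies of Y.
copy_joint = {(a, a, a): 0.5 for a in (0, 1)}

print(pid(xor_joint))
print(pid(copy_joint))
```

In the XOR case the full bit of information lands in the synergistic term, while in the copy case it lands in the redundant term. That mirrors the article's distinction between agents that complement each other and agents that act identically.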

Personas and strategic thinking drive real teamwork

To put the framework to the test, Riedl set up a guessing game with groups of ten AI agents. The agents couldn't communicate directly. Their task: guess numbers that add up to a hidden target, with feedback limited to "too high" or "too low."
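The game loop described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Riedl's setup: the real agents are language models, whereas `step_policy` here is a hypothetical hand-written rule, and the starting guesses, step sizes, and round limit are invented for illustration.

```python
def group_feedback(guesses, target):
    """Return the only signal the group receives about the sum of its guesses."""
    total = sum(guesses)
    if total > target:
        return "too high"
    if total < target:
        return "too low"
    return "correct"

def step_policy(guess, feedback, step):
    """Hypothetical agent rule: move own guess by `step` against the feedback."""
    if feedback == "too high":
        return max(0, guess - step)
    if feedback == "too low":
        return guess + step
    return guess

def play(target, steps, start=0, max_rounds=50):
    """Run the game; return (round solved or None, final guesses)."""
    guesses = [start] * len(steps)
    for round_no in range(1, max_rounds + 1):
        feedback = group_feedback(guesses, target)
        if feedback == "correct":
            return round_no, guesses
        # Agents never see each other's guesses, only the shared feedback.
        guesses = [step_policy(g, feedback, s) for g, s in zip(guesses, steps)]
    return None, guesses

# Ten identical agents move in lockstep, so the sum changes by 10 per round.
print(play(target=37, steps=[1] * 10))
# A crude stand-in for differentiated roles: one agent adjusts, nine hold.
print(play(target=37, steps=[1] + [0] * 9, start=3))
```

With these toy rules, the identical agents overshoot and oscillate between 30 and 40 without ever hitting 37, while the differentiated group reaches the target. It is only an illustration of why acting identically can be a poor strategy in this kind of game.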

Riedl tried three setups: a basic version with no special instructions, a version where each agent had a unique personality, and a third where agents were prompted to consider what the others might do.

Only the last setup produced real teamwork. When agents were prompted to consider each other's strategies, they took on specialized roles and divided up the problem. Their strategies started to complement each other.

For example, one agent justified its decision: "Because it's possible others might go for 4 or 5 (the absolute lower bound or just above the last 'too low'), and someone else might go for 7 or 8, I stick with the most efficient: 6." Another agent in the same group deliberately chose 8, explaining, "If anyone else in the group is feeling feisty and picks 9 or 10, my 8 will help cover the lower part safely," Riedl writes.

Riedl found that the most successful teams combined diverse, complementary strategies with a clear focus on shared goals. It’s this balance between creativity and alignment that led to the highest overall performance.

Team skills vary across language models

Not all language models are equally good at teamwork. GPT-4.1 agents consistently developed effective team strategies, while smaller Llama-3.1-8B models struggled: only about one in ten Llama teams solved the task. The smaller models sometimes managed to coordinate, but rarely showed real division of labor. This points to the importance of strategic thinking about teammates for strong AI collaboration.

In Riedl's research, larger models consistently outperformed smaller ones at team-based tasks, which runs counter to recent advice from Nvidia researchers who advocate for using many small models to save resources.

The study also shows the value of prompt engineering: assigning agents distinct personalities and prompting them to consider each other's actions leads to better teamwork.

With tools like OpenAI's AgentKit making multi-agent collaboration more accessible, this framework could help teams build more effective AI systems. For now, though, applying it in practice remains challenging.
