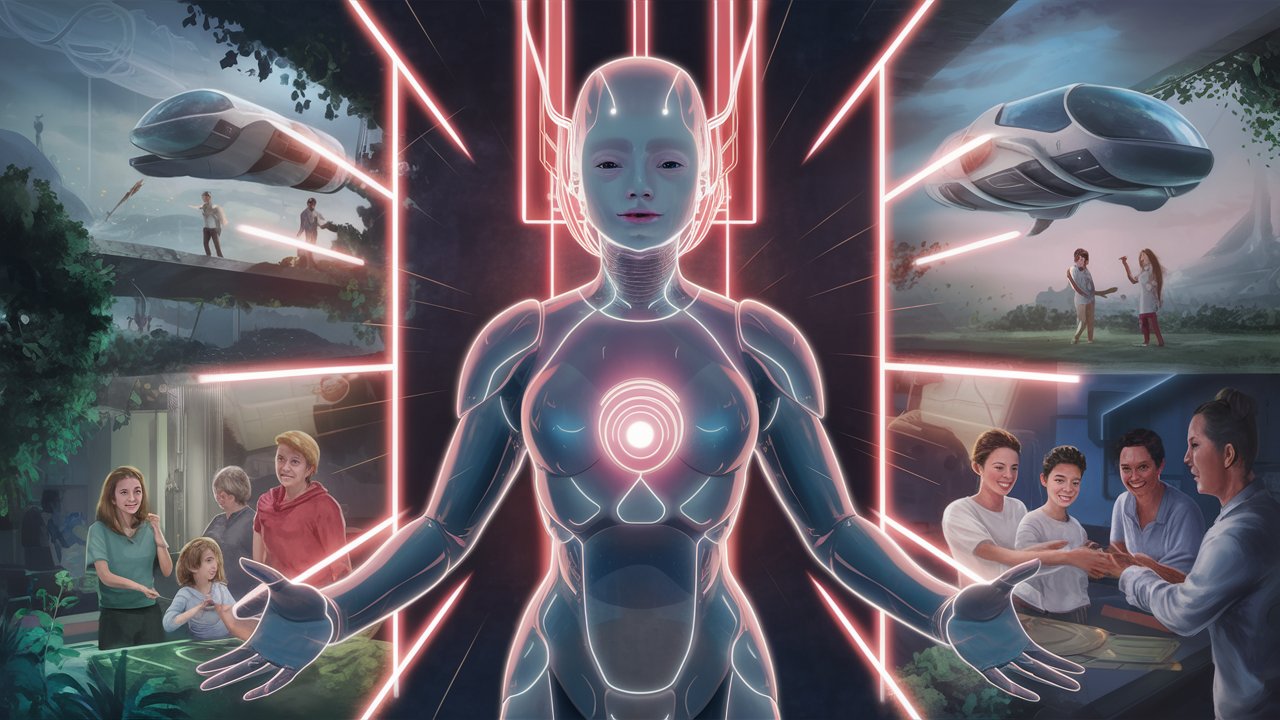

AGI could end humanity in more subtle ways than turning us into paperclips

Philosopher Nick Bostrom, known for his warnings about the existential risks of artificial intelligence, is publishing a new book on AI called "Deep Utopia: Life and Meaning in a Solved World."

This time, instead of focusing on dystopian scenarios, Bostrom explores a future in which superintelligent machines have solved all problems and conquered all diseases.

In the past, Bostrom became known in professional circles for his paperclip example, which describes a superintelligent AI system tasked with producing as many paperclips as possible.

The AI interprets its task literally, without ethical values, and converts more and more of the Earth's resources into paperclips until it has taken over the entire surface of the Earth and beyond.

This example shows how an AI given a seemingly simple task can still produce disastrous results if it is not aligned with human interests.

AGI might be too good for humanity

In his new book, Bostrom takes a different approach. He sees strong drivers for the further development of AI, including enormous economic benefits, scientific advances, new medicines, clean energy sources, and military incentives.

The book explores what meaning life might have in such a techno-utopia, and asks whether it might be rather hollow.

"We could have this superintelligence and it could do everything: Then there are a lot of things that we no longer need to do and it undermines a lot of what we currently think is the sort of be all and end all of human existence," Bostrom says in an interview with Wired. Still, he remains optimistic.

When asked about the existential risks of AI, the topic he originally focused on, Bostrom now says that the discussion is "all over the place" right now.

He acknowledges that there are immediate issues that deserve attention, such as discrimination, privacy, and intellectual property. But despite decades of research in the field, he still feels "very in the dark" and doesn't have a clear position.

"To me it seems clear that it’s just very complex and hard to figure out what actually makes things better or worse in particular dimensions," Bostrom says.

The Future of Humanity Institute at Oxford University, which Bostrom founded, was recently closed due to disputes with the university bureaucracy.

For now, Bostrom plans to continue working on his topics as a "free man" without a set agenda. It looks like he's going to have a good time thanks to AGI, with or without AGI.
