AI coding tools hurt learning unless you ask why, Anthropic study finds

Key Points

- According to an Anthropic study, software developers who learn new programming skills with AI assistance perform worse on knowledge tests than a control group working without AI, and they do not finish significantly faster.

- The study shows that learning outcomes depend heavily on how developers use AI: those who delegate tasks entirely to AI learn almost nothing, while those who ask targeted comprehension questions still learn effectively.

- Researchers caution that critical skills like code review could deteriorate over time if developers rely too heavily on AI during their skill development phase.

Developers who learn new programming skills with AI assistance score significantly worse on knowledge tests. A new Anthropic study raises concerns about pushing AI integration too hard in the workplace.

Software developers who rely on AI assistance when learning a new programming library develop less understanding of the underlying concepts, according to a new study by AI company Anthropic.

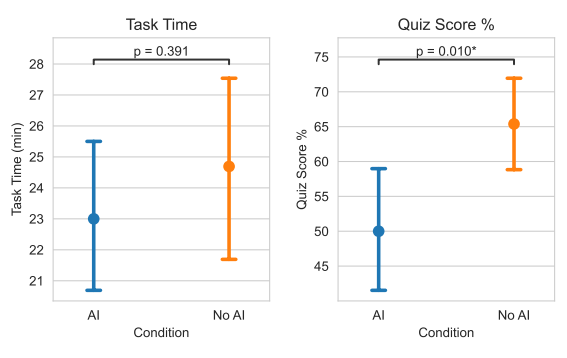

The researchers recruited 52 mainly junior software developers who had worked regularly with Python for at least a year and had experience with AI assistants but were unfamiliar with the Trio library. Participants were randomly split into two groups: one had access to a GPT-4o-based AI assistant, while the control group only worked with documentation and web search. Both groups solved two programming tasks with the Trio library as quickly as possible.

All participants then completed a knowledge test on the concepts used. Participants with AI access scored 17 percent worse than the control group. AI use also didn't save significant time on average.

"Our results suggest that incorporating AI aggressively into the workplace, particularly with respect to software engineering, comes with trade-offs," write researchers Judy Hanwen Shen and Alex Tamkin.

How you use AI determines how much you learn

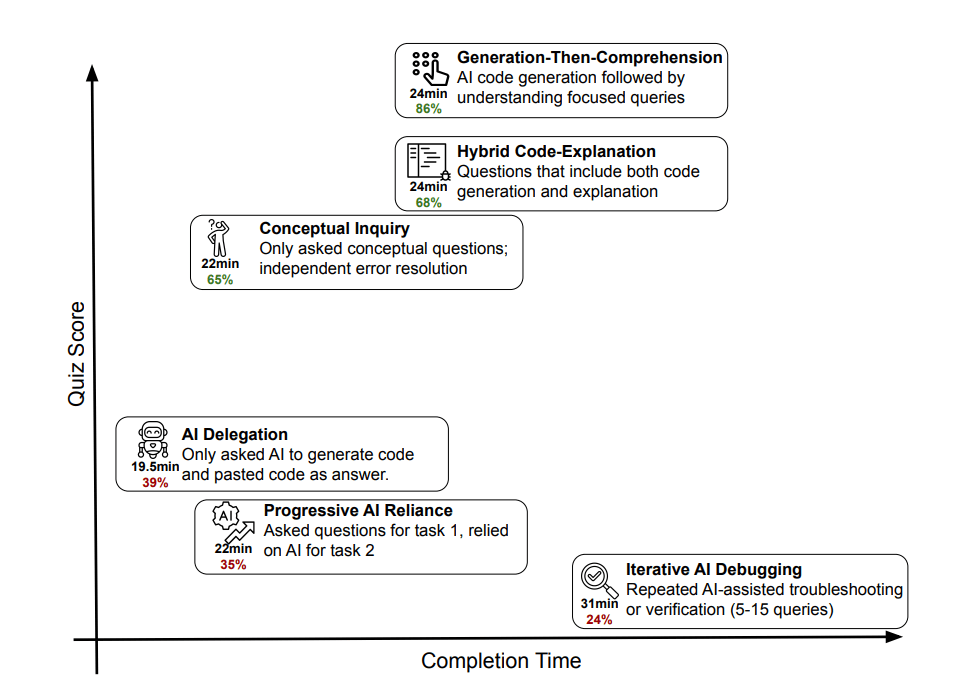

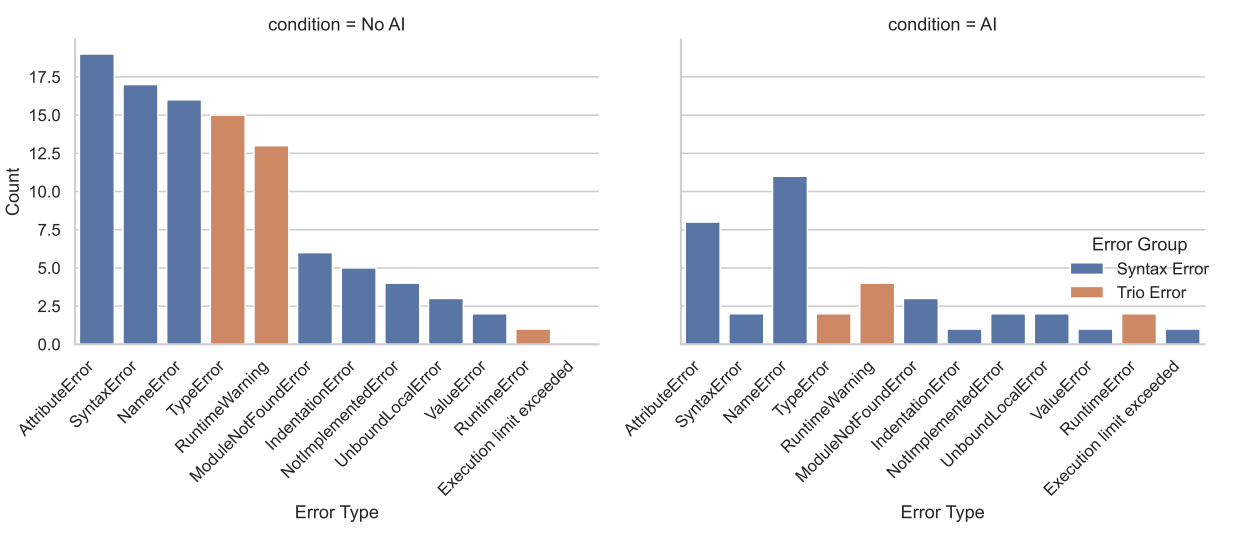

How developers interact with AI makes a big difference in learning outcomes. A qualitative analysis of screen recordings from 51 participants revealed six distinct interaction patterns. Three led to poor test results, with quiz scores between 24 and 39 percent.

Those who completely delegated programming tasks to the AI finished fastest but only scored 39 percent on the quiz. Participants who initially worked on their own but increasingly relied on AI-generated solutions performed similarly poorly. The worst learners repeatedly used AI for debugging without understanding the errors themselves.

Three other patterns preserved learning, with quiz scores between 65 and 86 percent. The most successful strategy was letting the AI generate code, then asking specific follow-up questions. Requesting explanations alongside code generation also worked well, as did using AI exclusively for conceptual questions.

Too much chatting kills productivity

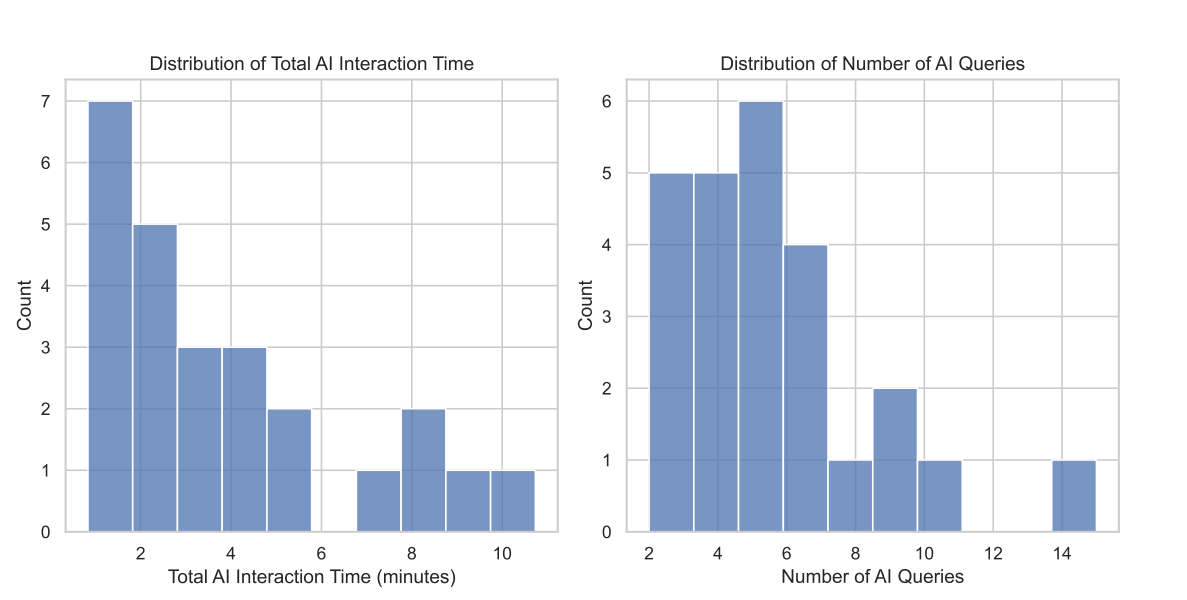

Unlike previous studies on AI-assisted programming, the researchers found no productivity gains. AI users weren't statistically faster. The qualitative analysis explains why: some participants spent up to eleven minutes just interacting with the AI assistant, for example when crafting prompts.

Only about 20 percent of participants in the AI group used the assistant exclusively for code generation. This group was actually faster than the control group but achieved the worst learning results. The remaining participants asked additional questions, requested explanations, or spent time understanding the generated solutions. The researchers suspect AI is more likely to bring significant productivity gains for repetitive or familiar tasks.

The results seem to contradict previous studies that found significant productivity gains from AI assistance. But Anthropic's researchers explain that earlier studies measured productivity on tasks for which participants already had the necessary skills. The current study looked at what happens when people learn something new.

Making mistakes helps people learn—AI prevents that

The control group, which had no AI access, made more mistakes and had to work through errors three times more often than the AI group. These errors forced participants to think critically about the code. This "getting painfully stuck" on a problem may also be important for building competence, the researchers say.

The biggest differences in the knowledge test appeared in debugging questions. The control group experienced more Trio-specific errors during the task, such as runtime warnings for unexpected coroutines. These experiences apparently helped them understand the core concepts.
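For readers unfamiliar with this class of error, the sketch below reproduces one common variant using only Python's standard library rather than Trio. Calling an async function without `await` creates a coroutine object whose body never runs, and Python emits a `RuntimeWarning` when that object is discarded. The names `fetch_value` and `call_without_await` are hypothetical, and whether this is the exact warning the study participants ran into is an assumption.

```python
import gc
import warnings


async def fetch_value():
    """Hypothetical coroutine function standing in for an async library call."""
    return 42


def call_without_await():
    """Call the coroutine function without awaiting it and return any RuntimeWarning messages."""
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")  # record every warning, even repeated ones
        coro = fetch_value()  # creates a coroutine object; the function body does not run
        del coro              # discarding it un-awaited triggers the warning
        gc.collect()          # forces finalization on interpreters without reference counting
    return [str(w.message) for w in caught if issubclass(w.category, RuntimeWarning)]


messages = call_without_await()
print(messages)  # the recorded message should say the coroutine was never awaited
```

Working out why the function "did nothing" and what the warning means is the kind of debugging experience the control group had more of.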

Skilled humans remain essential

The researchers warn about consequences for safety-critical applications in particular. If humans are supposed to check and debug AI-generated code, they need the right skills. But those skills could atrophy if AI use gets in the way of skill development.

"Our findings suggest that AI-enhanced productivity is not a shortcut to competence and AI assistance should be carefully adopted into workflows to preserve skill formation," the researchers summarize. The key to maintaining learning ability lies in cognitive effort: developers who use AI only for conceptual questions or request explanations can keep learning effectively.

The study only looked at a one-hour task with a chat-based interface. Agent-based AI systems like Claude Code, which require even less human involvement, could make the negative effect on skill development worse. Whether similar effects occur in other areas of knowledge work like writing or design hasn't been studied, but Anthropic is already working on automating these non-coding jobs through tools like Claude Cowork.

Still, it's worth noting that Anthropic published a study whose results could potentially hurt its own business model. The company makes money from AI assistants like Claude, designed to help people at work. That the company's research department can openly warn about downsides of these products isn't a given in the tech industry, especially these days.
