Alibaba launches Qwen3-Max, its largest and most capable AI model to date

Alibaba has released Qwen3-Max, the biggest and most capable AI model in its lineup. The new model is built for real-world software development and automation, with major performance upgrades across the board.

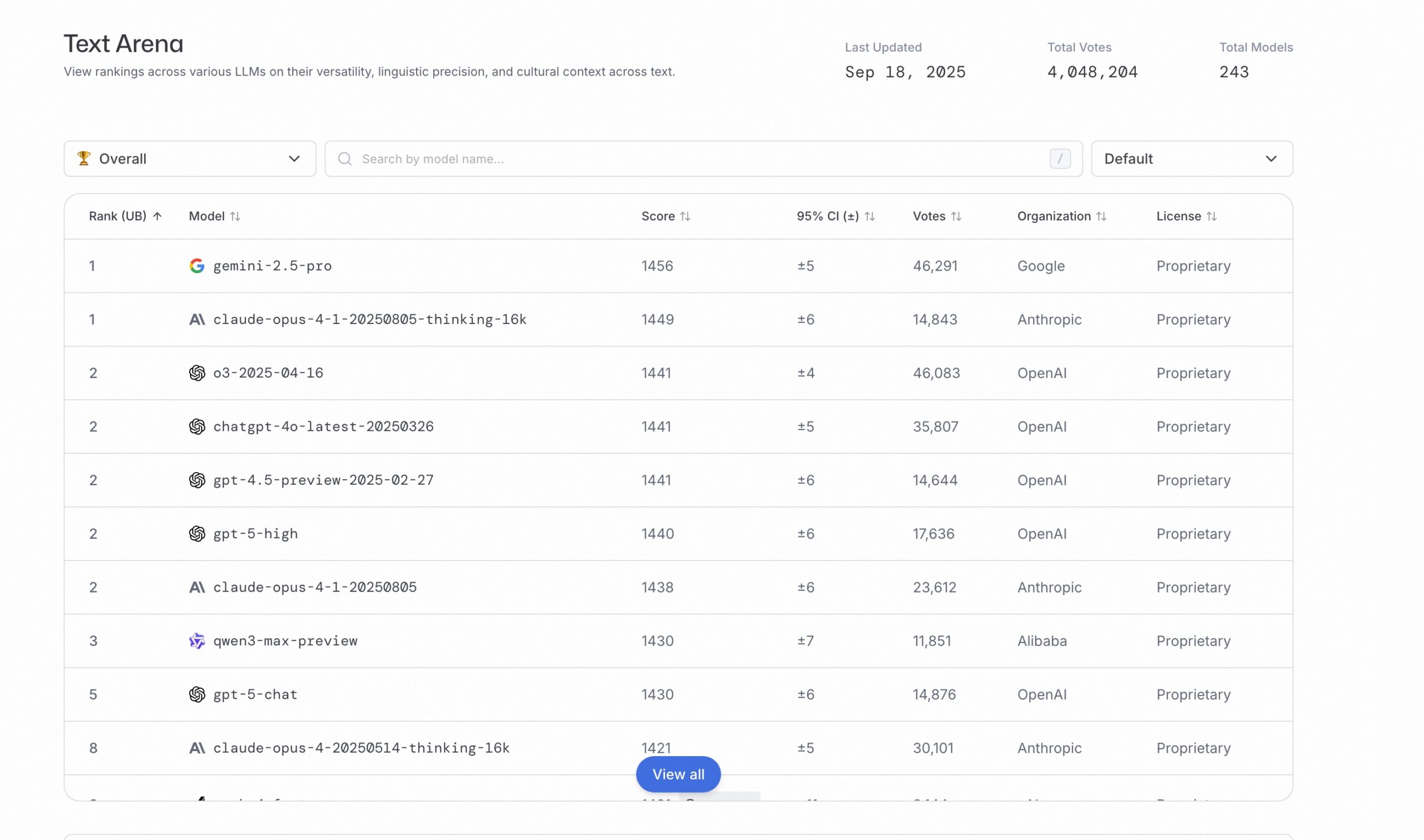

Qwen3-Max comes with more than one trillion parameters and was trained on 36 trillion tokens. A preview version called Qwen3-Max-Instruct, launched earlier this month, has already reached third place on the Text Arena Leaderboard, ahead of GPT-5-Chat, the variant of OpenAI's GPT-5 that runs with reasoning set to low.

Qwen3-Max uses the same architecture as the Qwen3 series, first announced in April, but scales it up to over a trillion parameters. The model relies on a Mixture of Experts (MoE) approach, which means only a subset of its parameters is active for each token during inference.
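To make the Mixture of Experts idea concrete, here is a minimal sketch of generic top-k routing for a single token. It is not Qwen3-Max's actual router: the eight toy experts, `top_k=2`, the dot-product router, and all function names are illustrative assumptions. The key point it shows is that only the selected experts are evaluated, so most of the model's weights sit idle for any given token.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(token, experts, router_weights, top_k=2):
    """Route one token to its top_k experts and mix their outputs (illustrative only).

    token: list of floats (the token's hidden vector)
    experts: list of callables, each mapping a vector to a vector of the same length
    router_weights: one weight vector per expert, same length as token
    """
    # Router score per expert: dot product of the token with that expert's weights.
    scores = [sum(w * x for w, x in zip(ws, token)) for ws in router_weights]
    # Keep only the top_k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Gate values are a softmax over the chosen experts' scores only.
    gates = softmax([scores[i] for i in chosen])
    output = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        expert_out = experts[i](token)
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


# Usage with 8 hypothetical toy experts; `calls` records which experts actually ran.
calls = []


def make_expert(i):
    def expert(v):
        calls.append(i)
        return [(i + 1) * x for x in v]  # toy expert: scales the input by i + 1
    return expert


experts = [make_expert(i) for i in range(8)]
router_weights = [[float(i), 0.0] for i in range(8)]  # expert i scores i * token[0]
out, chosen = moe_layer([1.0, 2.0], experts, router_weights, top_k=2)
print(chosen, sorted(calls))  # [7, 6] [6, 7]
```

Only two of the eight experts run here; production MoE models use many more experts and learned router weights, but the select-then-mix pattern is the same.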

30 percent jump in training efficiency

Alibaba says the training process for Qwen3-Max was unusually stable, with a smooth loss curve and no sudden spikes, rollbacks, or major adjustments. Optimized parallelization made training Qwen3-Max-Base 30 percent more efficient compared to Qwen2.5-Max-Base.

For long-context training, the team used new techniques that tripled throughput and made it possible to handle input sequences up to one million tokens. New tools for automatic monitoring and recovery also cut downtime from hardware failures to just a fifth of what was seen with the previous generation, according to Alibaba.

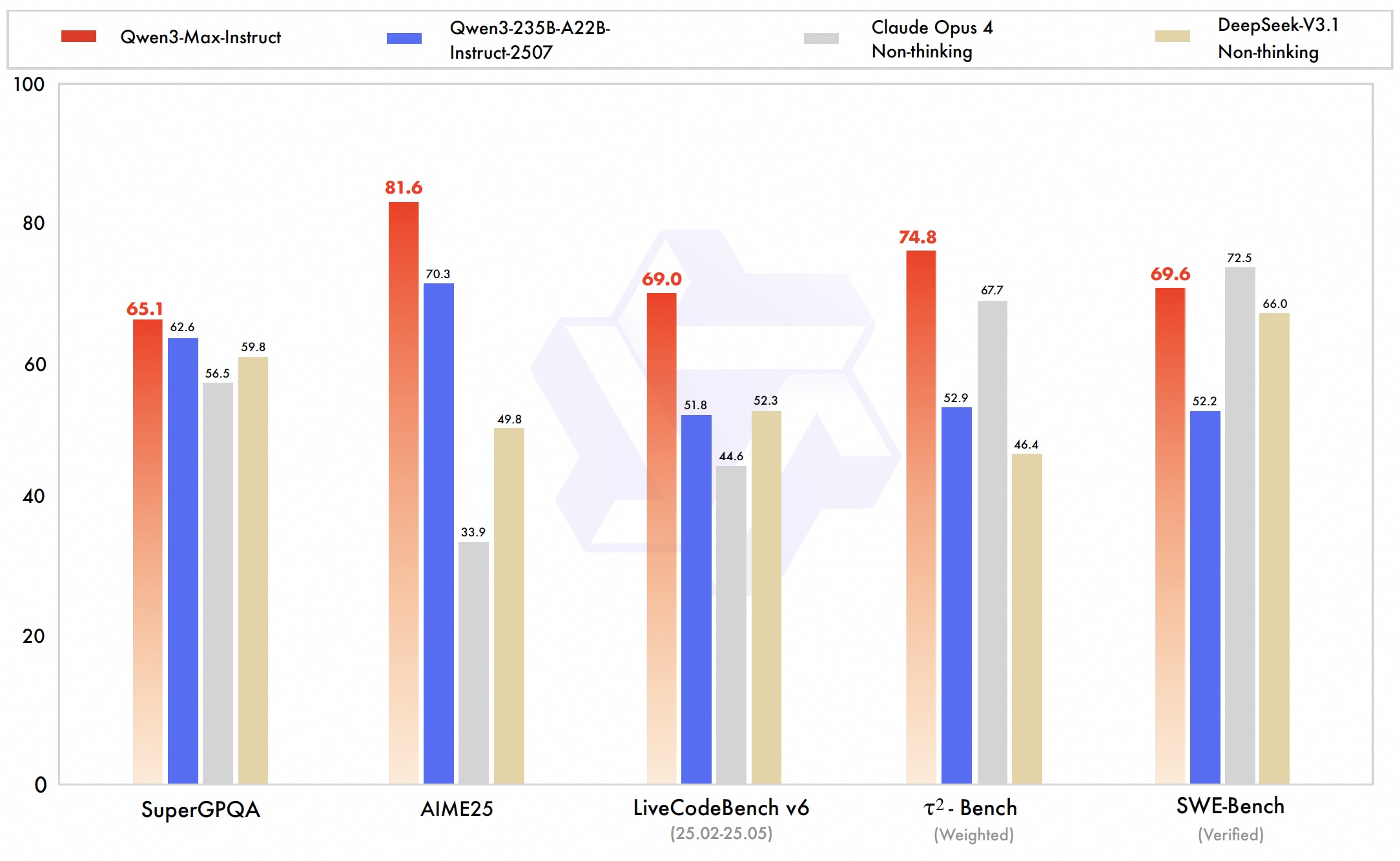

The Qwen team reports that Qwen3-Max-Instruct posted top scores across a wide range of benchmarks, including knowledge, reasoning, programming, instruction following, human preference alignment, agent tasks, and multilingual understanding.

The biggest gains are in programming and agent capabilities, in line with the model's focus on real-world software development and automation. On SWE-Bench Verified, a benchmark for fixing real-world software bugs, Qwen3-Max-Instruct scored 69.6. Alibaba says this puts it among the top-performing models available.

On Tau2-Bench, which tests how well models can call external tools and handle complex workflows, Qwen3-Max-Instruct scored 74.8, ahead of Claude Opus 4 and DeepSeek V3.1.

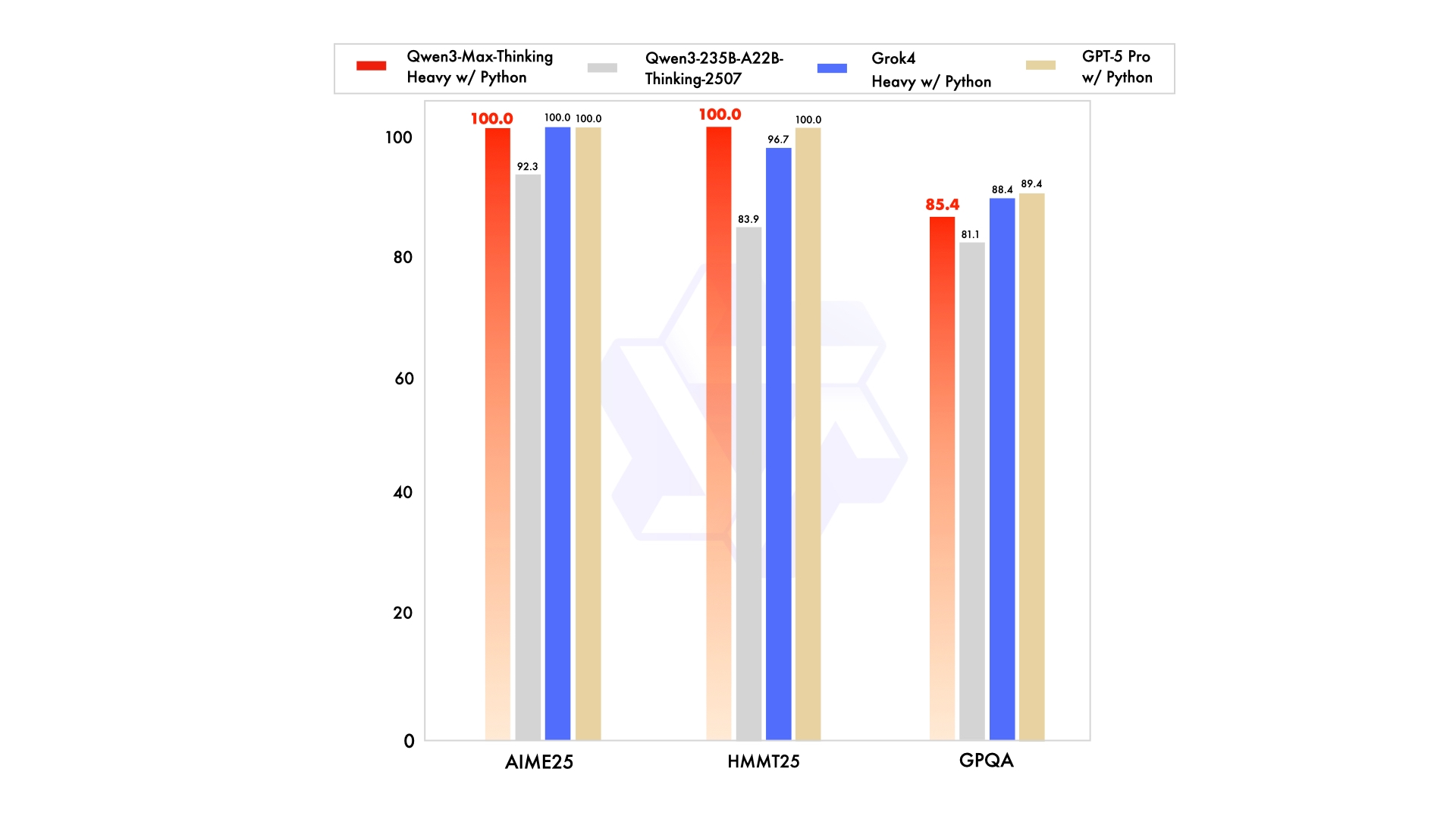

A reasoning-focused variant, Qwen3-Max-Thinking, is still in training but has already achieved perfect scores on the AIME 25 and HMMT math benchmarks, matching results from GPT-5 Pro and Grok 4. To get there, the model uses a code interpreter and additional compute at inference time (test-time compute).

Test-time compute lets the model generate several candidate solutions in parallel and pick the best one. AIME (the American Invitational Mathematics Examination) is a demanding math competition for high school students and is often used as a benchmark for logical reasoning. Alibaba plans to release Qwen3-Max-Thinking soon.
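The best-of-N idea described above can be sketched as follows. This is a generic illustration, not Alibaba's published method: the toy "problem" (guessing the square root of 144), the `generate_candidate` and `score` functions, and the value of `n` are all hypothetical. In a real system each candidate would be a full model-generated solution, and the scorer might be a reward model or a check run in a code interpreter.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def generate_candidate(problem, seed):
    """Hypothetical stand-in for one sampled model solution: a guess at sqrt(problem)."""
    rng = random.Random(seed)
    return rng.randint(0, 20)


def score(problem, candidate):
    """Hypothetical verifier: 0 means exactly correct, more negative means further off."""
    return -abs(candidate * candidate - problem)


def best_of_n(problem, n=8):
    """Sample n candidates in parallel and return the highest-scoring one plus all candidates."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        # pool.map keeps results in seed order, so runs are reproducible.
        candidates = list(pool.map(lambda s: generate_candidate(problem, s), range(n)))
    best = max(candidates, key=lambda c: score(problem, c))
    return best, candidates


best, candidates = best_of_n(144, n=16)
print(best, score(144, best))
```

Because the first `n` seeds are reused, raising `n` can only improve or tie the best score in this sketch; the trade-off is that compute grows linearly with `n`.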

Availability and API access

Qwen3-Max-Instruct is available in Qwen Chat, but like earlier Qwen-Max models, it is not open source. Developers can access it through the API in Alibaba Cloud Model Studio, which offers an OpenAI-compatible interface.
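Because the interface is OpenAI-compatible, a standard Chat Completions request should work against it. The sketch below builds such a request using only Python's standard library. The endpoint URL, the model id `qwen3-max`, and the helper names are assumptions to verify against the Model Studio documentation, not values confirmed by this article. The official `openai` Python package can also be pointed at the same endpoint through its `base_url` argument.

```python
import json
import urllib.request

# Assumptions: verify both values in the Alibaba Cloud Model Studio documentation.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen3-max"


def build_chat_request(prompt, api_key, model=MODEL, base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style Chat Completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the reply text (requires network access and a valid key)."""
    with urllib.request.urlopen(request) as resp:
        payload = json.load(resp)
    # OpenAI-compatible responses put the reply in choices[0].message.content.
    return payload["choices"][0]["message"]["content"]


req = build_chat_request("Explain Mixture of Experts in one sentence.", api_key="YOUR_API_KEY")
print(req.full_url, req.get_method())
```

To actually call the model, pass a real key (for example, one read from an environment variable) and call `send(req)`.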

Qwen3-Max is part of a wider expansion of Alibaba's AI lineup. The company recently introduced Qwen3-TTS-Flash for voice generation, Qwen-Image-Edit for image editing, Qwen3-Next for faster text processing, and Qwen3-Omni, a multimodal model for text, image, and audio tasks.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
