Alibaba's Qwen3-Coder-Next delivers solid coding performance in a compact package

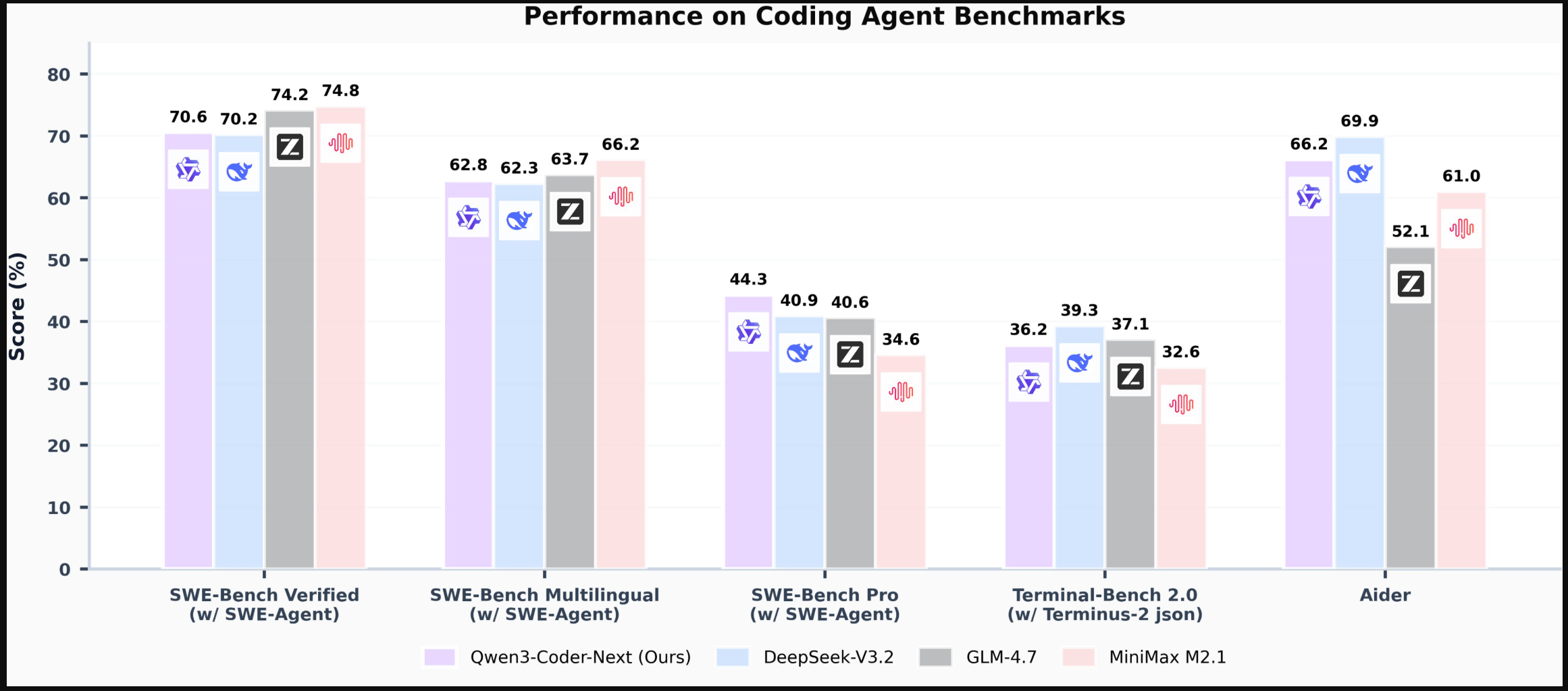

Alibaba has released Qwen3-Coder-Next, a new open-weight AI model for programming agents and local development. Trained on 800,000 verifiable tasks, the model has 80 billion parameters in total but activates only 3 billion of them at any time. Despite this small active footprint, Alibaba says it matches or outperforms much larger open-source models on coding benchmarks, scoring above 70 percent on SWE-Bench Verified with the SWE-Agent framework. As always, benchmarks are only a rough indicator of real-world performance.
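To put the parameter figures in perspective, here is a rough back-of-the-envelope sketch. The 80 billion and 3 billion figures come from the announcement; the bytes-per-parameter values are illustrative assumptions about common weight precisions, not numbers from Alibaba. The sketch also assumes that all weights have to be stored even though only a fraction is used per token, as is typical for sparsely activated models.

```python
# Parameter counts quoted in the article, in billions.
TOTAL_PARAMS_B = 80
ACTIVE_PARAMS_B = 3


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters that is active at any time."""
    return active_b / total_b


def weight_memory_gb(params_b: float, bytes_per_param: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so real
    memory use will be higher.
    """
    return params_b * bytes_per_param


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    print(f"Active share of parameters: {share:.2%}")

    # Assumed precisions (hypothetical, for illustration only).
    for label, bytes_per_param in (("16-bit", 2.0), ("8-bit", 1.0), ("4-bit", 0.5)):
        size = weight_memory_gb(TOTAL_PARAMS_B, bytes_per_param)
        print(f"{label} weights: about {size:.0f} GB")
```

Under these assumptions, the small active share mainly reduces compute per token, while the storage needed for the weights still scales with the full 80 billion parameters.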

The model supports a context window of 256,000 tokens and works with development environments such as Claude Code, Qwen Code, Qoder, Kilo, Trae, and Cline. Local tools such as Ollama, LM Studio, MLX-LM, llama.cpp, and KTransformers also support it. Qwen3-Coder-Next is available on Hugging Face and ModelScope under the Apache 2.0 license. More details are available in the blog post and technical report.
