Anthropic's new 'think tool' lets Claude take notes to solve complex problems

Anthropic found a simple way to improve its AI assistant's ability to perform complex, multi-step tasks: let it take notes as it works.

The company says adding a "scratchpad" where Claude can write down its thoughts, combined with some example prompts, significantly improves its problem-solving abilities.

The system works through a "think" tool that gives Claude space to record its reasoning before moving forward. Under the hood, it's just a JSON tool definition whose only effect is to append these thoughts to a log:

    {
      "name": "think",
      "description": "Use the tool to think about something. It will not obtain new information or change the database, but just append the thought to the log. Use it when complex reasoning or some cache memory is needed.",
      "input_schema": {
        "type": "object",
        "properties": {
          "thought": {
            "type": "string",
            "description": "A thought to think about."
          }
        },
        "required": ["thought"]
      }
    }

This differs from Claude's recently added "Extended Thinking" feature. While Extended Thinking helps Claude reason before generating an answer, the new "think" tool works during the answer process itself, especially when Claude needs to process new information from other tools.
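To make the "just append the thought to the log" behavior concrete, here is a minimal sketch of how an application might handle a call to the tool. The `handle_tool_call` function, the `thought_log` list, and the acknowledgement string are hypothetical names chosen for illustration. Only the tool definition itself comes from Anthropic.

```python
# Tool definition as quoted in the article.
THINK_TOOL = {
    "name": "think",
    "description": (
        "Use the tool to think about something. It will not obtain new "
        "information or change the database, but just append the thought "
        "to the log. Use it when complex reasoning or some cache memory "
        "is needed."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "thought": {
                "type": "string",
                "description": "A thought to think about.",
            }
        },
        "required": ["thought"],
    },
}


def handle_tool_call(name: str, tool_input: dict, thought_log: list) -> str:
    """Hypothetical dispatcher: the 'think' tool has no side effects
    beyond appending the thought to a log."""
    if name == "think":
        thought_log.append(tool_input["thought"])
        # Nothing is looked up or modified; the model only gets an acknowledgement.
        return "Thought recorded."
    raise ValueError(f"Unknown tool: {name}")


# Example: the model calls the tool while working through a cancellation.
log = []
reply = handle_tool_call(
    "think",
    {"thought": "User wants to cancel; check the 24h rule first."},
    log,
)
```

In a real integration, the definition would presumably sit alongside the application's other tools in the request to Claude, and the returned string would be sent back as the tool result. Those details depend on the surrounding setup and are not shown here.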

Yet another thought in a chain of thoughts

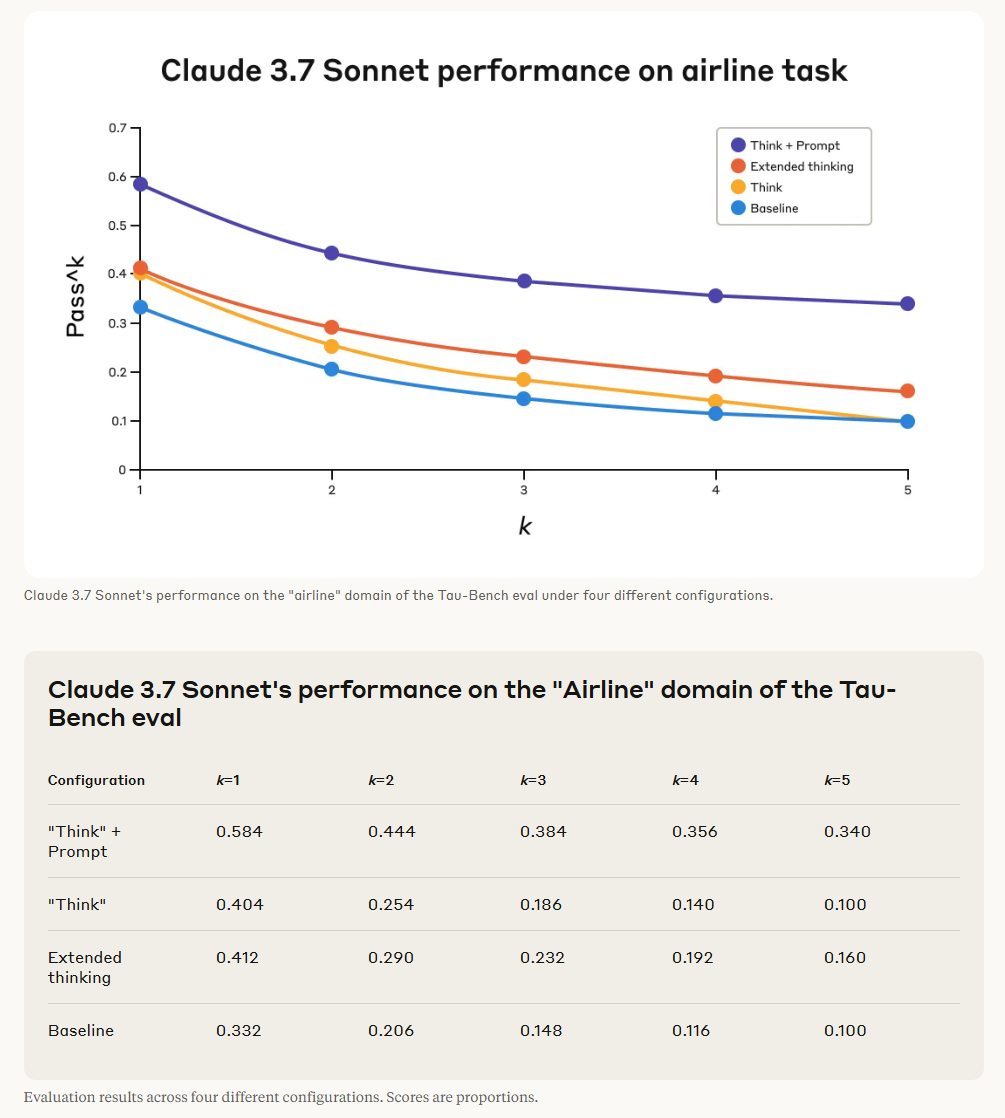

When tested on airline customer service scenarios in the Tau Bench framework, Claude using the think tool together with an optimized prompt achieved a 54 percent relative improvement over the baseline. According to Anthropic, these improvements in multi-step tasks and better adherence to instructions could significantly benefit agent-based AI systems, which still struggle with reliability. Software engineering tests showed more modest gains, with a 1.6 percent improvement in SWE-Bench scores.
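The 54 percent figure is easiest to read as a relative gain rather than a jump of 54 percentage points. The short calculation below illustrates the difference. The two scores are assumptions: they are the pass^1 values recalled from Anthropic's own write-up of the airline test, are not stated in this article, and should be checked against the original before being quoted.

```python
# Assumed pass^1 scores on the airline domain (not given in this article).
baseline_pass1 = 0.370      # assumption: no think tool, no optimized prompt
think_prompt_pass1 = 0.570  # assumption: think tool plus optimized prompt

# Relative gain: improvement measured against the baseline score.
relative_gain = (think_prompt_pass1 - baseline_pass1) / baseline_pass1

# Absolute gain: plain difference between the two scores.
absolute_gain = think_prompt_pass1 - baseline_pass1

print(f"relative: {relative_gain:.0%}, absolute: {absolute_gain * 100:.0f} points")
```

Under these assumed scores, the relative gain rounds to 54 percent, while the absolute gain is about 20 percentage points.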

The key isn't just the scratchpad itself - it's showing Claude how to use it effectively. Anthropic provides example prompts that demonstrate how to list rules, check facts, and plan next steps:

## Using the think tool

Before taking any action or responding to the user after receiving tool results, use the think tool as a scratchpad to:

- List the specific rules that apply to the current request

- Check if all required information is collected

- Verify that the planned action complies with all policies

- Iterate over tool results for correctness

Here are some examples of what to iterate over inside the think tool:

<think_tool_example_1>

User wants to cancel flight ABC123

- Need to verify: user ID, reservation ID, reason

- Check cancellation rules:

* Is it within 24h of booking?

* If not, check ticket class and insurance

- Verify no segments flown or are in the past

- Plan: collect missing info, verify rules, get confirmation

</think_tool_example_1>

<think_tool_example_2>

User wants to book 3 tickets to NYC with 2 checked bags each

- Need user ID to check:

* Membership tier for baggage allowance

* Which payment methods exist in profile

- Baggage calculation:

* Economy class × 3 passengers

* If regular member: 1 free bag each → 3 extra bags = $150

* If silver member: 2 free bags each → 0 extra bags = $0

* If gold member: 3 free bags each → 0 extra bags = $0

- Payment rules to verify:

* Max 1 travel certificate, 1 credit card, 3 gift cards

* All payment methods must be in profile

* Travel certificate remainder goes to waste

- Plan:

1. get user ID

2. verify membership level for bag fees

3. check which payment methods in profile and if their combination is allowed

4. calculate total: ticket price + any bag fees

5. get explicit confirmation for booking

</think_tool_example_2>

According to Anthropic, the "think" tool is most useful for analyzing tool output, following complex rules, and making step-by-step decisions where mistakes could be costly. Domain-specific examples help achieve the best results. The tool is not needed for simpler tasks, like single tool calls or prompts with few constraints, and should only be added when those aren't reliable enough on their own.
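The rule-checking in the second example prompt can be written out as a short sketch, which shows the kind of reasoning Claude is expected to do in the scratchpad. The free-bag allowances and payment limits come from the example. The $50 fee per extra bag is an assumption inferred from "3 extra bags = $150", and the function and constant names are hypothetical.

```python
from collections import Counter

# Free checked bags per passenger, as listed in the example prompt.
FREE_BAGS_BY_TIER = {"regular": 1, "silver": 2, "gold": 3}

# Assumption: inferred from "3 extra bags = $150" in the example.
FEE_PER_EXTRA_BAG = 50

# Payment limits from the example: max 1 certificate, 1 credit card, 3 gift cards.
PAYMENT_LIMITS = {"travel_certificate": 1, "credit_card": 1, "gift_card": 3}


def extra_bag_fee(tier: str, passengers: int, bags_each: int) -> int:
    """Fee for checked bags beyond the free allowance of the membership tier."""
    free = FREE_BAGS_BY_TIER[tier]
    extra_bags = max(0, bags_each - free) * passengers
    return extra_bags * FEE_PER_EXTRA_BAG


def payment_mix_allowed(methods: list) -> bool:
    """True if the combination of payment methods stays within the limits."""
    counts = Counter(methods)
    return all(counts[m] <= PAYMENT_LIMITS.get(m, 0) for m in counts)


# The scenario from the example: 3 passengers, 2 checked bags each.
fees = {tier: extra_bag_fee(tier, 3, 2) for tier in FREE_BAGS_BY_TIER}
```

For the scenario in the example, `fees` maps regular members to 150 and silver and gold members to 0, which matches the figures in the prompt.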

The tool integrates easily with existing Claude systems and only affects performance when it's actually being used. Though most testing used Claude 3.7 Sonnet, Anthropic reports the improvements work just as well with Claude 3.5 Sonnet (New).
