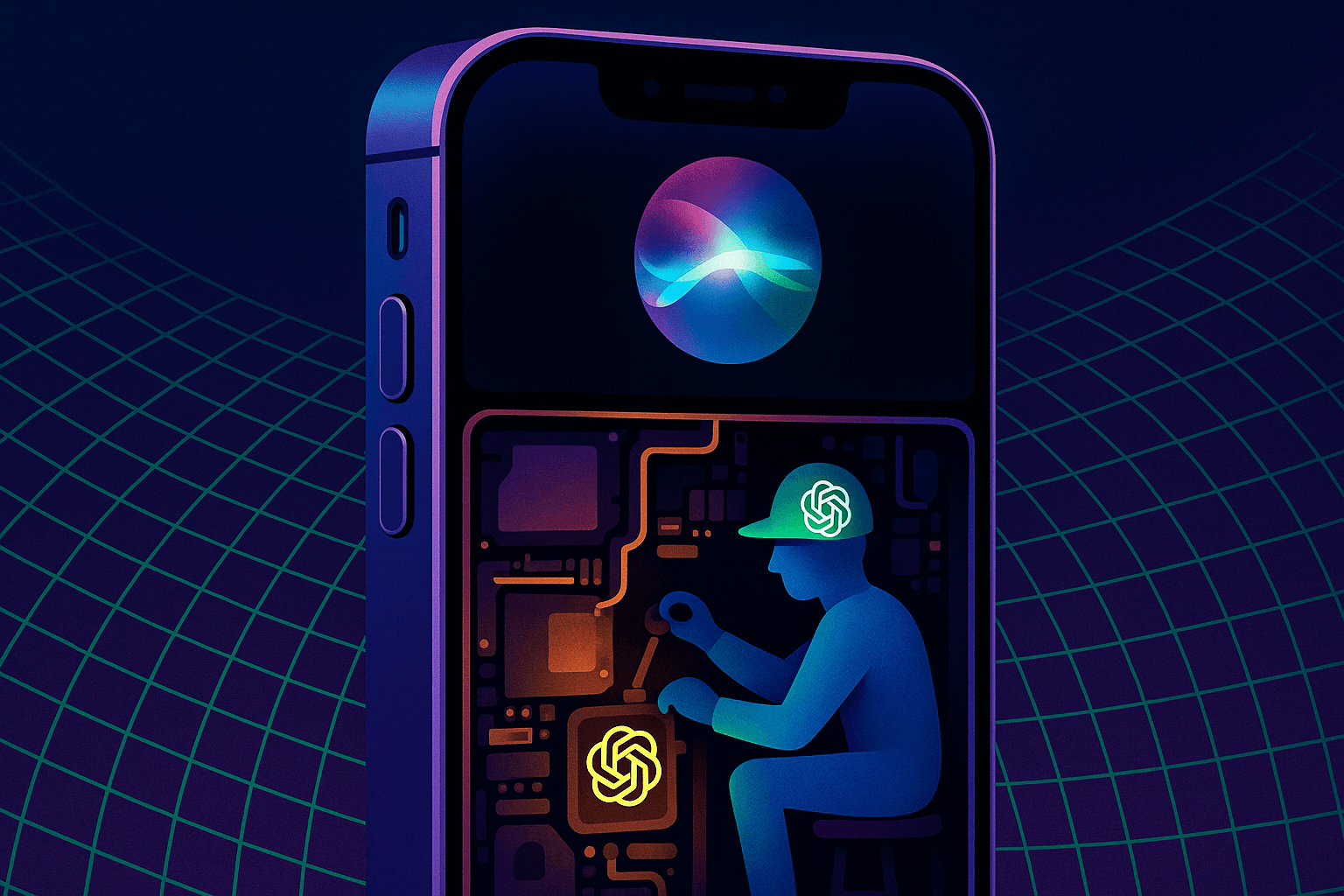

Apple plans to introduce its own Large Language Model (LLM) for its devices this year, according to Bloomberg's Apple reporter Mark Gurman.

According to Gurman, the company is developing its generative AI capabilities to run entirely on the device itself.

This approach should deliver significantly faster response times than cloud-based solutions and make it easier to protect user data. Apple is expected to provide more details at its Worldwide Developers Conference in June.

The introduction of generative AI in iOS 18 could help Apple keep pace with other AI assistants such as Google's Bard, OpenAI's ChatGPT, and Microsoft's Copilot. Gurman expects Apple to deliver a marketing message showcasing how the technology can help people in their daily lives.

## Apple needs to catch up

However, Gurman notes that Apple is playing catch-up to companies like OpenAI and Google in advanced AI, and that its initial AI capabilities may not be as good as those of its competitors.

Because the LLM will run directly on the iPhone's processor, the AI tools could in some cases be less powerful and less knowledgeable than cloud-based models. On the other hand, latency should be very low, and Apple will have far fewer privacy issues to worry about.

Apple recently acquired Canadian AI startup DarwinAI, which develops technologies for visual inspection of components during manufacturing and specializes in making AI systems smaller and faster.

Gurman's report does not make clear whether or how this on-device approach will affect Apple's negotiations with Google and OpenAI to bring their cloud-based AI services to Apple devices. The companies are reportedly already in talks.

Apple could use Gemini or OpenAI's GPT models to handle AI functions for which its own local model is not good enough. On the flip side, Apple would risk reputational damage if Google or another partner slipped up on privacy.