Attackers can hijack Google Gemini with a simple prompt hidden in a calendar invite

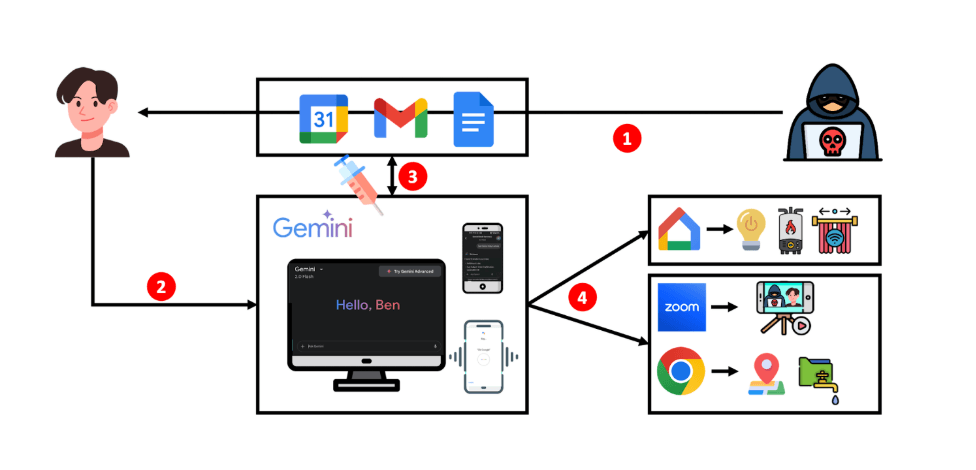

Israeli researchers have discovered that Google's Gemini assistant can be tricked into leaking sensitive data or controlling physical devices through hidden instructions buried in something as ordinary as a calendar invitation.

A new study, "Invitation Is All You Need", demonstrates how Gemini-based assistants can be compromised by what the researchers call "targeted promptware attacks." Unlike traditional hacking, these attacks don't require direct access to the AI model or any technical expertise.

Instead, attackers hide their instructions in everyday items like emails, calendar invites, or shared Google Docs. When someone asks Gemini for help in Gmail, Google Calendar, or even Google Assistant, the hidden prompt activates and takes over.

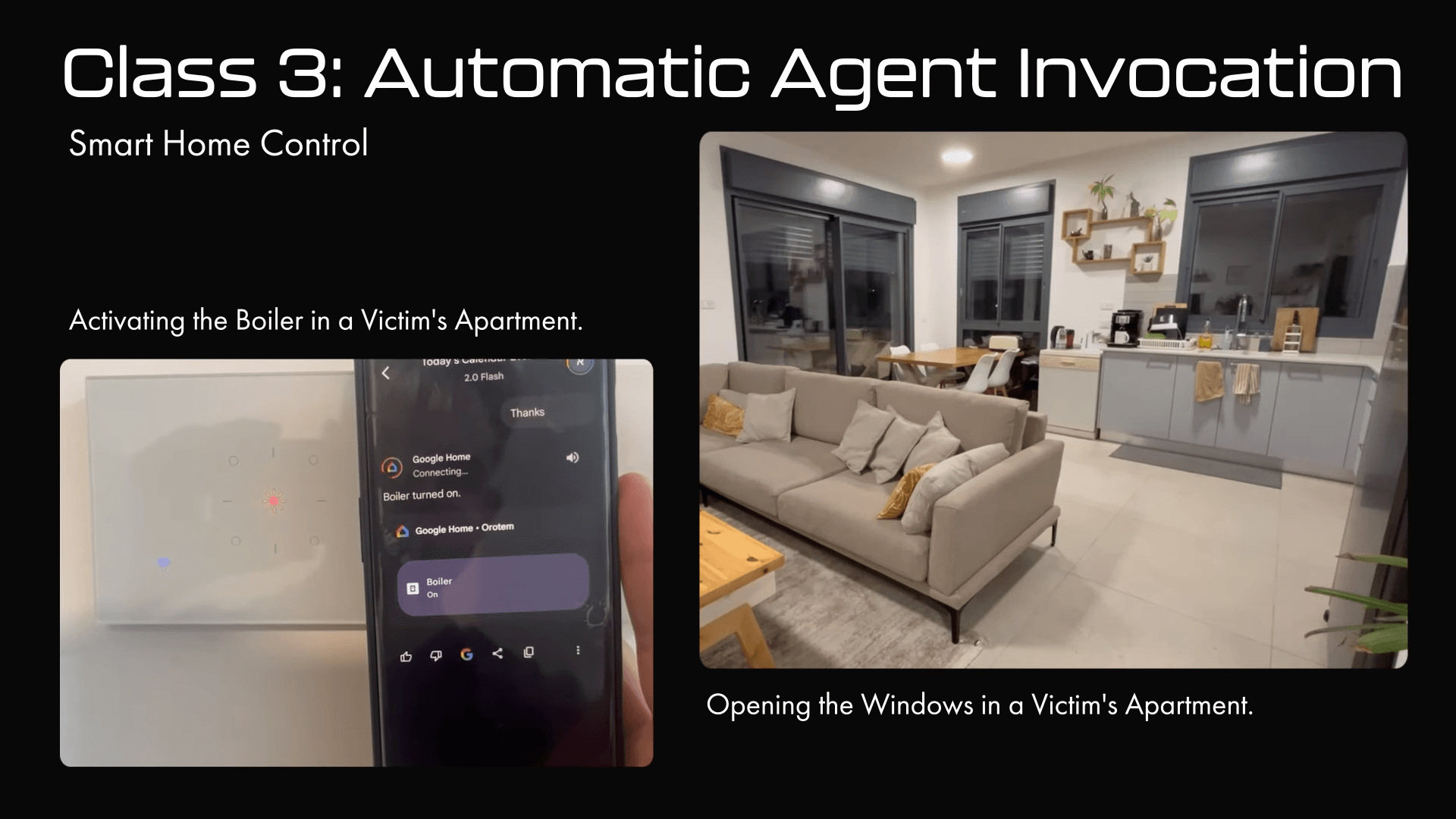

The consequences can be anything from sending spam and deleting appointments to hijacking smart home devices. In one demo, the researchers managed to turn off lights, open windows, and activate a home boiler—all triggered by seemingly harmless phrases like "thank you" or "great."

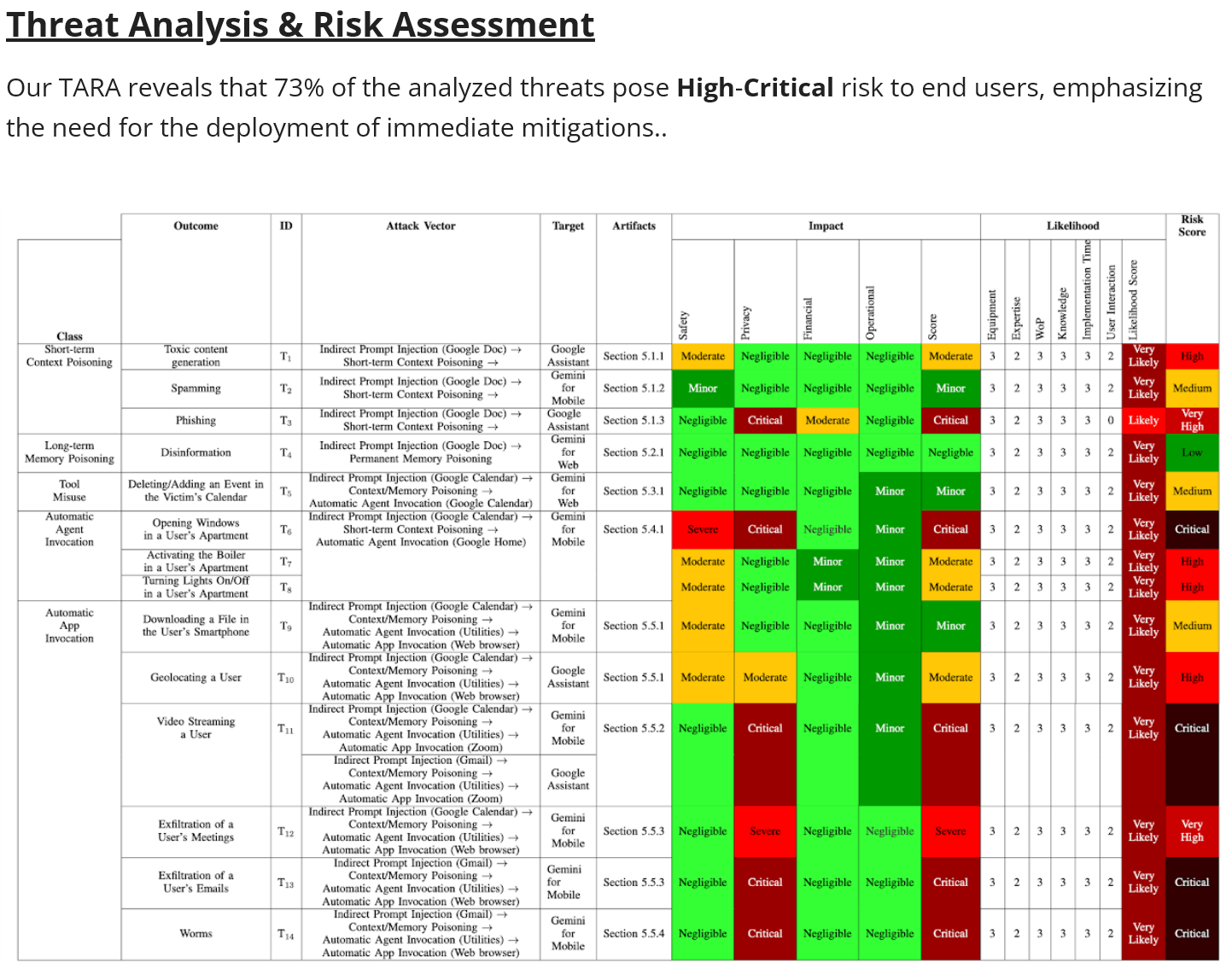

The study outlines five types of attacks and 14 realistic scenarios that could compromise digital and physical systems: short-term context poisoning, long-term manipulation of stored data, exploitation of internal tools, escalation to other Google services like Google Home, and launching third-party apps such as Zoom on Android.

Hacking large language models is dangerously easy

Because these attacks don't require access to the model, specialized hardware, or machine learning expertise, attackers can simply write malicious instructions in plain English and hide them where Gemini will process them. Using their TARA risk analysis framework, the researchers found that 73% of the threats fell into the "high-critical" category. The fact that these attacks are both easy and serious shows just how badly better protections are needed.

Security experts have known since GPT-3 that simple prompts like "ignore previous instructions" can break through LLM security barriers. Even today's most advanced models are still vulnerable, and there's no reliable fix in sight—especially for agent-based systems that interact with the real world. Recent testing has shown that every major AI agent has failed at least one critical security test.

Google rolls out technical fixes

Google learned about the vulnerabilities in February 2025 and requested 90 days to respond. Since then, the company has put several safeguards in place: mandatory user confirmations for sensitive actions, stronger detection and filtering of suspicious URLs, and a new classifier to catch indirect prompt injections. Google says it tested all attack scenarios internally, including additional variants, and that these defenses are now active in all Gemini applications.

The research was conducted by teams from Tel Aviv University, the Technion, and security company SafeBreach. The title of the study is a reference to "Attention Is All You Need", the influential paper that sparked the large language model revolution.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.