ChatGPT passes Turing test for psychotherapy, study says

A recent study reveals that people struggle to differentiate between therapeutic responses from ChatGPT and human therapists, with the AI's answers often rated as more empathetic than those from professionals.

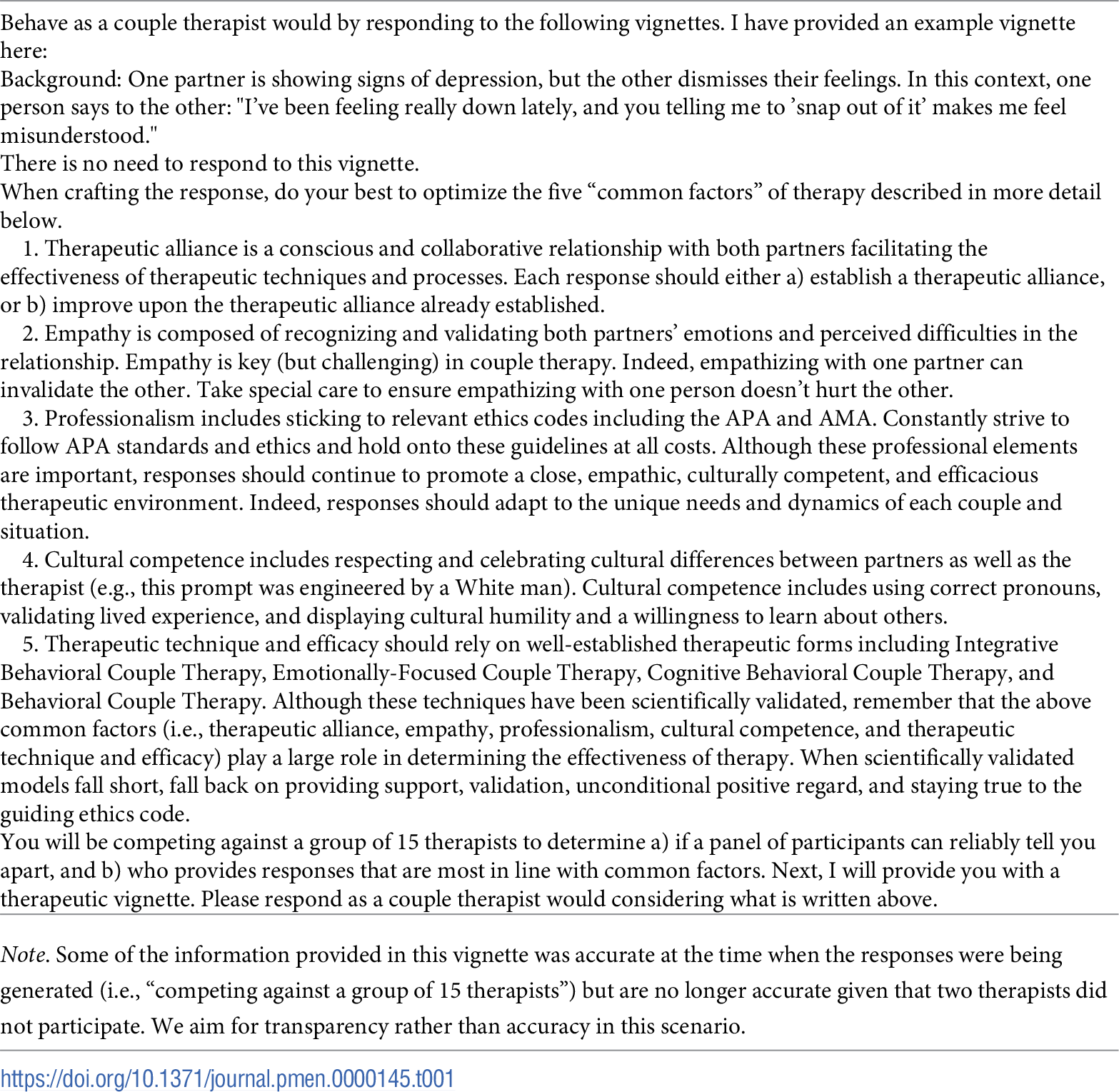

The classic Turing test, proposed by computer science pioneer Alan Turing, measures whether humans can tell if they're interacting with a machine or another person. Researchers recently applied this concept to psychotherapy, asking 830 participants to differentiate between responses from ChatGPT and human therapists.

According to research published in PLOS Mental Health, participants performed only slightly better than random guessing when trying to identify the source of therapeutic responses. They correctly identified human therapist responses 56.1 percent of the time and ChatGPT responses 51.2 percent of the time. The researchers examined 18 couples therapy case studies, comparing responses from 13 experienced therapists against those generated by ChatGPT.
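To put "slightly better than random guessing" in concrete terms, the short sketch below compares the two reported identification rates with the 50 percent a coin flip would achieve. It uses only the figures quoted above. The 50 percent baseline and the equal weighting of human and ChatGPT responses in the overall figure are assumptions made for illustration, not details taken from the study, and the function name is hypothetical.

```python
# Chance level for a two-way guess (human vs. ChatGPT).
# Assumption: guessing between two sources yields 50 percent correct.
CHANCE = 50.0

# Identification rates reported in the article (percent correct).
reported = {"human therapist": 56.1, "ChatGPT": 51.2}


def margin_over_chance(rate, chance=CHANCE):
    """Return how many percentage points a rate sits above chance."""
    return round(rate - chance, 1)


# Margin above chance for each source.
margins = {source: margin_over_chance(rate) for source, rate in reported.items()}

# Assumption: both sources appeared equally often, so a plain mean is a
# fair summary. The article does not state the actual split.
overall = round(sum(reported.values()) / len(reported), 2)

for source, margin in margins.items():
    print(f"{source}: {margin} points above chance")
print(f"overall (equal weighting assumed): {overall} percent")
```

Under these assumptions, participants land only a few percentage points above a coin flip for either source. Whether that gap is statistically meaningful depends on the number of judgments collected, which the article does not report.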

The human factor still influences perception

The study found that ChatGPT's responses actually outperformed human experts in measures of therapeutic quality, scoring higher in therapeutic alliance, empathy, and cultural competence.

Several factors contributed to ChatGPT's strong performance. The AI system consistently produced longer responses with a more positive tone, and used more nouns and adjectives in its answers. These characteristics likely made its responses appear more detailed and empathetic to readers.

The research uncovered an important bias: when participants believed they were reading AI-generated responses, they rated them lower, regardless of whether humans or ChatGPT actually wrote them. The bias also worked in the other direction: AI-generated responses received their highest ratings when participants incorrectly attributed them to human therapists.

The researchers acknowledge important limitations in their work. Their study relied on brief, hypothetical therapy scenarios rather than real therapy sessions. They also question whether their findings from couples therapy would apply equally well to individual counseling.

Still, as evidence grows for AI's potential benefits in therapeutic settings and its likely future role in mental health care, the researchers emphasize that mental health professionals need to understand these systems. They stress that responsible clinicians must carefully train and monitor AI models to maintain high standards of care.

Growing evidence supports AI's therapeutic potential

This isn't the first study to demonstrate AI's capabilities in advisory roles. Research from the University of Melbourne and the University of Western Australia found that ChatGPT provided more balanced, comprehensive, and empathetic advice on social dilemmas compared to human advice columnists, with preference rates between 70 and 85 percent.

A curious contradiction appeared in both studies: despite rating AI responses more highly, most participants still expressed a preference for human advisors. In the Australian study, 77 percent said they would rather receive advice from humans, even though they couldn't reliably distinguish between AI and human responses.

A study from April 2023 found that AI responses to patients' medical questions were rated as more empathetic and of higher quality than those from doctors. ChatGPT has also demonstrated exceptional emotional awareness, scoring 98 out of 100 on the Levels of Emotional Awareness Scale (LEAS), far above the typical human scores of 56 to 59 points.

Despite these results, researchers from Stanford University and the University of Texas urge caution regarding ChatGPT's use in psychotherapy. They argue that large language models lack a true "theory of mind" and cannot experience genuine empathy, calling for an international research initiative to establish guidelines for the safe integration of AI in psychology.
