Deepmind AlphaTensor record for matrix multiplication held for a good week

Update, Oct. 18, 2022:

Researchers Manuel Kauers and Jakob Moosbauer of Johannes Kepler University Linz have already broken Deepmind's record for matrix multiplication by one step, they write in their paper. They developed a way to perform a 5×5 matrix multiplication in 95 steps - one step less than Deepmind's record of 96 steps from early October. The original value was 98 steps. The Austrian researchers built on Deepmind's work.

Original article, Oct. 6, 2022:

Deepmind's new AI AlphaTensor lets computers multiply more efficiently

Deepmind's latest AI system, AlphaTensor, automatically discovers new algorithms that could speed up the matrix multiplications ubiquitous in computer science.

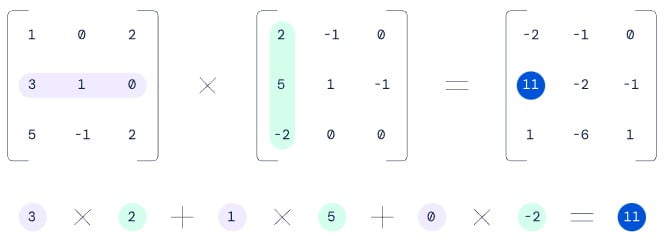

Computers can add numbers faster than they can multiply them. This is a problem insofar as many mathematical tasks in computer science are handled via so-called matrix multiplications, for example in machine learning, in the creation of computer graphics, in simulations of all kinds or in data compression.

Even small efficiency gains in matrix multiplication could therefore have a big impact. Mathematicians have accordingly been searching for more efficient algorithms for matrix multiplication for decades.

In 1969, German mathematician Volker Strassen developed a particularly efficient algorithm that could solve 4×4 matrix multiplications with 49 instead of 64 multiplications. That was some 50 years ago, but Strassen's algorithm is still in use today, having yet to be surpassed.

From AlphaZero to AlphaTensor: The algorithm game

Deepmind's latest AI system AlphaTensor, which is based on the general-purpose board game AI AlphaZero introduced in 2018, has now discovered a new algorithm that is said to be faster than Strassen's algorithm and has the "potential to improve efficiency by 10 to 20 percent across trillions of calculations per day," according to Deepmind CEO Demis Hassabis.

To train AlphaTensor, the Deepmind research team turned the matrix multiplication problem into a kind of 3D board game, where each move results in a building block of a new algorithm. AlphaTensor was rewarded for generating a new algorithm in as few steps as possible, choosing between trillions of moves each time. Deepmind calls this the "Tensor Game."

Fewer calculation steps to the result

Deepmind had AlphaTensor process a maximum of 5×5 input matrices. In the process, AlphaTensor independently discovered Strassen's algorithm and other already-known methods. The AI system also developed new algorithms that, according to Deepmind, are more efficient than previously existing ones.

For example, the record for a 5×5 matrix was previously 80 multiplications; a new AlphaTensor algorithm requires only 76. The 4×4 multiplication mentioned at the beginning, which Strassen reduced to 49 steps, AlphaTensor optimizes to 47 steps. Such efficiency gains were achieved by algorithms generated by AlphaTensor for more than 70 matrix multiplications.

The Deepmind researchers are amazed by the sheer number of possible ways to multiply matrices. In addition, AlphaTensor can develop hardware-specific algorithms that are said to run up to 20 percent faster than currently used algorithms on Google's TPUs and Nvidia's V100, for example, which are often used for machine learning.

AI for science

For Deepmind, AlphaTensor in particular is another demonstration of how progress in artificial intelligence can provide progress in other scientific disciplines. The work also shows that the AlphaZero system, which was actually developed for traditional games, can solve mathematical problems far beyond this field.

"This paper is a stepping stone in DeepMind’s mission to advance science and unlock the most fundamental problems using AI," Deepmind writes.

Prof. Dr. Markus Bläser, professor of computational complexity at Saarland University, positively highlights the method of automatically adapting multiplication algorithms to selected hardware, as this "would be very difficult for a human."

Otherwise, Deepmind's work contributes to the theoretical understanding of matrix multiplication on small matrices, he said. The only improvement over Strassen's algorithm is the new upper bound for the multiplication of matrices of size four.

"To better understand the theoretical complexity of matrix multiplication, on the other hand, the subproblems considered in current research on this are so large and complex that I don't see any applications for automatic search here at the moment," Bläser says.

Prof. Dr. Holger Hoos, professor of AI and machine learning at RWTH and Leiden University, calls Deepmind's work "interesting, but not groundbreaking." The speedup compared to known methods is relatively small, he said. He does not expect the automatically constructed algorithms to find immediate use in practical applications.

"The work is undoubtedly of technical interest to experts in automatic algorithm design like myself and my colleagues, but I don't expect any real breakthroughs in research or practice to be made immediately based on it," says Hoos, who finds the methodological approach "undoubtedly interesting."

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.