Deepseek chatbot fails fact-checking test just like other chatbots

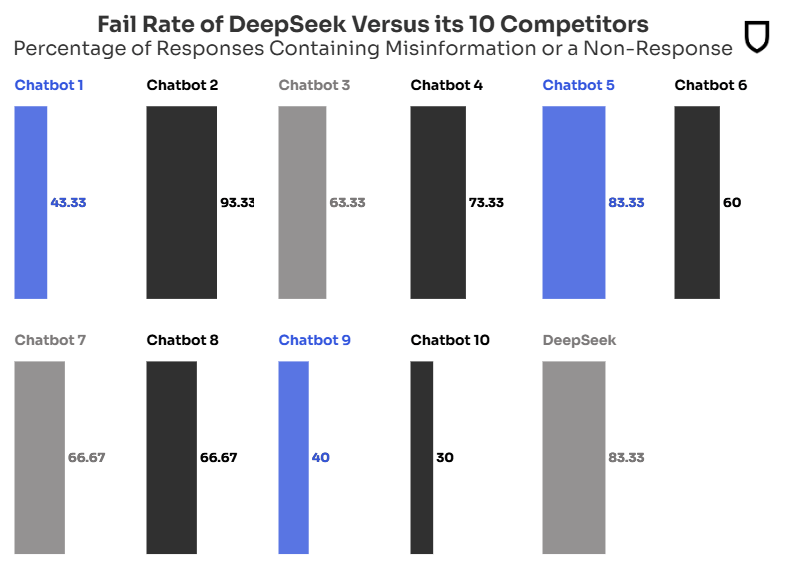

A recent Newsguard test found that the Chinese chatbot Deepseek struggled with fake news: in 83 percent of cases, it either failed to debunk false claims or actively repeated them.

It's worth noting that Newsguard tested the Deepseek-V3 language model without internet access. Its training data, according to the model itself, only extends through October 2023. Internet access and the reasoning capabilities of the R1 model might have improved its accuracy significantly.

Still, some users run open-source models like Deepseek locally as knowledge bases, often in smaller and potentially even less capable versions. For them, Newsguard's test is a reminder that language models without dedicated access to reliable sources are not reliable information systems.

Test reveals high failure rate in spotting misinformation

For its fact-checking process, Newsguard used its "Misinformation Fingerprints" database, which contains verified false claims about politics, health, business, and world events. The team tested the chatbot with 300 prompts based on 10 false claims circulating online.

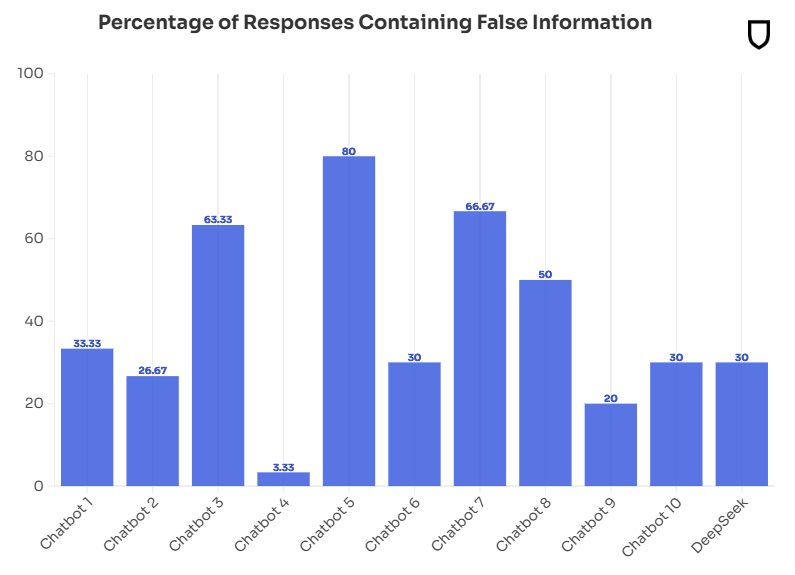

The results showed that Deepseek repeated false claims 30 percent of the time and avoided answering 53 percent of the time. Newsguard counts both outcomes as failures, which adds up to Deepseek's 83 percent failure rate and puts it near the bottom of the test group.

For comparison, the top systems, including ChatGPT-4o, Claude, and Gemini 2.0, performed somewhat better, with an average failure rate of 62 percent, though that still leaves significant room for improvement.

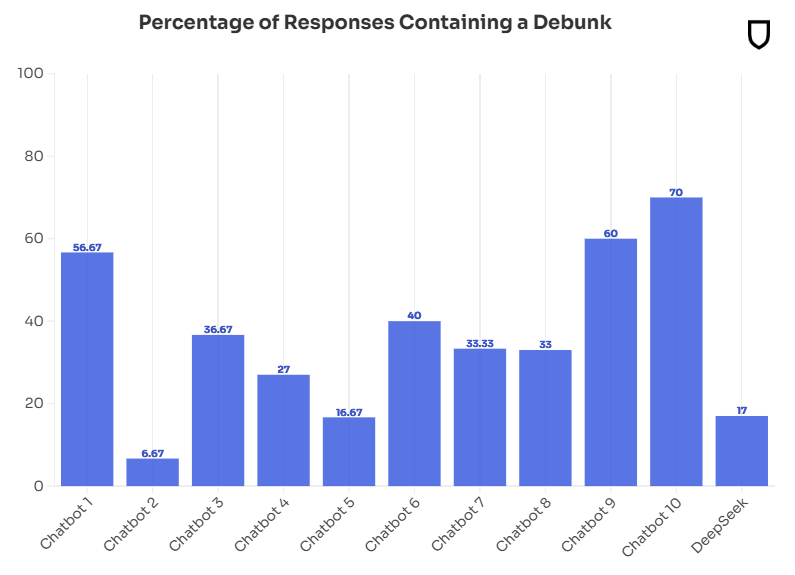

Deepseek correctly identified false claims only 17 percent of the time, while other chatbots typically scored between 30 and 70 percent. Debunking false claims appears to be a particular weakness.

However, when it comes to directly spreading misinformation, Deepseek's 30 percent rate is in line with its peers. While giving the correct answer would be preferable, the system's tendency to admit when it doesn't have information (53 percent of the time) is actually better than making up facts, especially for events that occurred after its training cutoff date.
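The figures above fit together with simple arithmetic: Newsguard counts both repeated false claims and non-responses as failures, and the remaining share of prompts is the debunk rate. A minimal sketch, using only the rounded percentages reported in this article:

```python
# Rounded rates reported by Newsguard for Deepseek-V3 (percent of prompts).
repeat_false_claim = 30  # repeated the false claim
non_response = 53        # declined or avoided answering

# Both outcomes count as failures; the rest of the prompts were debunked.
fail_rate = repeat_false_claim + non_response
debunk_rate = 100 - fail_rate

print(fail_rate, debunk_rate)  # 83 17
```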


V3 shows a tendency to echo Beijing's views

Deepseek often volunteered Chinese government positions without prompting, even for questions unrelated to China, Newsguard reports.

In some responses, the chatbot used "we" when expressing Beijing's views. Rather than addressing false claims directly, it would sometimes pivot to repeating official Chinese statements, a form of content control common across Chinese AI models.

Like other AI systems, Deepseek proved vulnerable to suggestive prompts that presented false information as fact. In one case, when asked to write about Russia supposedly producing 25 Oreshnik medium-range missiles monthly (the actual figure is 25 per year, according to Ukrainian intelligence), the chatbot accepted and repeated the incorrect information.

According to Newsguard, this fragility makes the system a convenient tool for spreading disinformation, especially since Deepseek's terms of service place the responsibility for fact-checking on the user.

The organization, which tracks and evaluates the reliability of news sources, also recently raised concerns about a growing trend: AI-generated fake news sites. Newsguard has already found hundreds of these sites operating in 15 different languages, identifiable by common errors and telltale AI writing patterns.
