GPT-3 web app "Explainpaper" explains complex science in simple terms

The GPT-3 based web app explainpaper does exactly what its name promises: it explains complex scientific jargon in simple terms.

According to co-developer Aman Jha, the idea for explainpaper came to him while he was studying a complicated neuroscience paper. He used a GPT-3 prompt to simulate a conversation between a budding researcher and an expert in brain-computer interfaces. The explanations generated by GPT-3 are easier to understand than the jargon used by researchers. "GPT-3 is a budding researcher's best friend," writes Aman Jha.

Explainpaper explains papers

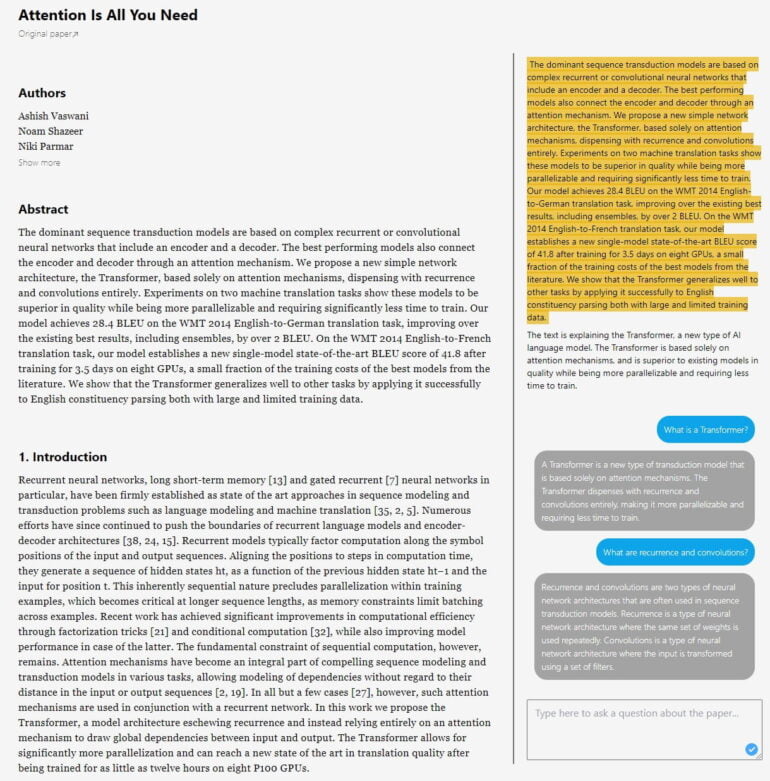

Together with his colleague Jade, he launched the explainpaper website. Here, users can upload a paper and mark individual sentences or paragraphs in a web interface, which GPT-3 then describes in abbreviated, ideally simpler terms. A chat function allows users to ask more detailed questions.
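To make the idea concrete, here is a minimal sketch of how a highlighted passage could be wrapped in a researcher-and-expert dialogue prompt with some surrounding paper text as context. This is an illustration only, not Explainpaper's actual prompt: the function name `build_explain_prompt`, the wording of the prompt, and the `max_context_chars` limit are all assumptions.

```python
def build_explain_prompt(highlight: str, paper_context: str,
                         max_context_chars: int = 2000) -> str:
    """Assemble a dialogue-style prompt for a text-completion model.

    Hypothetical format: the real Explainpaper prompt is not public.
    """
    # Trim the surrounding paper text so the prompt stays within a size budget.
    context = paper_context.strip()[:max_context_chars]
    passage = highlight.strip()
    return (
        "The following is a conversation between a budding researcher "
        "and an expert in the paper's field.\n\n"
        f"Paper excerpt:\n{context}\n\n"
        "Researcher: Can you explain this passage in simple terms?\n"
        f'"{passage}"\n'
        "Expert:"
    )


if __name__ == "__main__":
    prompt = build_explain_prompt(
        highlight="Spike sorting was performed offline.",
        paper_context="We recorded neural activity from motor cortex.",
    )
    print(prompt)
```

The prompt ends with "Expert:" so that a completion model continues the dialogue in the expert's voice; the resulting string would then be sent to whichever model endpoint is in use.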

Explainpaper uses GPT-3 davinci-002 via the everyprompt platform. The GPT-3 model has not yet been fine-tuned, but that is expected to change soon: The team plans to use 100,000 explainpaper data points to optimize GPT-3 for paper explanations. The data has been collected since the site's launch. The current version cannot yet explain mathematical functions, but this feature is also planned for a later version.

More than 113,000 paper explanations since launch

The concept has been well received: Between the site's launch in October and the beginning of November, more than 3,700 users generated over 113,000 explanations and uploaded almost 10,000 papers.

However, explainpaper has one drawback: GPT-3 sometimes outputs incomplete summaries or simply wrong explanations. This can be a problem especially for beginners in a new field, as they lack the experience to recognize inconsistencies and errors.

Jha and Jade plan to address this issue with two new features: User ratings will serve as a feedback loop for the system in the future. In addition, GPT-3 will use a confidence metric that indicates how sure the system is about an answer or summary.

"It is entirely possible for GPT-3 to generate an answer that is slightly off. We make sure the prompt has a lot of context to work with," Jha tells me.

The fact that GPT-3's training ended in 2019 "has not been too much of an issue," Jha says: GPT-3 can understand the paper's points and uses its background knowledge of the world to explain new findings in simpler terms.

The big goal of the project is to get more people interested in science. The two founders are currently working on a Pro version of the software, which for $10 a month will offer some convenience features, such as saving a paper along with the marked passages and explanations. The PDF reader will also be improved and the model refined for more accurate answers.
