Google DeepMind's latest AI models might bring us one step closer to LLMs that can reason

Google DeepMind has unveiled two new AI systems that won silver medals at this year's International Mathematical Olympiad (IMO).

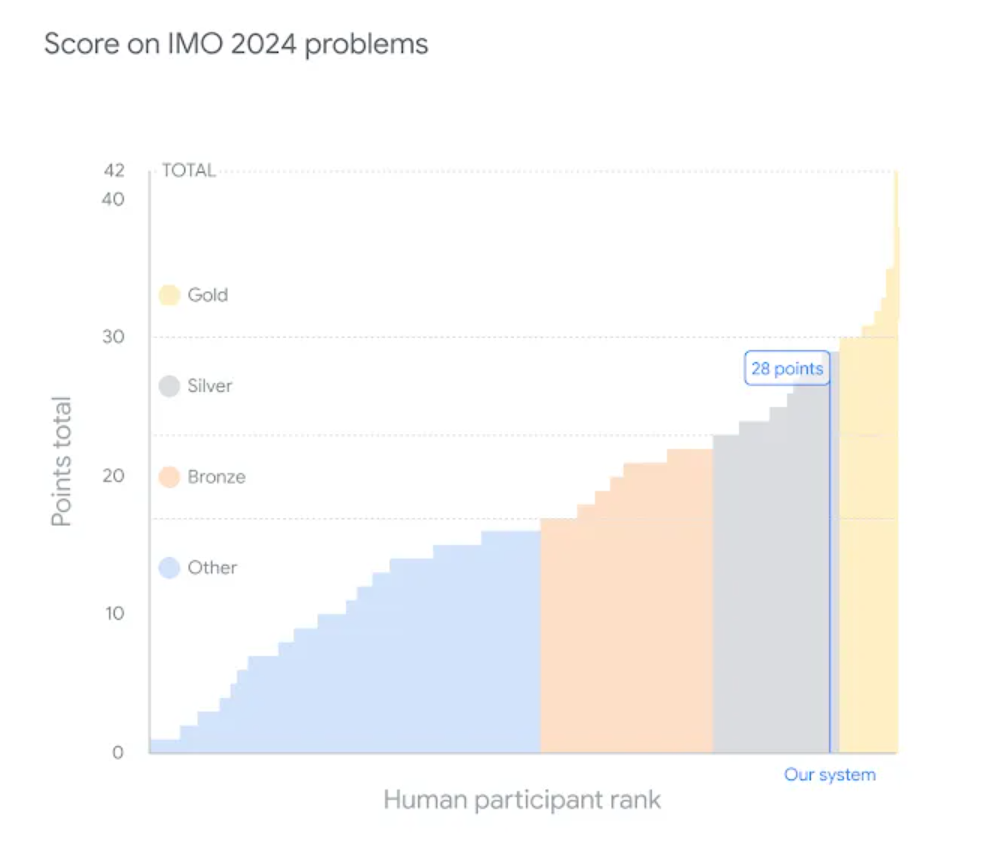

The systems, AlphaProof and AlphaGeometry 2, solved four of the six given problems and scored 28 out of 42 possible points. Deepmind sees this as a step towards Artificial General Intelligence (AGI) with advanced mathematical capabilities. A gold medal was awarded for 29 points or more, which 58 of the 609 human participants achieved.

AlphaProof combines a pre-trained language model with the AlphaZero reinforcement learning algorithm, which has been successfully applied to Chess, Shogi and Go, to prove mathematical statements in the formal language Lean.

The system trains itself by solving millions of mathematical problems of varying difficulty and learning from them by searching for possible proof steps in Lean. Each discovered and verified proof is used to expand AlphaProof's language model and improve its ability to solve subsequent, more challenging problems.

AlphaGeometry 2 is a significantly improved version of its predecessor from January 2024 and is based on a Gemini language model trained on a larger amount of synthetic data. Before this year's IMO, AlphaGeometry 2 was able to solve 83 per cent of all historical IMO geometry problems from the last 25 years, according to Google DeepMind.

For use at the IMO, the tasks were first manually translated into a formal mathematical language. While human participants worked on the tasks in two 4.5-hour sessions, the AI systems needed only a few minutes for some tasks and up to three days for others.

The AI systems' solutions were evaluated according to official IMO rules by leading mathematicians, including Professor Sir Timothy Gowers, himself an IMO gold medalist and Fields Medal winner.

"The fact that the program can come up with a non-obvious construction like this is very impressive, and well beyond what I thought was state of the art," says Gowers.

Logic and LLMs - it's complicated

Google DeepMind sees the development of AI systems with advanced mathematical capabilities as having the potential to open up new areas of science and technology. This is hardly possible with today's generative AI models such as LLMs, which lack reasoning skills and sometimes make grave errors.

DeepMind plans to release more technical details about AlphaProof in the future, and is also exploring approaches to natural language reasoning based on the Gemini model.

This outlook is in line with the statement made by Google DeepMind CEO Demis Hassabis last summer: "At a high level you can think of Gemini as combining some of the strengths of AlphaGo-type systems with the amazing language capabilities of the large models. We also have some new innovations that are going to be pretty interesting." But these approaches have not yet reached consumer-facing products.

OpenAI also aims to teach logical reasoning to multimodal AI models. Codenamed "Strawberry", it is reportedly developing AI technology with enhanced reasoning capabilities, similar to the "Quiet-STaR" method developed by Stanford researchers. The aim is to improve the company's models, particularly for autonomous internet searches and complex planning tasks.

According to a Reuters report, an internally tested OpenAI AI scored over 90 percent on the MATH benchmark for challenging mathematical tasks. By comparison, GPT-4 scored 53 percent and GPT-4o scored 76.6 percent. OpenAI has reportedly announced internally that it is on the verge of AI with reasoning capabilities.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.