Google releases code for HeAR, an AI that analyzes audio to assess health

Google Research has developed an AI system called Health Acoustic Representations (HeAR) that analyzes coughs and breathing sounds to assess health.

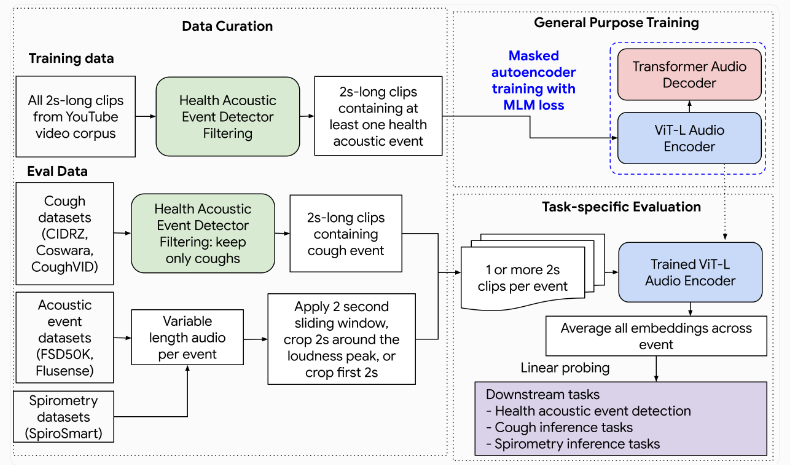

HeAR uses self-supervised learning and was trained on over 300 million short audio clips from non-copyrighted YouTube videos. The neural network is based on the Transformer architecture.

During training, parts of audio spectrograms were hidden, and the network learned to reconstruct these missing sections. This taught HeAR to produce compact representations of audio that capture health-relevant information.
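The masking objective described above can be sketched in plain Python. This is a toy illustration, not Google's training code. The spectrogram is a small list of lists, patches are cut along the time axis only, and the 75 % mask ratio is an arbitrary choice. `placeholder_predict` is a hypothetical stand-in for the Transformer that simply averages the visible patches. The point it shows is that the loss is computed only on the patches the model never saw.

```python
import random
from typing import List, Tuple

Patch = List[float]


def split_into_patches(spectrogram: List[List[float]], patch_len: int) -> List[Patch]:
    """Cut a (time x frequency) spectrogram into flattened, non-overlapping time patches."""
    patches = []
    for start in range(0, len(spectrogram) - patch_len + 1, patch_len):
        frames = spectrogram[start:start + patch_len]
        patches.append([value for frame in frames for value in frame])
    return patches


def mask_patches(num_patches: int, mask_ratio: float, rng: random.Random) -> Tuple[List[int], List[int]]:
    """Randomly split patch indices into (visible, masked)."""
    indices = list(range(num_patches))
    rng.shuffle(indices)
    num_masked = int(round(num_patches * mask_ratio))
    return sorted(indices[num_masked:]), sorted(indices[:num_masked])


def placeholder_predict(visible: List[Patch]) -> Patch:
    """Hypothetical stand-in for the Transformer: guess the mean of the visible patches."""
    dim = len(visible[0])
    return [sum(patch[d] for patch in visible) / len(visible) for d in range(dim)]


def masked_reconstruction_loss(patches: List[Patch], visible: List[int], masked: List[int]) -> float:
    """Mean squared error, computed only over the hidden patches."""
    guess = placeholder_predict([patches[i] for i in visible])
    errors = [(guess[d] - patches[i][d]) ** 2 for i in masked for d in range(len(guess))]
    return sum(errors) / len(errors)


# Toy "spectrogram": 16 time frames x 4 frequency bins.
spectrogram = [[float(t * 4 + f) for f in range(4)] for t in range(16)]
patches = split_into_patches(spectrogram, patch_len=2)
visible, masked = mask_patches(len(patches), mask_ratio=0.75, rng=random.Random(0))
print(len(visible), len(masked), masked_reconstruction_loss(patches, visible, masked))
```

In a real system the placeholder would be a trained network whose weights are updated to lower this loss; the masking and the masked-only loss are what make the setup self-supervised, since no human labels are needed.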

Google published its findings in March 2024 and has now released the code for other researchers to use.

Improved tuberculosis detection

Researchers tested HeAR on 33 tasks from 6 datasets, including recognizing health-related sounds, classifying cough recordings, and estimating lung function values. HeAR outperformed existing audio AI models in most benchmarks.

It achieved an area under the ROC curve (AUROC) of 0.739 in detecting tuberculosis from cough sounds, surpassing the second-best model, TRILL, at 0.652. The authors see potential for using AI cough analysis to identify people in resource-poor areas who need further testing.
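AUROC is not an accuracy: it measures how well a model's scores rank positive cases (here, coughs from people with tuberculosis) above negative ones, where 0.5 is chance level and 1.0 is a perfect ranking. A minimal sketch of the pairwise definition, using made-up labels and scores:

```python
from typing import Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive (label 1) outscores a random negative (label 0).

    Ties count as half a win. This pairwise form is O(P * N), fine for a sketch.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical model scores for four coughs (1 = tuberculosis confirmed).
labels = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.1]
print(auroc(labels, scores))  # 0.75: three of the four positive/negative pairs are ranked correctly
```

Read this way, 0.739 versus 0.652 means HeAR-based scores placed a TB-positive cough above a TB-negative one in about 74 % of such pairs, compared with about 65 % for TRILL.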

HeAR also showed promise in estimating lung function parameters such as FEV1 (forced expiratory volume in one second) and FVC (forced vital capacity) from smartphone recordings. With an average error of only 0.418 liters for FEV1, it was more accurate than the best comparison method (0.479 liters). This could lead to new, accessible screening tools for lung diseases such as COPD.
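Assuming the reported "average error" is a mean absolute error (the article does not spell out the metric), the FEV1 figures are computed like this: take the absolute difference between the measured and predicted value for each recording, in liters, then average. The values below are invented for illustration.

```python
from typing import Sequence


def mean_absolute_error(measured: Sequence[float], predicted: Sequence[float]) -> float:
    """Average of |measured - predicted|, in the same unit as the inputs (liters here)."""
    if len(measured) != len(predicted) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(m - p) for m, p in zip(measured, predicted)) / len(measured)


# Invented FEV1 values in liters: spirometer readings vs. predictions from phone audio.
measured_fev1 = [3.2, 2.5, 4.0]
predicted_fev1 = [3.0, 2.9, 3.7]
print(round(mean_absolute_error(measured_fev1, predicted_fev1), 3))  # 0.3
```

Because the error is in liters, it can be read directly against typical FEV1 values, which makes a gap like 0.418 versus 0.479 liters easy to interpret.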

The researchers stress that HeAR is still a research tool, and any diagnostic application would require clinical validation. There are also technical limitations: HeAR currently processes only two-second audio clips. Google plans to use techniques such as model distillation and quantization so that HeAR can run more efficiently directly on mobile devices.
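Because the model accepts only two-second clips, a longer recording has to be cut into windows before analysis. The sketch below assumes 16 kHz mono audio stored as a plain list of samples and zero-pads the final partial window; both the sample rate and the padding strategy are assumptions, not details from Google's release. How the per-clip results are combined afterwards (averaging, taking the maximum, and so on) is left open.

```python
from typing import List, Sequence

SAMPLE_RATE_HZ = 16_000  # assumption: mono audio sampled at 16 kHz
CLIP_SECONDS = 2.0       # clip length the article says HeAR currently accepts


def split_into_clips(
    samples: Sequence[float],
    sample_rate: int = SAMPLE_RATE_HZ,
    clip_seconds: float = CLIP_SECONDS,
    pad_last: bool = True,
) -> List[List[float]]:
    """Cut a recording into consecutive, non-overlapping fixed-length clips.

    If pad_last is True, a trailing partial clip is zero-padded to full length;
    otherwise it is dropped.
    """
    clip_len = int(sample_rate * clip_seconds)
    clips = []
    for start in range(0, len(samples), clip_len):
        clip = list(samples[start:start + clip_len])
        if len(clip) < clip_len:
            if not pad_last:
                break
            clip.extend([0.0] * (clip_len - len(clip)))
        clips.append(clip)
    return clips


# A 5-second recording yields two full clips plus one zero-padded clip.
recording = [0.1] * (5 * SAMPLE_RATE_HZ)
clips = split_into_clips(recording)
print(len(clips), [len(c) for c in clips])  # 3 [32000, 32000, 32000]
```

Zero-padding keeps the tail of the recording at the cost of feeding the model partly silent input; dropping the tail (`pad_last=False`) is the simpler alternative.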

The Stop TB Partnership, a UN-backed organization that aims to end tuberculosis by 2030, supports this approach. Researchers can now request the trained HeAR model and an anonymized version of the CIDRZ dataset (cough audio data) from Google. More information is available on GitHub.
