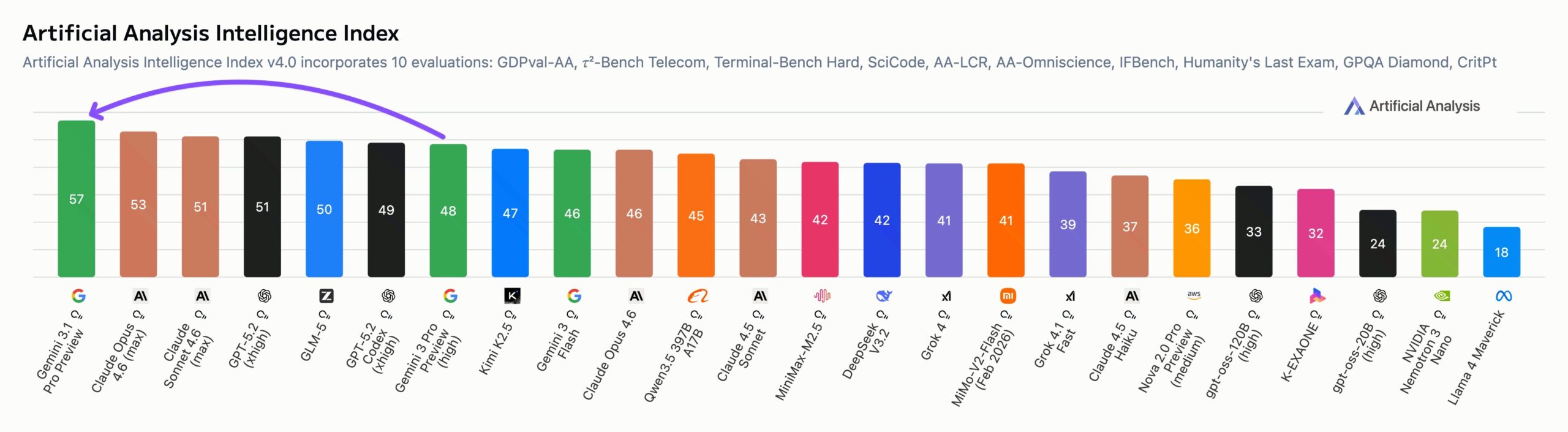

Google's Gemini 3.1 Pro Preview tops Artificial Analysis Intelligence Index at less than half the cost of its rivals

Google's Gemini 3.1 Pro Preview leads the Artificial Analysis Intelligence Index, four points ahead of Anthropic's Claude Opus 4.6 and at less than half the cost to run. The index rolls ten benchmarks into one overall score, and the model ranks first in six of the ten categories, including agent-based coding, knowledge, scientific reasoning, and physics. Its hallucination rate dropped 38 percentage points compared to Gemini 3 Pro, which struggled in that area.

Running the full index with Gemini 3.1 Pro Preview costs $892, compared to $2,304 for GPT-5.2 and $2,486 for Claude Opus 4.6. Gemini used just 57 million tokens, less than half of GPT-5.2's 130 million. Open-source models like GLM-5 come in even cheaper at $547. In real-world agent tasks, though, Gemini 3.1 Pro still falls behind Claude Sonnet 4.6, Opus 4.6, and GPT-5.2.
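For readers who want to check the "less than half the cost" claim, here is a minimal sketch of the arithmetic in Python. It uses only the dollar and token figures quoted above; the names `index_cost_usd`, `tokens_millions`, and `ratio` are made up for illustration and do not come from Artificial Analysis.

```python
# Cost in USD to run the full index, as quoted in the article.
index_cost_usd = {
    "Gemini 3.1 Pro Preview": 892,
    "GPT-5.2": 2304,
    "Claude Opus 4.6": 2486,
    "GLM-5": 547,
}

# Tokens used (in millions); the article only gives these two figures.
tokens_millions = {
    "Gemini 3.1 Pro Preview": 57,
    "GPT-5.2": 130,
}


def ratio(values, model, baseline):
    """Return the model's value as a fraction of the baseline's value."""
    return values[model] / values[baseline]


gemini = "Gemini 3.1 Pro Preview"

# Compare Gemini's cost against each model mentioned in the article.
for rival in ("GPT-5.2", "Claude Opus 4.6", "GLM-5"):
    share = ratio(index_cost_usd, gemini, rival)
    print(f"Gemini cost vs {rival}: {share:.1%}")

# Compare token usage against GPT-5.2.
token_share = ratio(tokens_millions, gemini, "GPT-5.2")
print(f"Gemini tokens vs GPT-5.2: {token_share:.1%}")
```

Any share below 50% against a rival supports the "less than half" wording for that rival. A share above 100%, as with GLM-5, means the rival is cheaper to run.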

As always, benchmarks only go so far. In our own internal fact-checking test, 3.1 Pro performs significantly worse than Opus 4.6 or GPT-5.2. It verified only about a quarter of statements in initial tests, even fewer than Gemini 3 Pro, which was already weak here. So find your own benchmarks.
