GPT-5 allegedly solves open math problem without human help

Key Points

- Mathematician Johannes Schmitt reports that GPT-5 has independently solved an open mathematical problem for the first time.

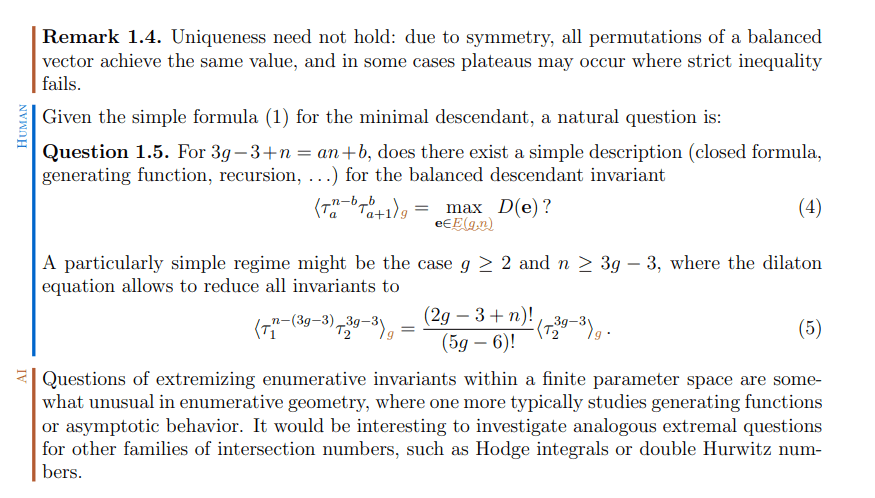

- The resulting paper clearly documents the collaboration between humans and AI by labeling each paragraph as written by either a human or AI, and includes links to prompts and conversation transcripts.

- Schmitt's method allows for high traceability of contributions, but it is time-intensive and raises questions about how to clearly separate human and AI input.

As scientists debate how to transparently integrate AI into research and writing, Swiss mathematician Johannes Schmitt has put forward a concrete proposal.

Schmitt reports on X that GPT-5 has independently solved an open mathematical problem for the first time, without human input or intervention. According to Schmitt, GPT-5 delivered an elegant solution that, surprisingly, drew on techniques from a different area of algebraic geometry rather than the usual methods. Peer review is still pending. Similar anecdotal reports on AI's usefulness in mathematics have recently come from math star Terence Tao, among others.

The resulting paper showcases various forms of human-AI collaboration: proofs from GPT-5 (base model, not Pro) and Gemini 3 Pro, text passages from Claude, and formal Lean proofs via Claude Code and ChatGPT 5.2. As an experiment in transparent AI attribution, every paragraph is labeled as human- or AI-written, with links to prompts and conversation transcripts.

Transparency matters, but it shouldn't become red tape

Schmitt's approach offers a high level of transparency and traceability: anyone can check which ideas came from humans and which from AI. But the approach has drawbacks too. Labeling each paragraph in detail is time-consuming and could become impractical as AI becomes an everyday tool. Transparency matters, but it shouldn't turn into bureaucracy.

The line between human and AI input isn't always clear either. Who writes the prompt? Who selects and corrects the output? For these reasons, Schmitt's paragraph-by-paragraph labeling probably won't transfer easily to other sciences.

Perhaps science needs to answer a more fundamental question first: Why does it matter whether a contribution came from a human alone, a human with AI assistance, or solely from AI—and is the latter even possible without human intention?