Hugging Face works to replicate OpenAI's Deep Research capabilities with open-source AI agent

A team at Hugging Face, led by chief researcher Thomas Wolf, has created an open-source version of OpenAI's Deep Research system in 24 hours.

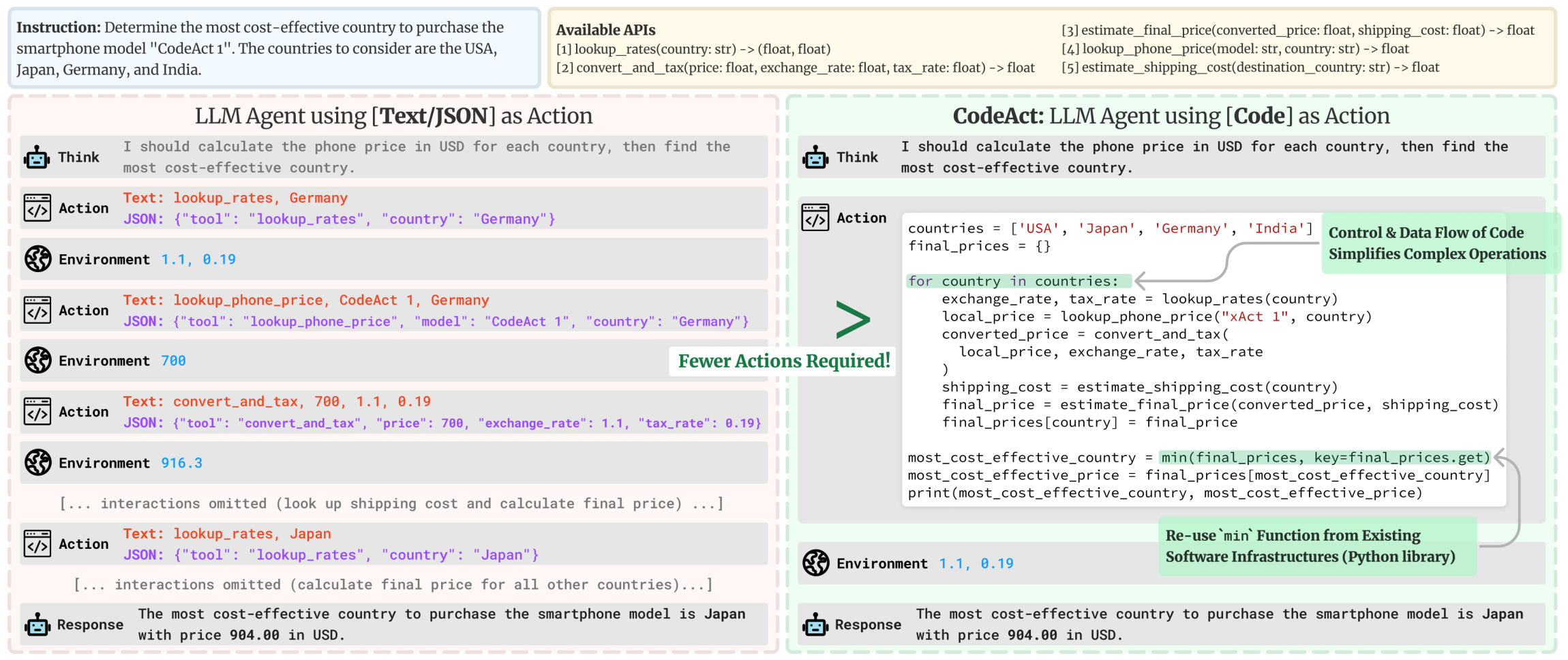

According to the Hugging Face blog, the team aims to make the proprietary technology accessible to everyone by replicating the agent framework behind OpenAI's Deep Research. Their agent writes its actions directly as program code instead of expressing them as JSON. According to the team, this approach reduces the number of processing steps by about 30 percent, which lowers costs and improves performance compared to agents that use JSON actions.
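To make the difference concrete, here is a minimal sketch of the two action styles. It is not Hugging Face's implementation: the tools `search` and `word_count`, the `$prev` placeholder, and both runner functions are hypothetical stand-ins invented for illustration. The point is only that a JSON action describes one tool call per step, while a code action can chain several calls in a single step.

```python
import json

# Hypothetical stand-in tools; a real agent would call a browser or file reader.
def search(query: str) -> str:
    return f"results for {query}"

def word_count(text: str) -> int:
    return len(text.split())

TOOLS = {"search": search, "word_count": word_count}

def run_json_actions(actions: list[str]) -> tuple[object, int]:
    """Execute JSON actions one at a time; each one counts as a separate agent step."""
    result, steps = None, 0
    for raw in actions:
        action = json.loads(raw)
        # "$prev" is a made-up placeholder meaning "result of the previous step".
        args = {k: (result if v == "$prev" else v) for k, v in action["args"].items()}
        result = TOOLS[action["tool"]](**args)
        steps += 1
    return result, steps

def run_code_action(snippet: str) -> tuple[object, int]:
    """Execute one code action that may chain several tool calls in a single step."""
    namespace = dict(TOOLS)
    exec(snippet, {"__builtins__": {}}, namespace)  # toy sandbox, not secure
    return namespace["answer"], 1

json_result, json_steps = run_json_actions([
    '{"tool": "search", "args": {"query": "GAIA benchmark"}}',
    '{"tool": "word_count", "args": {"text": "$prev"}}',
])
code_result, code_steps = run_code_action(
    'answer = word_count(search("GAIA benchmark"))'
)
print(json_result, json_steps)
print(code_result, code_steps)
```

Both runs produce the same result, but the JSON version needs two steps where the code version needs one. The toy example does not reproduce the 30 percent figure, which comes from the team's own measurements.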

For the actual implementation, the team borrowed two key pieces from Microsoft's Magentic-One agent framework: a text-based web browser for searching and a text inspector that can read various file formats.

Testing the system's research capabilities

The team evaluated their system using the GAIA benchmark, which tests how AI agents handle complex research tasks. One example asks: "Which of the fruits shown in the 2008 painting 'Embroidery from Uzbekistan' were served as part of the October 1949 breakfast menu for the ocean liner that was later used as a floating prop for the film 'The Last Voyage'? Give the items as a comma-separated list, ordering them in clockwise order based on their arrangement in the painting starting from the 12 o'clock position. Use the plural form of each fruit."

To solve this puzzle, the AI agent needs to:

- Identify the fruits in the painting through image processing

- Determine which ocean liner appeared in the movie

- Locate its breakfast menu from 1949

- Present the information in the required format
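The final formatting step can be sketched in a few lines. This is an illustration only: the function name `format_answer` is hypothetical, and the fruit and menu values are placeholders, not the real contents of the painting or the menu. It assumes the earlier steps have already produced the fruits in clockwise order and the set of menu items.

```python
def format_answer(clockwise_fruits: list[str], menu_items: set[str]) -> str:
    """Keep the painting's clockwise order, drop fruits not on the menu, join with commas."""
    return ", ".join(fruit for fruit in clockwise_fruits if fruit in menu_items)

# Placeholder data, not the real contents of the painting or the menu.
painting = ["fruit_a", "fruit_b", "fruit_c", "fruit_d"]  # clockwise from 12 o'clock
menu = {"fruit_d", "fruit_b", "coffee"}

answer = format_answer(painting, menu)
print(answer)
```

The hard part of the task is everything before this step: the formatting is trivial once the agent has correctly chained the image analysis, the film lookup, and the menu search.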

Hugging Face's system scored 55.15 percent on these multi-step challenges. That's better than Microsoft Magentic-One's 46 percent, but still trails OpenAI's 67 percent with Deep Research.

The team acknowledges they still have work ahead to match OpenAI's Deep Research, particularly in improving browser interactions. One key difference: Hugging Face relies on available open-source language models, while OpenAI uses its own o3 model, specifically trained for web tasks using reinforcement learning.

Still, Hugging Face's results on the GAIA benchmark, coming on the heels of OpenAI's Deep Research release, suggest the gap between open-source and proprietary AI may be closing faster than expected - another indication, after the DeepSeek dilemma, that proprietary AI may not be the strongest business model.

The team's next step is to develop GUI agents that can interact directly with screens, mice, and keyboards. The code is available on GitHub, and a live demo is online. Other developers have created their own open-source versions, including dzhng, assafelovic, and Jina AI. Hugging Face plans to analyze and document these different approaches.
