Hugging Face's new price calculator shows AI training doesn't come cheap

Hugging Face is offering a new AI service called Training Cluster as a Service, which allows companies to train AI models without owning massive amounts of compute.

AI company Hugging Face is launching Training Cluster as a Service, which gives users access to powerful GPU clusters so they can train their AI models faster and more easily.

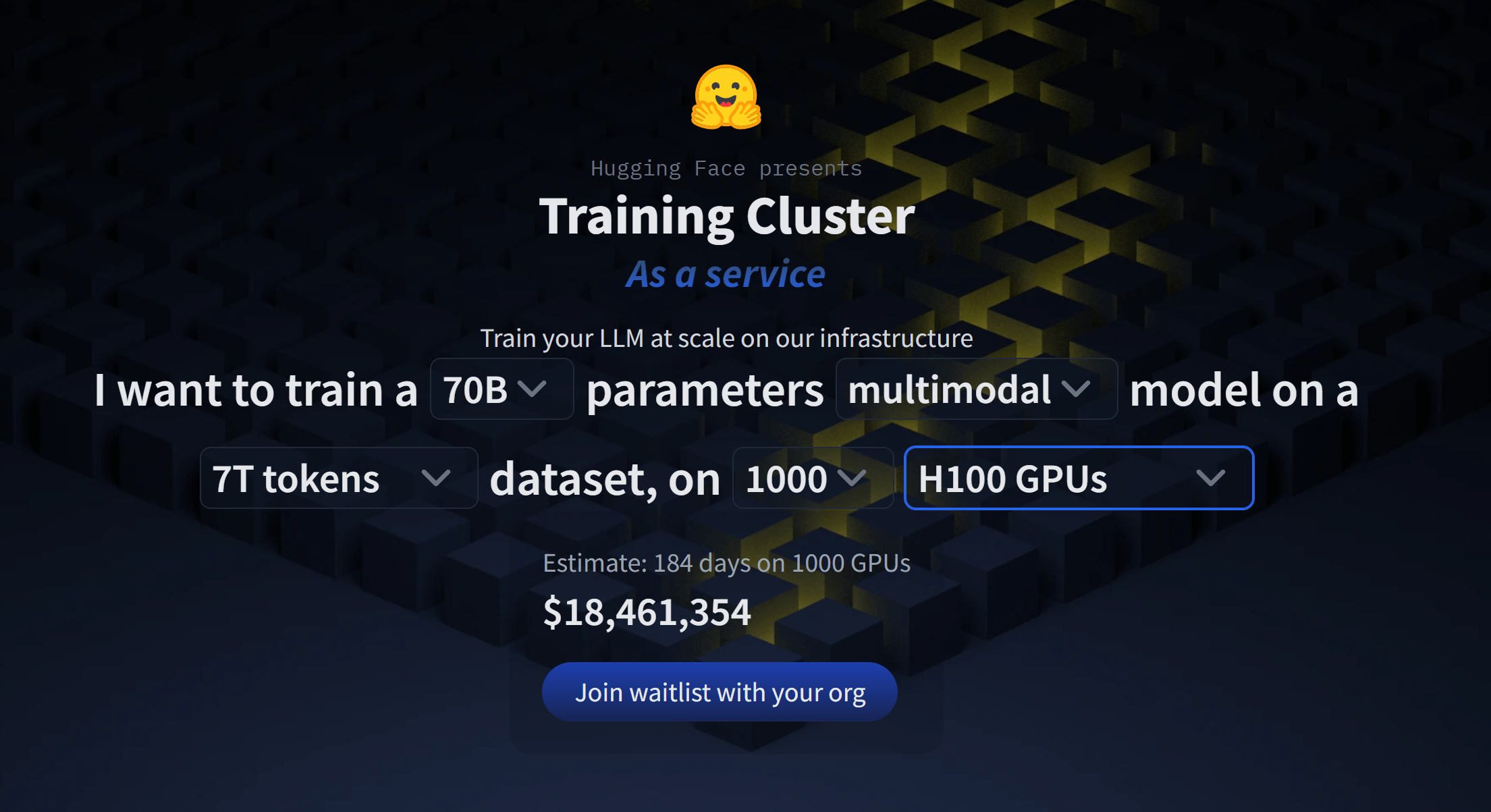

The price calculator for the new service is interesting: Users can configure their desired model based on the number of parameters, capabilities, amount of training data, and desired training speed. The cheapest text model with 7 billion parameters costs an estimated $43,069 and would take about four days to train.

The most expensive multimodal model (text and image) would cost $18,461,354: 70 billion parameters, 7 trillion tokens of training data, and 184 days of training time on 1,000 Nvidia H100 GPUs.
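The figures for the largest configuration can be unpacked with some simple arithmetic. The sketch below assumes that all 1,000 GPUs are billed for the full 184 days and that the quote covers GPU time only. It also uses the common rule of thumb of roughly 6 FLOPs per parameter per training token (6·N·D) to estimate total training compute; that rule is an assumption here, and the article does not say how Hugging Face's calculator arrives at its numbers. All variable and function names are illustrative.

```python
# Figures quoted from Hugging Face's calculator (largest multimodal configuration).
TOTAL_COST_USD = 18_461_354
NUM_GPUS = 1_000
TRAINING_DAYS = 184
PARAMS = 70e9     # 70 billion parameters
TOKENS = 7e12     # 7 trillion training tokens

# Cheapest text configuration (7 billion parameters, about four days).
CHEAPEST_COST_USD = 43_069


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours, assuming every GPU runs for the whole training period."""
    return num_gpus * days * 24


def implied_hourly_rate(cost_usd: float, hours: float) -> float:
    """Average price per GPU-hour implied by the total quote (assumes GPU time is the only cost)."""
    return cost_usd / hours


def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token (an assumption)."""
    return 6 * params * tokens


hours = gpu_hours(NUM_GPUS, TRAINING_DAYS)
rate = implied_hourly_rate(TOTAL_COST_USD, hours)
flops = training_flops(PARAMS, TOKENS)
# Sustained throughput each GPU would need to deliver to finish in the quoted time.
per_gpu_tflops = flops / (NUM_GPUS * TRAINING_DAYS * 86_400) / 1e12
# How many times more the largest configuration costs than the cheapest one.
cost_ratio = TOTAL_COST_USD / CHEAPEST_COST_USD

print(f"GPU-hours:              {hours:,.0f}")
print(f"Implied $ per GPU-hour: {rate:.2f}")
print(f"Training FLOPs (6ND):   {flops:.2e}")
print(f"Needed TFLOP/s per GPU: {per_gpu_tflops:.0f}")
print(f"Largest vs. cheapest:   {cost_ratio:.0f}x")
```

Under these assumptions the quote works out to about 4.4 million GPU-hours at roughly $4.18 per GPU-hour, and each H100 would have to sustain around 185 TFLOP/s. If one further assumes Nvidia's listed dense BF16 peak of roughly 989 TFLOP/s for the H100 SXM, that is a utilization of under 20 percent; the article does not say what utilization or hourly price the calculator actually assumes.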

Training such a model makes sense if you want to be completely independent for security reasons, or if you have very specific use cases for which you can optimize the model.

Even in these cases, however, large AI companies such as Google and OpenAI offer fine-tuning services for their large foundation models, which probably offer a better price-performance ratio.

AI training is expensive

Since the performance of a model depends not just on its size but also on the quality of the training data and the architecture, it is difficult to make a general statement about how well models trained with Hugging Face's service will perform.

However, even the most powerful multimodal model that can currently be trained via the HF service, with 70 billion parameters, would likely not stand a chance against GPT-4 or Google's Gemini when it comes to handling as many tasks as possible at the highest possible quality.

GPT-4 reportedly has about 1.8 trillion parameters, which would make it roughly 25 times larger. Estimates of its training costs range from $68 million to more than $100 million.
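The size and cost gap can be checked with a few divisions. This sketch simply compares the reported GPT-4 figures quoted above with the largest configuration in Hugging Face's calculator; the GPT-4 parameter count and cost range are reported estimates, not official numbers, and the variable names are illustrative.

```python
# Largest configuration in Hugging Face's calculator.
HF_MAX_PARAMS = 70e9
HF_MAX_COST_USD = 18_461_354

# Reported estimates for GPT-4 (not official figures).
GPT4_PARAMS_REPORTED = 1.8e12
GPT4_COST_LOW_USD = 68e6
GPT4_COST_HIGH_USD = 100e6   # the article says "more than $100 million", so this is a floor

size_ratio = GPT4_PARAMS_REPORTED / HF_MAX_PARAMS
cost_ratio_low = GPT4_COST_LOW_USD / HF_MAX_COST_USD
cost_ratio_high = GPT4_COST_HIGH_USD / HF_MAX_COST_USD

print(f"Parameter ratio: {size_ratio:.1f}x")
print(f"Cost ratio:      {cost_ratio_low:.1f}x to more than {cost_ratio_high:.1f}x")
```

On these figures GPT-4 is about 25.7 times larger in parameter count, while its estimated training cost is only about 3.7 to more than 5.4 times that of the most expensive HF configuration, so cost does not scale one-to-one with parameter count in these estimates.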

This shows that, from a financial perspective alone, cutting-edge AI models still have a strong moat. It also suggests that Europe's AI startups are likely to be underfunded, although advances in processing hardware, more efficient architectures, and higher-quality training data that reduces the amount of data needed could lower the cost of training.
