Human perception can be subtly influenced by watermarked AI images, Deepmind study finds

Watermarked AI-generated images can make people think of cats without them knowing why, according to a new study by Deepmind.

Watermarks in AI-generated images can be an important safety measure, for example, to quickly show whether an image is real or generated without the need for forensic investigation.

Watermarking often involves adding features to the image that are invisible to the human eye, such as slightly altered pixel structures, also known as adversarial perturbations. Such perturbations cause a machine learning model to misinterpret what it sees: for example, an image might show a vase, but the model labels it a cat.

Until now, researchers believed that these image distortions, which are intended for computer vision systems, would not affect humans.

Watermarks can affect human perception

Researchers at Deepmind have now tested this theory in an experiment and shown that subtle changes to digital images also affect human perception.

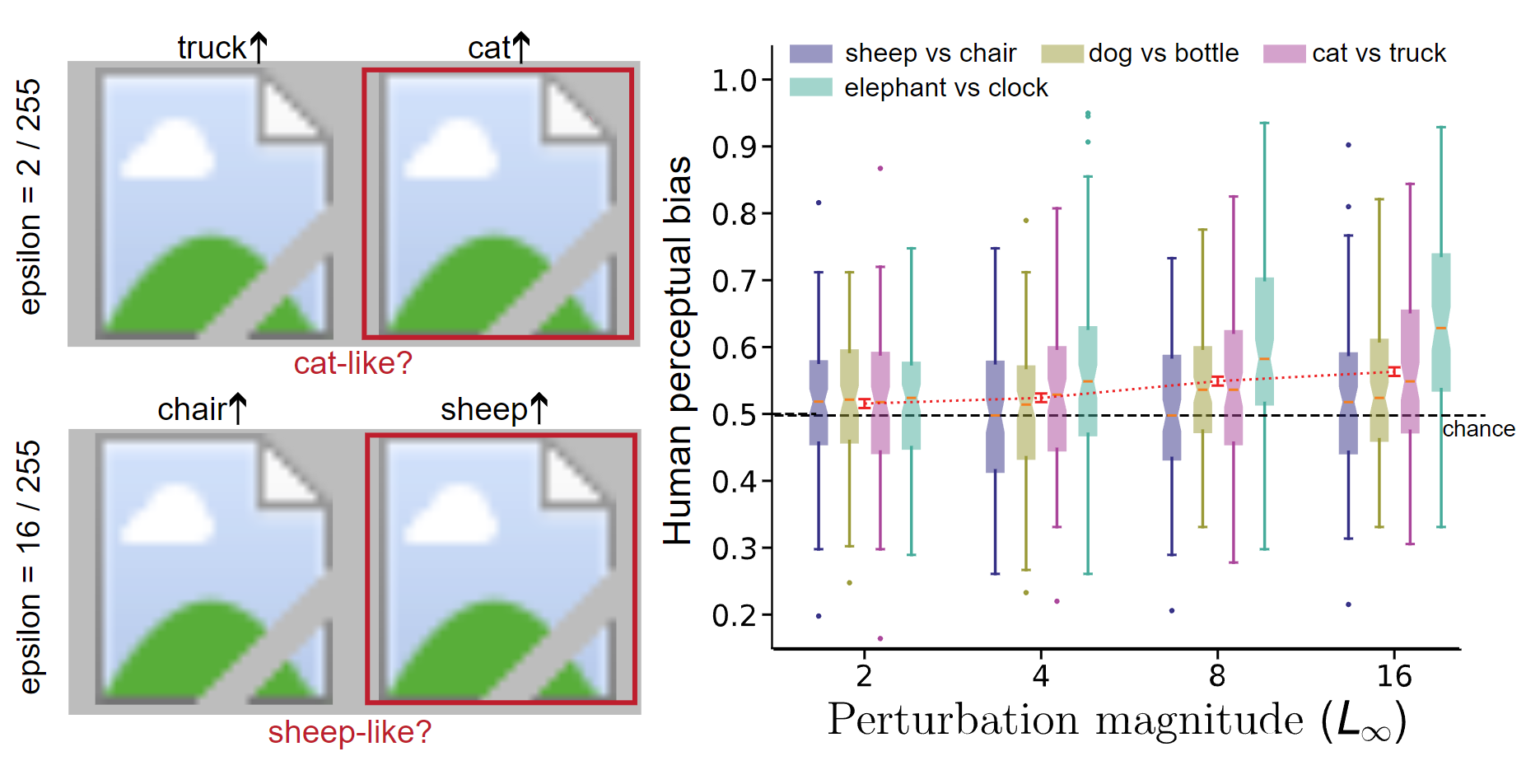

Gamaleldin Elsayed and his Deepmind team showed human test subjects pairs of images that had been subtly altered with pixel modifications.

In a sample image showing a vase, an AI model incorrectly identified the vase as a cat or a truck after manipulation. The human subjects still saw only the vase.

However, when asked which of the two images looked more like a cat, they tended to choose the image that had been manipulated to look like a cat to the AI model, even though both images looked the same to them.


According to the Deepmind team, this is no coincidence. The study showed that for many pairs of manipulated images, the selection rate for the manipulated image was reliably above chance, even when no pixel was changed by more than two levels on the scale from 0 to 255.
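To make the two-level bound concrete, here is a minimal Python sketch that limits a perturbation so that no pixel value moves by more than two levels on the 0 to 255 scale. It is an illustration only, not the study's method: the function name `apply_bounded_perturbation` is hypothetical, and the random `direction` is a stand-in for a real adversarial perturbation, which would be computed against a specific model.

```python
import random

def apply_bounded_perturbation(pixels, direction, epsilon=2):
    """Shift each 8-bit pixel value by at most `epsilon` levels.

    `pixels` is a flat list of ints in 0..255; `direction` is a list of
    floats of the same length giving the desired change per pixel.
    """
    perturbed = []
    for value, step in zip(pixels, direction):
        # Round to whole levels, then clip the change to +/- epsilon.
        delta = max(-epsilon, min(epsilon, round(step)))
        # Keep the result inside the valid 0..255 range.
        perturbed.append(max(0, min(255, value + delta)))
    return perturbed

# Assumption: random data stands in for an image and for the perturbation.
rng = random.Random(0)
pixels = [rng.randrange(256) for _ in range(64 * 64 * 3)]
direction = [rng.gauss(0.0, 5.0) for _ in pixels]

perturbed = apply_bounded_perturbation(pixels, direction)
max_change = max(abs(a - b) for a, b in zip(perturbed, pixels))
```

A change of at most two levels is under one percent of the full 0 to 255 range, which is why the two images in a pair look the same to a viewer.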

Concerns about subtle crowd manipulation

The effects of this image manipulation are much more dramatic in machines than in humans, especially when the manipulation is used to negative ends. Nevertheless, it can nudge people toward the same decisions that a machine would make.

The study emphasizes that these small changes can have a large impact when applied at scale.

"Even if the effect on any individual is weak, the effect at a population level can be reliable, as our experiments may suggest," the team writes.

For example, if image manipulation makes a politician's photo seem more like a cat or a dog, this could affect people's perceptions of the politician.
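The population-level argument quoted above can be illustrated with a short calculation. The Python sketch below uses a normal approximation to the binomial distribution to estimate how likely it is that more than half of a group picks the manipulated image. The selection rate of 0.52 is an assumed value chosen for illustration, not a figure from the study, and the function name `majority_probability` is hypothetical.

```python
import math

def majority_probability(rate, n):
    """Approximate probability that more than half of n independent
    viewers pick the manipulated image, given a per-viewer `rate`.

    Uses the normal approximation to the binomial distribution.
    """
    # Standard deviation of the observed share among n viewers.
    std = math.sqrt(rate * (1.0 - rate) / n)
    # Distance of the true rate from the 50 percent chance level.
    z = (rate - 0.5) / std
    # Standard normal cumulative distribution function at z.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Assumption: a weak individual effect, two points above chance.
assumed_rate = 0.52
small_group = majority_probability(assumed_rate, 100)
large_group = majority_probability(assumed_rate, 10_000)
```

Under this assumption, a majority in a group of 100 is only somewhat more likely than a coin flip, while a majority in a group of 10,000 is close to certain. The individual effect stays the same; only the group size changes.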

The Deepmind scientists recommend that AI safety research be based on experimentation rather than on intuition and self-reflection. Cognitive science and neuroscience also need to develop a deeper understanding of AI systems and their potential impact.

With SynthID, Deepmind is also developing a watermarking system that it uses in image generation systems such as Imagen 2, although it may work differently from the adversarial perturbations discussed in the research above.
