Humans might need a permission slip to use the internet soon, thanks to AI

A team of researchers from OpenAI, Microsoft, MIT, and other tech companies and universities has developed a method that lets people anonymously prove they are human online. The method aims to counter a potential flood of AI bots and identity theft.

Recently, a "Mr. Strawberry" account on X has been stirring up the AI community by allegedly leaking information about OpenAI's latest AI technology, codenamed "Strawberry". Some suspect the account could be an AI bot controlling other bots, including voice output in audio chats. While it's more likely that a human is behind it, the fact that a bot is even considered a possibility shows how difficult it's becoming to distinguish humans from machines online.

Personhood credentials aim to separate the humans from the ChatGPTs

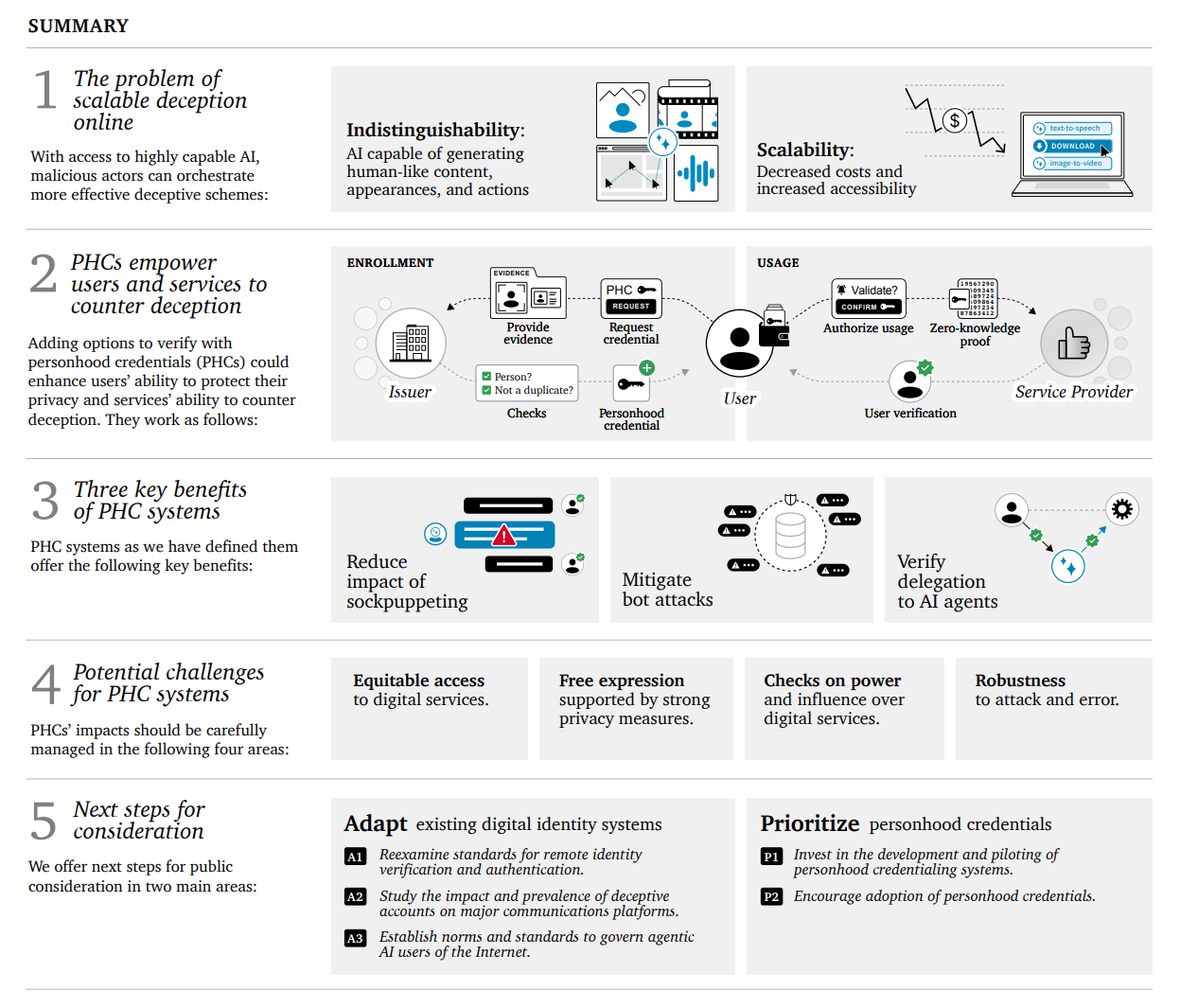

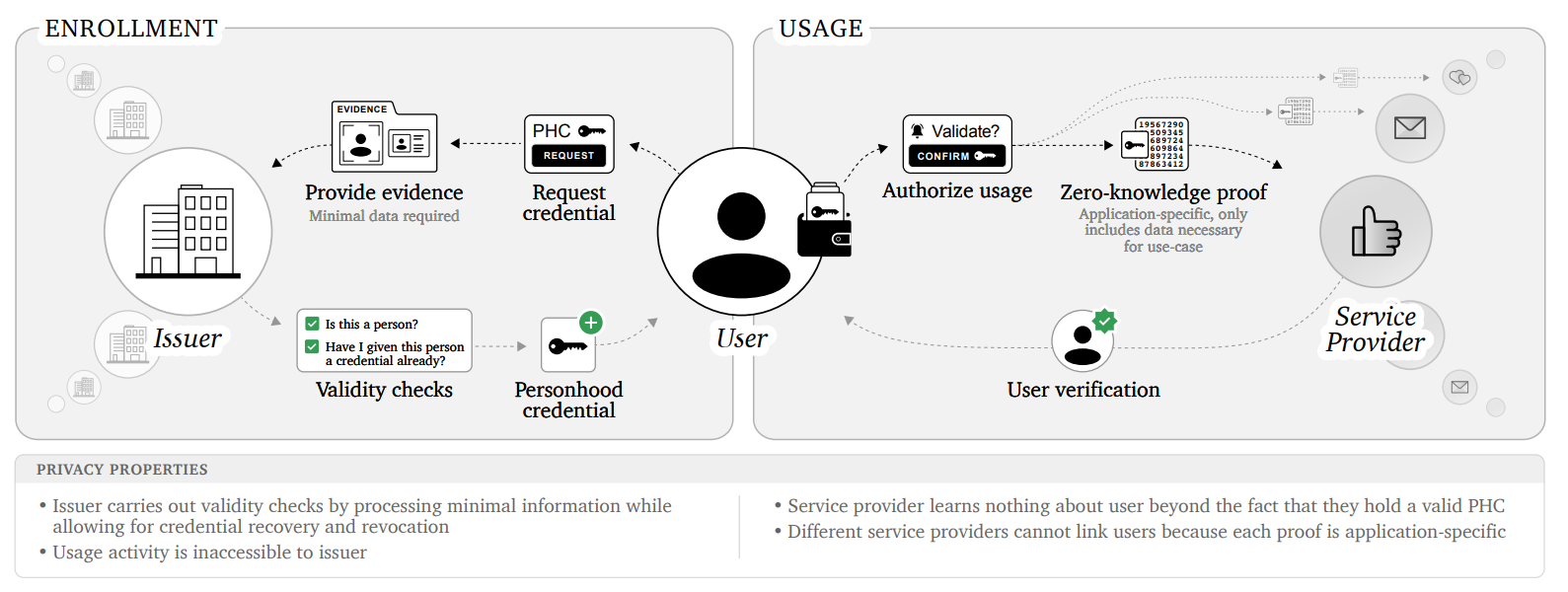

To address this issue, researchers propose a solution called Personhood Credentials (PHCs) in a new paper. These digital credentials would confirm that the holder is a real person without revealing any other identity information.

PHCs aim to differentiate humans from advanced AI systems by exploiting two key limitations of AI: the systems can't convincingly mimic humans offline (yet), and, according to the researchers, they can't bypass modern cryptographic systems (yet).

The researchers outline two core requirements for a PHC system: An issuer should "maintain a one-per-person per-issuer credential limit" and have ways to mitigate credential transfer or theft.
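The first requirement can be illustrated with a minimal sketch. It is not the paper's design: the `Issuer` class, its `issue` method, and the use of a tax ID string as the verified identifier are assumptions made for illustration, and the random token stands in for what would be a cryptographically signed credential in a real system.

```python
import hashlib
import secrets


class Issuer:
    """Hypothetical issuer that hands out at most one credential per person."""

    def __init__(self, name: str) -> None:
        self.name = name
        # Digests of identifiers that already hold a credential from this issuer.
        self._enrolled: set[str] = set()

    def issue(self, verified_person_id: str) -> str:
        # Assumption: the person was verified offline before this call.
        digest = hashlib.sha256(
            f"{self.name}:{verified_person_id}".encode("utf-8")
        ).hexdigest()
        if digest in self._enrolled:
            raise ValueError("this issuer already issued a credential to this person")
        self._enrolled.add(digest)
        # Placeholder credential: a random token, not a real signed credential.
        return secrets.token_hex(16)
```

A second call to `issue` with the same identifier raises `ValueError`, while a different issuer can still issue its own credential to the same person.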

In addition, PHCs should allow anonymous interaction with services through service-specific pseudonyms, with digital activities untraceable by the issuer and unlinkable by service providers.
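To make the idea of service-specific pseudonyms concrete, here is a simplified sketch. It is an assumption for illustration and not the construction from the paper: the function name `service_pseudonym`, the holder-only secret, and the example service names are hypothetical, and a real system would also need a cryptographic proof that the pseudonym belongs to a valid credential.

```python
import hashlib
import hmac
import secrets


def service_pseudonym(holder_secret: bytes, service_id: str) -> str:
    """Derive a stable pseudonym for one service from a secret only the holder knows."""
    return hmac.new(
        holder_secret, service_id.encode("utf-8"), hashlib.sha256
    ).hexdigest()


# The secret stays with the holder; neither issuer nor services ever see it.
holder_secret = secrets.token_bytes(32)

forum_name = service_pseudonym(holder_secret, "forum.example")
shop_name = service_pseudonym(holder_secret, "shop.example")
```

In this sketch, the same person always appears under the same pseudonym at one service, while two services cannot match their pseudonyms to each other without knowing the holder's secret.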

Potential issuers could include countries that offer a PHC to every tax ID holder, or other trusted institutions such as foundations.

Balancing privacy and fraud prevention

The authors say a PHC system must balance two potentially conflicting goals: protecting user privacy and civil liberties while limiting fraudulent activity. They propose having multiple issuers, each limiting credentials per person.

This would allow people to receive a limited total number of credentials: more than one to protect privacy, but not so many that the system loses its ability to prevent fraud at scale.

The researchers emphasize the need to develop proof-of-personhood systems such as PHCs and recommend prioritizing their design, testing, and adoption as a specific countermeasure against AI impersonation.

OpenAI researcher Steven Adler, who contributed to the paper, references Meta CEO Mark Zuckerberg's prediction of "hundreds of millions or billions of AI agents using the Internet on people’s behalf" and asks: "What happens if he’s correct?"
