InstantMesh transforms a 2D image into a 3D object in seconds

InstantMesh, an AI framework developed by researchers at Tencent PCG ARC Lab and ShanghaiTech University, can generate high-quality 3D meshes from a single 2D image in just ten seconds, according to a preprint published by the research team.

The open-source framework consists of two main components: a multi-view diffusion model and a sparse-view reconstruction model that builds a 3D mesh from a few views. From a single input image, the multi-view diffusion model synthesizes 3D-consistent views from different angles, and these views then serve as input for the reconstruction model.

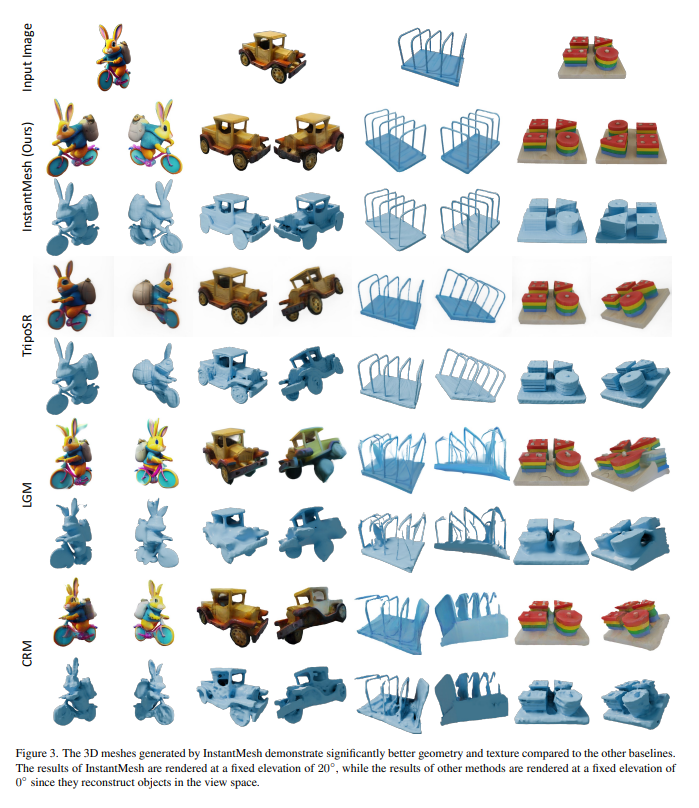

According to the researchers, InstantMesh relies on a mesh representation instead of the triplane NeRF representation used in previous methods, which yields smoother meshes and makes post-processing easier. The framework achieves significantly better results than current baseline methods such as TripoSR, LGM, and Stable Video 3D, both in the perceived quality of synthesized novel views and in geometric accuracy.

Demo available on Hugging Face

Along with the paper, the researchers have made all the code, trained model variants, and a demo available on Hugging Face. Users can choose from predefined sample images or upload their own, including both photographs and AI-generated images.

If the result is poor, the developers recommend changing the random seed, which determines the generated multi-view images and can have a significant effect on the quality of the final 3D object.

Future plans for InstantMesh include increasing the resolution of the generated 3D meshes and using more advanced multi-view diffusion architectures to further improve consistency between views.

Technologies like these could significantly increase productivity in the 3D industry, especially in video game development. But it remains an open question how much these models need to improve before their output can be used without extensive post-processing; in their current state, cleaning up the results could create more work than it saves.
