Kling 2.6 adds voice control and motion upgrades as AI video tools race toward realism

Key Points

- Kuaishou has updated its AI video generator Kling 2.6 with native audio features, voice control, and improved motion control.

- Users can now specify exactly what characters say, upload their own voices, and use those voices in generated videos.

- The motion control improvements allow for more detailed full-body movements, including fast or complex actions like dance or martial arts, according to the company.

Chinese AI company Kuaishou has released two new features for its Kling 2.6 video generator: voice control for spoken content and improved motion control for more precise movement handling.

The new voice control builds on the synchronized audio generation that Kling 2.6 introduced recently. Like Google's Veo 3 or OpenAI's Sora 2, the model can generate audio that matches the video content, including sound effects, voices, and music.

According to Kling AI, the feature supports various types of human voices: speaking, dialog, narration, singing, and rapping. It also handles ambient noise and composite scene sounds. The model accepts both pure text descriptions and combinations of text and images as input.

Kling AI highlights numerous use cases: product demos, lifestyle vlogs, news broadcasts, sports commentary, documentaries, interview formats, dramatic short films, and music performances, including singing and even polyphonic choirs.

Custom voice training enables more consistent characters

The new Voice Control feature lets users upload recordings of their own voice to train a voice model, or upload an audio file directly. The trained or uploaded voice can then be applied to text-to-video creations.

This improves character consistency: characters can now speak with a defined, recognizable voice, which makes it possible to keep the same character consistent across multiple video clips.

Kling AI didn't share technical details about how Kling 2.6 was trained and built, but the company has published a user guide for the new features.

Motion control now handles complex actions better

The second major feature is an upgrade to motion control. According to Kling AI, the system now captures full-body movements in greater detail. Even fast and complex actions like martial arts or dances should be processed more accurately.

The company specifically highlights improvements in two areas where AI videos typically struggle: hand movements should now appear precise and blur-free, while facial expressions and lip sync should remain natural.

Users can upload motion references between 3 and 30 seconds long to create uninterrupted sequences. Scene details can also be adjusted using text prompts.

Impressive examples are already circulating on social media. They suggest that the volume of AI-generated video will keep growing, as platform algorithms reward quick clicks and AI creators capitalize on this low-hanging fruit. At the same time, some genuinely creative ideas are emerging.

Competitive pricing

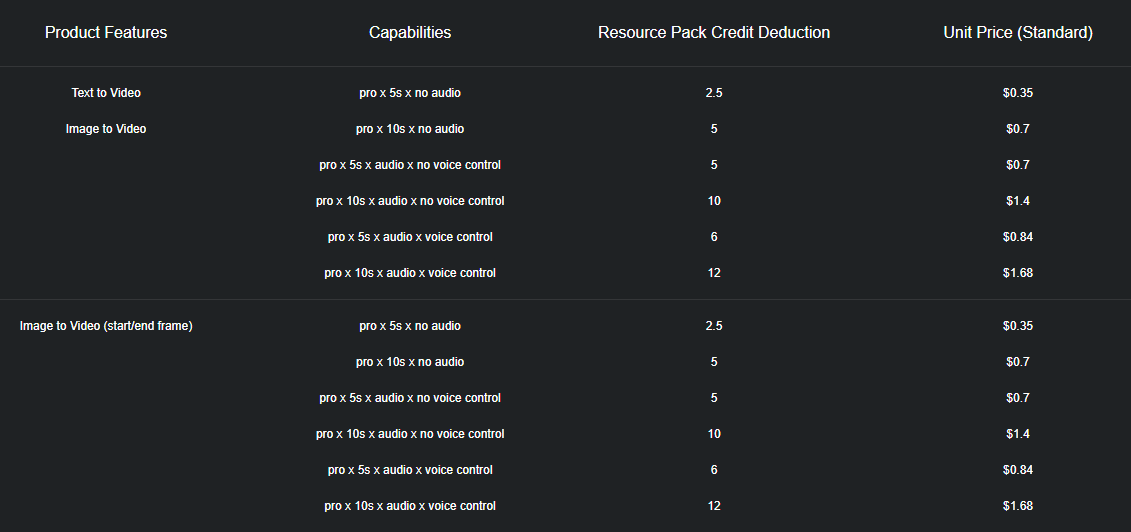

Kling is available through third-party providers like Fal.ai, Artlist, and Media.io in addition to its own platform. API pricing through these providers runs about $0.07 to $0.14 per second of generated video, which is highly competitive. Prices vary based on generation speed, length, and resolution. Kling AI's own platform uses a credit system.

In early December, Kuaishou unveiled Video O1, which the company calls the "world's first unified multimodal video model," combining generation and editing in one system. Video O1 can edit existing videos using text commands, for example by changing the protagonist, the weather, or the visual style.

With these new Kling 2.6 features, Kuaishou is competing in a crowded market against Western players like Google, OpenAI, and Runway, as well as Chinese competitors including Hailuo, Seedance, and Vidu.

Kuaishou operates Kwai, one of the world's largest short video platforms and comparable to TikTok. This gives the company access to massive amounts of paired video and audio as well as motion data, which could be used to train video models with synchronized sound and realistic motion.
