Mastra's open source AI memory uses traffic light emojis for more efficient compression

The open-source framework Mastra compresses AI agent conversations into dense observations modeled after how humans remember things, prioritized with emojis. The system sets a new top score on the LongMemEval benchmark.

AI agents have a fundamental problem with memory. The longer a conversation runs, the more tokens fill the context window. The model gets slower, more expensive, and less accurate. Mastra, an open source framework for agent-based AI systems, tackles this problem with a new approach called "observational memory."

Instead of keeping the entire message history in the context window, two background agents watch the conversation and compress it into dense notes. The idea is inspired by how the human brain works, distilling millions of visual impressions into a handful of observations rather than storing every single detail.

The system runs without a vector database or knowledge graph. Observations are stored as plain text in a standard storage backend like PostgreSQL, LibSQL, or MongoDB and loaded directly into the context window instead of being pulled through embedding search.

Emoji priority system borrows from software logging

The prioritization system for compressed observations is one of the more creative design choices. Mastra reimplements classic logging levels from software development using emojis that language models can parse particularly well.

A red circle 🔴 flags important information, a yellow circle 🟡 marks potentially relevant details, and a green circle 🟢 tags pure context without any particular priority. The system also uses a three-date model with observation date, referenced date, and relative date, which Mastra says improves temporal reasoning.

Date: 2026-01-15

- 🔴 12:10 User is building a Next.js app with Supabase auth, due in 1 week (meaning January 22nd 2026)

- 🔴 12:10 App uses server components with client-side hydration

- 🟡 12:12 User asked about middleware configuration for protected routes

- 🔴 12:15 User stated the app name is "Acme Dashboard"

New messages get appended until a configurable threshold is reached, 30,000 tokens by default. Then an "observer" agent kicks in and compresses the messages into emoji-annotated observations.

The compression ratio varies a lot depending on the content. For text-only conversations like those in the LongMemEval benchmark, Mastra reports 3x to 6x compression on its research page, around 6x in the benchmark itself.

For agents that make many tool calls, like browser agents with Playwright screenshots or coding agents scanning files, compression jumps to 5x to 40x. The noisier the tool output, the higher the ratio. A Playwright session with 50,000 tokens per page screenshot shrinks down to a few hundred tokens.

If the observations themselves grow past a second threshold, 40,000 tokens by default, a "Reflector" agent takes over. It condenses the observations further, combines related entries, and drops anything no longer relevant. This creates a three-tier system of current messages, observations, and reflections.

Continuous event logging replaces one-shot summarization

Mastra explicitly distinguishes observational memory from conventional summarization, where the message history gets summarized once when context overflow is about to hit. Observational memory instead works as a continuous, append-only event log.

The observer documents what's happening, what decisions have been made, and what has changed on an ongoing basis. Even during reflection, the log is only restructured, not summarized. Connections get made and redundant entries get removed.

A key advantage, according to Mastra, is compatibility with prompt caching as supported by Anthropic, OpenAI, and other providers. Since observations are only appended and never dynamically recompiled, the prompt prefix stays stable and enables full cache hits on every turn.

The full cache only gets invalidated during reflections, which happen infrequently. This cuts costs and tackles two core problems with long conversations: performance degradation from too much message history and irrelevant tokens eating up space in the context window.

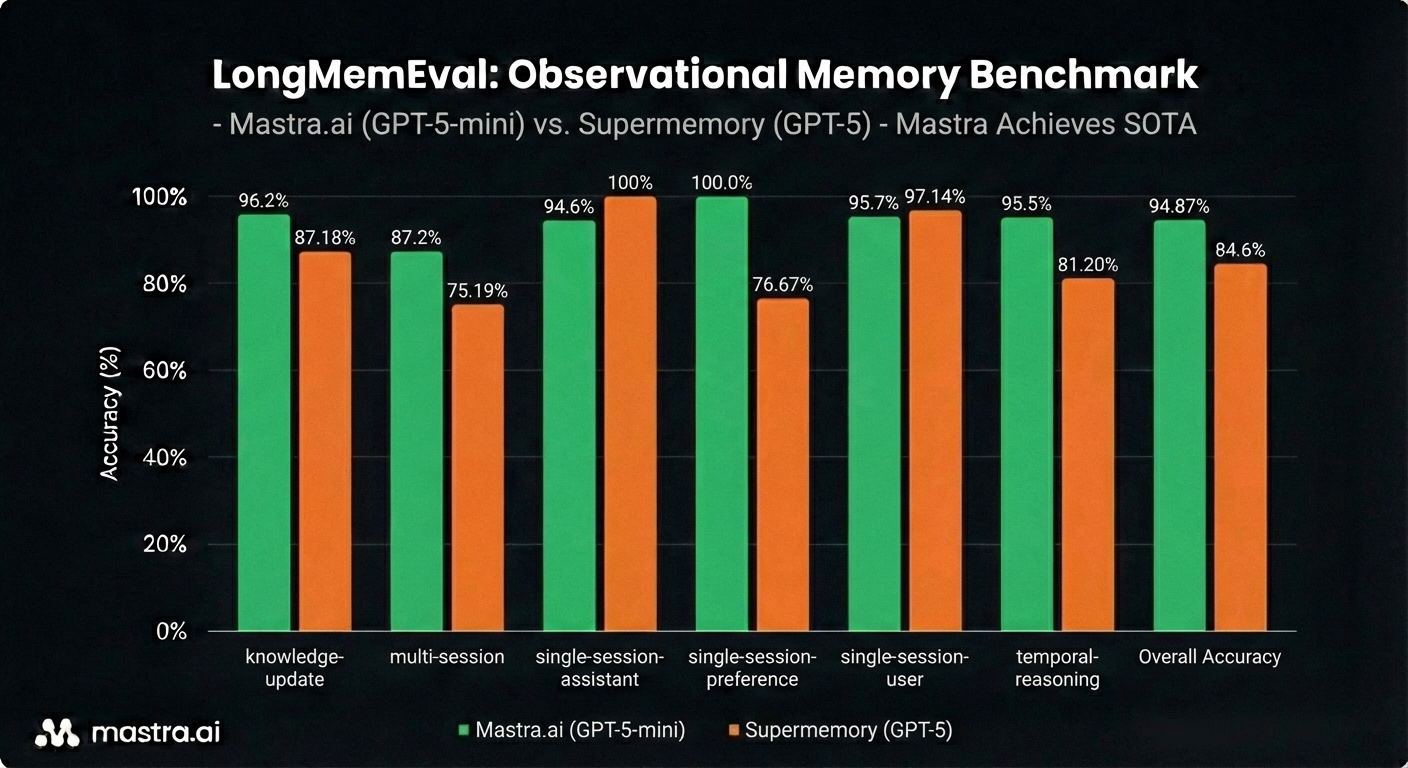

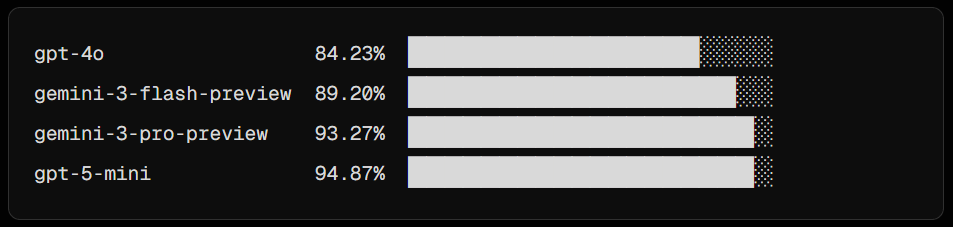

Observational memory sets a new LongMemEval record

According to Mastra, Observational Memory scores 94.87 percent on the LongMemEval benchmark with GPT-5 Mini, more than three points above any previously recorded result. With GPT-4o, the system hits 84.23 percent, beating both the Oracle configuration (which only receives the relevant conversations) and the previous best score from Supermemory. Competing systems like Hindsight rely on multi-stage retrieval and neural reranking, while Observational Memory gets the job done with a single pass and a stable context window.

The system does have some limitations. Observation currently runs synchronously and blocks the conversation while the Observer processes messages. Mastra says an async background mode is coming soon.

Anthropic's Claude 4.5 models also don't currently work as Observer or Reflector. Mastra is positioning Observational Memory as the successor to its previous memory systems Working Memory and Semantic Recall, which the team shipped in the spring. The framework's code is publicly available on GitHub.

AI memory is turning into an architecture race

Last year, a Chinese research team introduced "GAM," a similarly motivated memory system that also uses two specialized agents, called "Memorizer" and "Researcher," to fight so-called "context rot" in long conversations. Unlike Mastra's text-based approach, GAM relies on vector search and iterative retrieval across a complete history.

Around the same time, Deepseek shipped an OCR model that processes text documents as compressed images, designed to cut the load on the context window by up to 10x. Similar to how people remember the look of a page rather than each individual sentence, the system saves the visual impression instead of the full text.

Researchers from Shanghai outlined the vision of a "Semantic Operating System" as a lifelong AI memory that doesn't just store context but actively manages, adapts, and forgets it, much like the human brain.

Efficient memory for AI agents is quickly becoming one of the hottest research areas in the industry, recently boosted by the personal assistant OpenClaw. Chatbots like ChatGPT have offered memory features for a while now, but they tend to rely on conventional summarization, which brings its own error potential when working with generative AI.

Regardless of which architecture wins out or whether a dedicated AI memory system gets widely adopted, solid context engineering, feeding only the relevant information to the AI model at the right time, will likely play a major role going forward. If nothing else, it keeps things resource efficient. With today's technology, context engineering is still essential to keeping AI error rates low.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.