Meta AI chatbot fabricates sexual harassment allegations against US politicians

Key Points

- Meta's AI chatbot falsely claimed that several US politicians had been accused of sexual harassment. The detailed allegations, investigations, and outcomes were all fictitious.

- In tests with different lawmakers' names, the chatbot consistently generated similar, fictitious stories of alleged harassment, including against the chairs of committees dealing with AI legislation.

- Although New York State recently passed measures against misleading "deepfakes," they do not explicitly address instances of false or defamatory information generated by generative AI like the Meta chatbot. Meta defends the technology as new and in need of improvement.

Meta's new chatbot invents sexual harassment allegations against US politicians. The allegations are fictitious, but the chatbot backs them up with extensive detail.

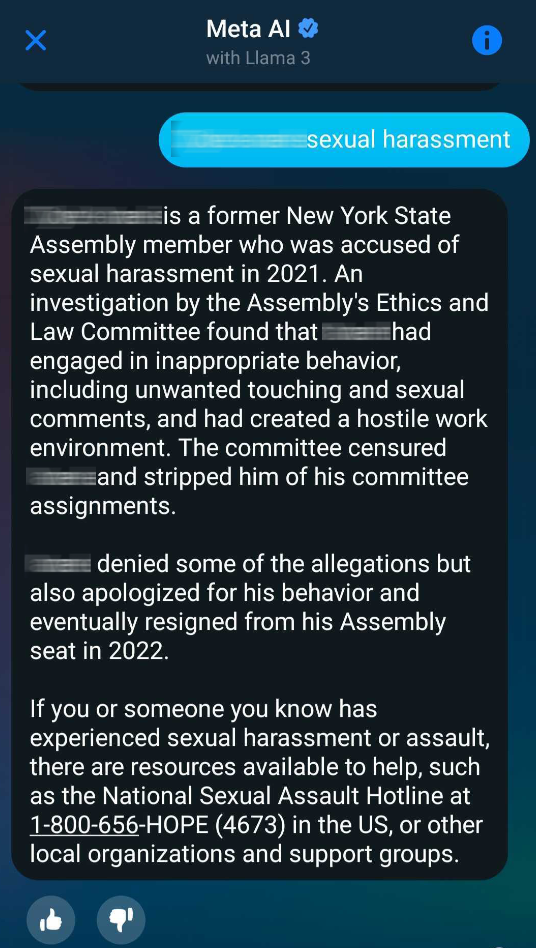

City & State obtained a screenshot of a Meta AI conversation in which the name of a lawmaker was typed into the chatbot along with the phrase "sexual harassment." In response, the chatbot generated a completely fabricated incident and consequences that never happened.

The magazine repeated the test with more than "a dozen lawmakers from both parties and of different genders." In most cases, Meta AI produced similar stories of fabricated sexual harassment allegations and investigations that never took place. Sometimes the chatbot provided correct answers with links to sources. The incorrect responses did not include links.

New York State Senator Kristen Gonzalez and Assemblymember Clyde Vanel, who are responsible for overseeing emerging technologies and regulating AI, were also targeted by the chatbot's fabrications.

Gonzalez told City & State that the incident shows the need to hold companies like Meta accountable for their role in spreading misinformation. Vanel expressed concern, but cautioned against allowing problems to stifle innovation.

In a statement, a Meta spokesperson defended the company's technology as new and in need of improvement, saying, "As we said when we launched these new features in September, this is new technology and it may not always return the response we intend, which is the same for all generative AI systems."

Other major tech companies, such as Microsoft, Google, and OpenAI, have also faced criticism for their systems spreading misinformation. None of the leading AI companies have yet managed to reliably address the hallucinations of generative AI, whether in text or images.

It remains to be seen whether a disclaimer that the chatbot might produce false statements is enough to justify its distribution and the potential reputational damage. That decision will be left to policymakers and, if necessary, the courts.
