Meta's Free Transformer introduces a new approach to LLM decision-making

A Meta researcher has developed a new AI architecture called the Free Transformer. This model lets language models pick the direction of generated text before they start writing, and the research shows it performs especially well on programming and math tasks.

François Fleuret from Meta uses a film review generator to explain the challenge. Standard transformers write word by word and only gradually reveal if the review is positive or negative. The model doesn't make this call up front; it just emerges as tokens are chosen.

The study highlights a few problems with this. The model has to keep inferring where the text is heading from the tokens it has already produced, which makes the computation more complicated than it needs to be. A single wrong word can also send the output in the wrong direction.

The Free Transformer addresses this by making a decision first. For example, if it's writing a movie review, it decides right away if the review is positive or negative, then generates text that matches that choice.

Adding new functions with little extra overhead

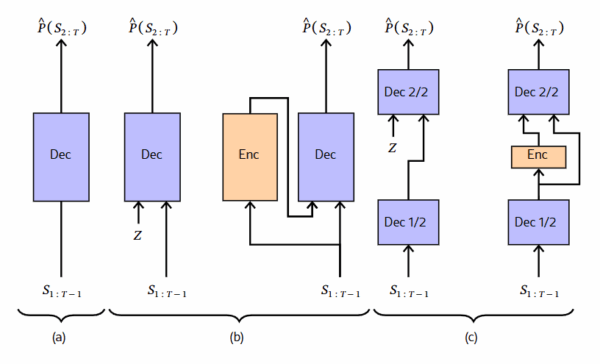

Technically, the Free Transformer adds a layer in the middle of the model. This layer takes random input during text generation and turns it into structured decisions. A separate encoder learns during training which hidden choices lead to which outputs.

Unlike a standard transformer, which only sees previous words, this encoder looks at the entire text at once. That lets it spot global features and pick the right hidden decision. A conversion step then translates these decisions into a format the decoder can use.
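The generation-time path described above, random input turned into a discrete decision and then into something the decoder can consume, can be illustrated with a minimal sketch. It is not the paper's implementation. The 16-bit width is an assumption, chosen because 2^16 = 65,536 fits the "over 65,000" figure the article reports. The one-hot output format and all function names are also hypothetical.

```python
import random

NUM_BITS = 16  # assumption: 2**16 = 65,536 possible hidden decisions


def sample_bits(rng, num_bits=NUM_BITS):
    """Draw independent fair coin flips: the random input used at generation time."""
    return [rng.randint(0, 1) for _ in range(num_bits)]


def bits_to_index(bits):
    """Pack a list of bits (most significant first) into one integer."""
    index = 0
    for bit in bits:
        index = (index << 1) | bit
    return index


def one_hot(index, size):
    """Hypothetical conversion step: a vector with a single 1.0 at the chosen index."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


rng = random.Random(0)                   # fixed seed so the sketch is repeatable
bits = sample_bits(rng)
index = bits_to_index(bits)              # which hidden decision was drawn
latent = one_hot(index, 2 ** NUM_BITS)   # format a decoder layer could project
```

During training, the encoder would supply the bits instead of the random number generator. At generation time the model only needs the random draw.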

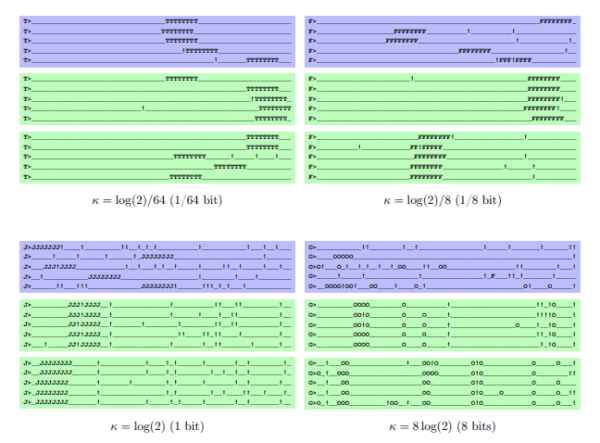

The system can pick from over 65,000 hidden states. A control process limits the amount of information in these decisions. If there were no guardrails, the encoder could just encode the entire target text up front, which would make the model useless in practice.
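The article does not spell out how the control process works. A common way to cap the information in a learned latent variable is a KL-divergence penalty with a "free bits" allowance, and the sketch below assumes that mechanism. It also assumes the hidden decision is made of 16 independent bits compared against a fair-coin prior. The function names and the threshold value are hypothetical.

```python
import math


def bernoulli_kl_to_uniform(p):
    """KL(Bernoulli(p) || Bernoulli(0.5)), measured in bits."""
    kl = 0.0
    for q in (p, 1.0 - p):
        if q > 0.0:  # the 0 * log(0) term is taken as 0
            kl += q * math.log2(2.0 * q)
    return kl


def latent_information(bit_probs):
    """Total information (in bits) the encoder puts into its decision."""
    return sum(bernoulli_kl_to_uniform(p) for p in bit_probs)


def kl_penalty(bit_probs, free_bits):
    """Penalize only the information above the allowed budget."""
    return max(0.0, latent_information(bit_probs) - free_bits)


# An encoder that is fully certain about all 16 bits uses 16 bits of
# information; with a hypothetical budget of 2 bits, 14 are penalized.
confident = [1.0] * 16
undecided = [0.5] * 16
penalty_confident = kl_penalty(confident, free_bits=2.0)
penalty_undecided = kl_penalty(undecided, free_bits=2.0)
```

With a budget of this kind, the encoder cannot simply copy the target text into the hidden decision, because every bit of information beyond the allowance adds to the training loss.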

Structured choices lead to better results on hard tasks

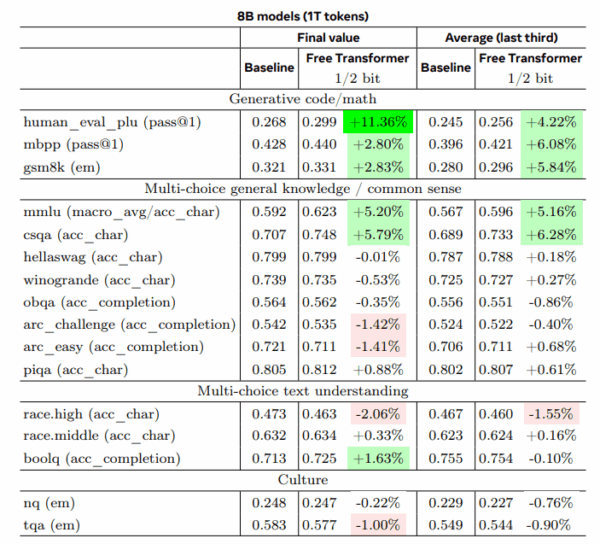

The Free Transformer was tested on models with 1.5 and 8 billion parameters across 16 standard benchmarks. The biggest gains showed up on tasks that require logical thinking.

On code generation, the smaller model outperformed the baseline by 44 percent, even with less training. For math, it scored up to 30 percent higher. The larger model, which had much more training data, still managed an 11 percent bump in code generation and 5 percent on knowledge questions.

Fleuret credits the improvements to the model's ability to make a plan up front. Instead of rethinking every step, the model sets a strategy and sticks to it.

The study notes that the training setup has not yet been tuned for this architecture; the researchers used the same settings as for standard models. Tailored training could make the gains even stronger.

Scaling up to bigger models is still an open question, since these tests used much smaller models than the largest language models on the market.

Fleuret also sees ways to combine this method with other AI techniques. While the Free Transformer makes hidden decisions behind the scenes, other approaches could make those reasoning steps visible in the generated text.
