Microsoft's RUBICON tells if your AI coding buddy is actually helping or just slacking off

Microsoft researchers have developed RUBICON, a technique that automatically assesses the quality of conversations between software developers and AI assistants by generating evaluation criteria tailored to the domain.

For tool developers, evaluating AI assistants such as GitHub Copilot is difficult: the tasks vary widely and the conversations can be complex, so the quality of a human-AI interaction is hard to gauge.

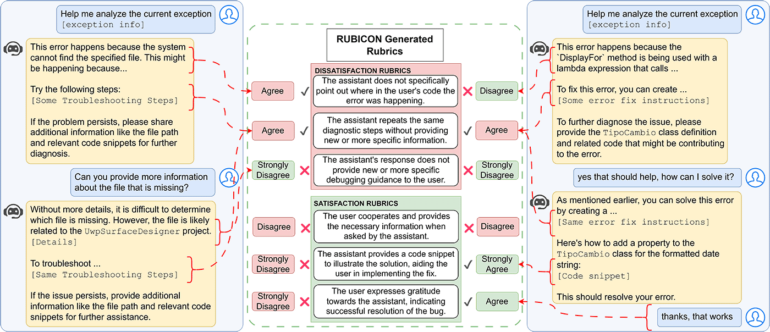

RUBICON, short for "Rubric-based Evaluation of Domain Specific Human-AI Conversations," was presented at the AIware 2024 conference.

The system consists of three main components: generating evaluation criteria, selecting the most relevant of them, and assessing the conversations themselves. To generate criteria, RUBICON first analyzes a training dataset of conversations labeled as positive or negative and identifies patterns that indicate user satisfaction or dissatisfaction.
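The article does not describe how RUBICON extracts these patterns. To make the idea concrete, the following sketch swaps in a much simpler, classic technique: it ranks words by their smoothed log-odds of appearing in positive versus negative conversations. The function names and toy conversations are hypothetical, and RUBICON produces evaluation criteria, not word lists.

```python
import math
from collections import Counter


def doc_freq(conversations):
    """Count in how many conversations each lowercase word appears."""
    return Counter(w for conv in conversations for w in set(conv.lower().split()))


def distinguishing_words(positive, negative, top_k=3, alpha=1.0):
    """Rank words by smoothed log-odds of appearing in positive vs. negative conversations."""
    pos_df, neg_df = doc_freq(positive), doc_freq(negative)
    n_pos, n_neg = len(positive), len(negative)
    scores = {}
    for w in set(pos_df) | set(neg_df):
        # Additive smoothing keeps words seen in only one class from producing log(0).
        p = (pos_df[w] + alpha) / (n_pos + 2 * alpha)
        q = (neg_df[w] + alpha) / (n_neg + 2 * alpha)
        scores[w] = math.log(p / (1 - p)) - math.log(q / (1 - q))
    # Sort by descending score, breaking ties alphabetically for a stable order.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return ranked[:top_k], ranked[::-1][:top_k]


# Hypothetical toy data: two satisfied and two dissatisfied conversations.
positive = ["thanks that fixed it", "thanks works now"]
negative = ["still wrong", "wrong again that fails"]
satisfied, dissatisfied = distinguishing_words(positive, negative)
print(satisfied[0][0], dissatisfied[0][0])  # thanks wrong
```

High-scoring words lean toward satisfied users and low-scoring ones toward dissatisfied users; a word that appears equally often in both classes, such as "that", scores zero.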

Better coding AI

Unlike previous approaches, RUBICON combines domain-specific knowledge with principles of effective communication, such as Grice's Conversational Maxims, which capture four dimensions of conversational effectiveness: quantity, quality, relevance, and manner.

This tailors the generated criteria to the specific application domain. In a second step, RUBICON uses an iterative process to select the subset of generated criteria that best distinguishes positive from negative conversations. Finally, a large language model evaluates each conversation under test against the selected criteria, and a specified threshold decides the verdict.
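The article describes the selection step only as iterative. A minimal sketch, assuming each criterion has already been turned into a numeric score per conversation (in RUBICON a language model does the judging; here the scores, criterion names, and helper functions are hypothetical), uses greedy forward selection with pairwise ranking accuracy (AUC) as the separation measure and a hypothetical threshold of 0.5 for the final call. Both the objective and the threshold are stand-ins, not RUBICON's actual choices.

```python
from itertools import product


def aggregate(conv_scores, subset):
    """Average one conversation's per-criterion scores over a subset of criteria."""
    return sum(conv_scores[c] for c in subset) / len(subset)


def pairwise_auc(pos_vals, neg_vals):
    """Share of (positive, negative) pairs where the positive scores higher; ties count half."""
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p, n in product(pos_vals, neg_vals))
    return wins / (len(pos_vals) * len(neg_vals))


def subset_auc(pos, neg, subset):
    """How well the averaged subset separates positive from negative conversations."""
    return pairwise_auc([aggregate(s, subset) for s in pos],
                        [aggregate(s, subset) for s in neg])


def select_criteria(pos, neg, candidates):
    """Greedy forward selection: add the criterion that most improves AUC, stop when none does."""
    selected, best = [], 0.0
    remaining = list(candidates)
    while remaining:
        cand = max(remaining, key=lambda c: subset_auc(pos, neg, selected + [c]))
        score = subset_auc(pos, neg, selected + [cand])
        if score <= best:
            break  # no remaining criterion improves separation
        selected.append(cand)
        remaining.remove(cand)
        best = score
    return selected, best


def is_positive(conv_scores, selected, threshold=0.5):
    """Label a conversation positive if its mean score on the selected criteria reaches the threshold."""
    return aggregate(conv_scores, selected) >= threshold


# Hypothetical judge scores in [0, 1] for labeled training conversations.
pos = [{"fixes_bug": 1.0, "concise": 0.2, "polite": 1.0},
       {"fixes_bug": 0.0, "concise": 1.0, "polite": 1.0},
       {"fixes_bug": 1.0, "concise": 1.0, "polite": 0.0}]
neg = [{"fixes_bug": 0.0, "concise": 0.0, "polite": 1.0},
       {"fixes_bug": 0.0, "concise": 0.8, "polite": 0.0},
       {"fixes_bug": 1.0, "concise": 0.0, "polite": 1.0}]

selected, auc = select_criteria(pos, neg, ["fixes_bug", "concise", "polite"])
print(selected)  # ['concise', 'fixes_bug']
print(is_positive({"fixes_bug": 1.0, "concise": 0.5, "polite": 0.0}, selected))  # True
```

In this toy data the "polite" criterion is dropped because adding it makes the ranking of positive over negative conversations slightly worse, which is the kind of pruning an iterative selection step is meant to perform.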

The researchers evaluated RUBICON on 100 conversations between developers and an AI assistant for debugging in C#. The criteria generated by RUBICON separated positive from negative conversations significantly better than criteria produced by previous methods or written by hand.

With RUBICON, 84% of conversations could be classified as positive or negative with a precision of over 90%. Previous methods reached at most 64% by the same measure.
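The article does not say exactly how these figures were computed. One common reading is selective classification: the evaluator may abstain on borderline conversations, coverage is the share of conversations it labels, and precision is the share of those labels that are correct. The sketch below assumes that reading, with two hypothetical thresholds and synthetic scores; it illustrates the metrics and does not reproduce the paper's numbers.

```python
def coverage_precision(scores, labels, low, high):
    """Classify score >= high as positive, score <= low as negative, abstain otherwise."""
    decided = correct = 0
    for s, y in zip(scores, labels):
        if s >= high:
            pred = True
        elif s <= low:
            pred = False
        else:
            continue  # abstain: the conversation is left unclassified
        decided += 1
        correct += int(pred == y)
    coverage = decided / len(scores)
    precision = correct / decided if decided else 0.0
    return coverage, precision


def best_band(scores, labels, target=0.9):
    """Search threshold pairs for the largest coverage whose precision is over the target."""
    cuts = sorted(set(scores))
    best = (0.0, None, None)
    for low in cuts:
        for high in cuts:
            if high <= low:
                continue
            cov, prec = coverage_precision(scores, labels, low, high)
            if prec > target and cov > best[0]:
                best = (cov, low, high)
    return best


# Synthetic evaluator scores and ground-truth labels (True = positive conversation).
scores = [0.9, 0.8, 0.75, 0.6, 0.55, 0.4, 0.3, 0.2, 0.1, 0.05]
labels = [True, True, True, False, True, False, False, False, False, False]
print(best_band(scores, labels))  # (0.8, 0.4, 0.75)
```

Here the two borderline conversations (scores 0.6 and 0.55) are ordered the wrong way round, so precision above 90% is only possible by abstaining on both, which caps coverage at 80%.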

According to Microsoft, RUBICON has already been used successfully to monitor two AI assistants in a popular development environment from a large software company.
